What is Kubernetes?

Kubernetes is a portable, extensible open-source platform for deploying, scaling, and managing containerized workloads. It abstracts away complex container management tasks and gives us declarative configuration to orchestrate containers in different computing environments. This orchestration platform gives us the same flexibility and ease of use that we get from Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) solutions.
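To make "declarative configuration" concrete, here is a minimal sketch in Python that builds a Deployment manifest and prints it as JSON. The application name `web`, the image `nginx:1.25`, and the replica count are illustrative assumptions, not values from this article.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment that describes a desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # We declare how many copies we want; Kubernetes works to keep
            # the actual number of running pods equal to this value.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical values chosen only for illustration.
manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed output could be saved to a file and applied with `kubectl apply -f <file>`. The point of the sketch is that we describe what should be running, not the steps to start it.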

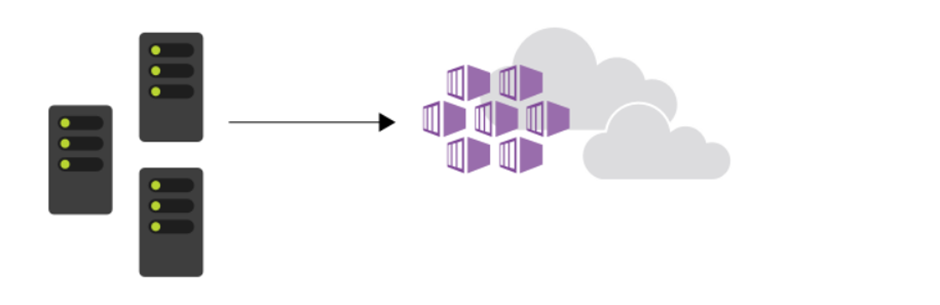

Kubernetes allows you to view your data center as one large computer. We don’t worry about how and where we deploy our containers, only about deploying and scaling our applications as needed.

However, this view can be slightly misleading. There are a few points to keep in mind.

Features of Kubernetes:

- Kubernetes is not a complete PaaS solution. It merely provides a standard set of PaaS capabilities and functions at the container level.

- Kubernetes isn’t monolithic. It’s not a single application that is installed. Aspects such as deployment, scaling, load balancing, logging, and monitoring are all optional. You’re responsible for finding the best solution that fits your needs to address these aspects.

- The sorts of apps that can execute are not constrained by Kubernetes. Your application can run on Kubernetes if it can run in a container. To leverage container solutions to their full potential, your engineers must be familiar with ideas like microservices architecture.

- Middleware, data-processing frameworks, databases, caches, and cluster storage systems are not offered by Kubernetes. All of these products are operated as containers or as a component of another service.

- A Kubernetes deployment is set up as a cluster. A cluster consists of at least one master machine and one or more worker machines. For production deployments, the preferred master configuration is a multi-master high-availability deployment with three to five replicated masters. These machines can be physical hardware or virtual machines (VMs). The worker machines are called nodes or agent nodes.
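One way to see why three to five replicated masters is a common recommendation is to look at majority quorum. The sketch below assumes the control plane keeps its state in a store that needs a majority of members to agree, as etcd does. That assumption and the function names are ours and do not come from the text above.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(
        f"{n} master(s): quorum={quorum(n)}, "
        f"tolerates {failures_tolerated(n)} failure(s)"
    )
```

Under this assumption, going from three to four masters does not increase the number of failures the cluster can survive, which is why odd counts such as three or five are usually chosen.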

With all the benefits you receive with Kubernetes, keep in mind that you’re responsible for maintaining your Kubernetes cluster. For example, you need to manage OS upgrades and the Kubernetes installation and upgrades. You also manage the hardware configuration of the host machines, such as networking, memory, and storage.

Azure Kubernetes Service:

The Azure Kubernetes Service (AKS) simplifies the deployment and management of containerized applications in Azure while managing your hosted Kubernetes environment. Your AKS environment is equipped with functions like automatic upgrades, self-healing, and simple scaling. The Kubernetes cluster master is free and maintained by Azure. You control the cluster’s agent nodes, and you only have to pay for the VMs that your nodes use.

An AKS cluster can be created using:

- Azure CLI: The Azure Command-Line Interface (CLI) is a cross-platform command-line tool that can be installed locally on Windows, macOS, and Linux. You can use the Azure CLI to connect to Azure and execute administrative commands on Azure resources.

- Azure PowerShell: Azure PowerShell is a set of cmdlets for managing Azure resources directly from PowerShell. It is designed to be easy to learn and get started with, while still providing powerful features for automation.

- Azure Portal: The Azure portal is a web-based, unified console that provides an alternative to command-line tools. With the Azure portal, you can manage your Azure subscription using a graphical user interface. You can build, manage, and monitor everything from simple web apps to complex cloud deployments in the portal.
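As a rough illustration of the Azure CLI route, the following Python sketch assembles the commands typically used to create a resource group, create an AKS cluster, and fetch its credentials. It only prints the commands and does not run them. The resource group name, cluster name, region, and node count are hypothetical placeholders.

```python
import shlex

# Hypothetical names used only for illustration.
RESOURCE_GROUP = "demo-rg"
CLUSTER_NAME = "demo-aks"
LOCATION = "eastus"


def aks_create_commands(resource_group, cluster_name, location, node_count):
    """Return the Azure CLI commands for a basic AKS setup as argument lists."""
    return [
        # 1. Create a resource group to hold the cluster.
        ["az", "group", "create",
         "--name", resource_group, "--location", location],
        # 2. Create the AKS cluster with the requested number of agent nodes.
        ["az", "aks", "create",
         "--resource-group", resource_group, "--name", cluster_name,
         "--node-count", str(node_count), "--generate-ssh-keys"],
        # 3. Merge the cluster credentials into the local kubeconfig.
        ["az", "aks", "get-credentials",
         "--resource-group", resource_group, "--name", cluster_name],
    ]


commands = aks_create_commands(RESOURCE_GROUP, CLUSTER_NAME, LOCATION, node_count=2)
for command in commands:
    print(shlex.join(command))
```

Keeping each command as a list of arguments makes it straightforward to pass to `subprocess.run` later if you decide to execute it from a script.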

Azure Kubernetes Service Benefits

Azure Kubernetes Service competes with both Amazon Elastic Kubernetes Service (EKS) and Google Kubernetes Engine (GKE). It offers features for creating, managing, scaling, and monitoring Kubernetes clusters on Azure, which makes it attractive for users of Microsoft Azure. The following are some benefits offered by AKS:

- Efficient resource utilization: As a fully managed service, AKS makes it easy to deploy and manage containerized applications. It elastically provisions additional resources when they are needed, without the headache of managing the Kubernetes infrastructure.

- Faster application development: Developers spend much of their time fixing bugs. AKS handles patching, auto-upgrades, and self-healing, and simplifies container orchestration, which reduces debugging time. This lets developers focus on building their apps and stay productive.

- Security and compliance: Cybersecurity is one of the most important aspects of modern applications and businesses. AKS integrates with Azure Active Directory (Azure AD) and offers on-demand access for users, which greatly reduces threats and risks. AKS also complies with standards and regulatory requirements such as System and Organization Controls (SOC), HIPAA, ISO, and PCI DSS.

- Quicker development and integration: AKS supports auto-upgrades, monitoring, and scaling, and minimizes infrastructure maintenance, which leads to comparatively faster development and integration. It also supports provisioning additional compute resources with serverless Kubernetes within seconds, without you having to manage the Kubernetes infrastructure.

Common uses for Azure Kubernetes Service (AKS):

- Lift and shift to containers with AKS: Easily migrate existing applications to containers and run them in a fully managed Kubernetes service with AKS.

- Microservices with AKS: Use AKS to simplify the deployment and management of microservices-based architecture. AKS streamlines horizontal scaling, self-healing, load balancing, and secret management.

- Secure DevOps for AKS: Kubernetes and DevOps are better together. Achieve the balance between speed and security, and deliver code faster at scale, by implementing secure DevOps with Kubernetes on Azure.

- Bursting from AKS with ACI: Use the AKS virtual node to provision pods inside Azure Container Instances (ACI) that start in seconds. This enables AKS to run with just enough capacity for your average workload.

- Machine learning model training with AKS: Training models using large datasets is a complex and resource-intensive task. Use familiar tools such as TensorFlow and Kubeflow to simplify training of machine learning models.

- Data streaming scenario: Use AKS to easily ingest and process a real-time data stream with millions of data points collected via sensors. Perform fast analysis and computations to develop insights into complex scenarios quickly.
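For the microservices use case above, load balancing is usually expressed as a Kubernetes Service. The sketch below builds a Service of type `LoadBalancer` in Python and prints it as JSON. The service name, label, and ports are hypothetical, and it assumes the pods carry an `app` label.

```python
import json


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a minimal v1 Service that load-balances across matching pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # LoadBalancer asks the cloud provider for an external endpoint.
            "type": "LoadBalancer",
            # Traffic is spread across all pods that carry this label.
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


# Hypothetical microservice named "orders" listening on port 8080.
service = load_balancer_service("orders-svc", "orders", port=80, target_port=8080)
print(json.dumps(service, indent=2))
```

Because the Service selects pods by label rather than by name, pods that are replaced during self-healing or added during scaling are picked up without changing the Service.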

When to use AKS:

Here, we’ll discuss how you can decide whether Azure Kubernetes Service (AKS) is the right choice for you.

You’ll approach your decision from either a greenfield or a lift-and-shift project point of view. A greenfield project will allow you to evaluate AKS based on default features. A lift-and-shift project will force you to look at which features are best suited to support your migration.

We saw earlier that there are several features that enhance the AKS Kubernetes offering. Each of these features can be a compelling factor in your decision to use AKS.

| Feature | Consideration |
|---|---|
| Identity and security management | Do you already use existing Azure resources and make use of Azure AD? You can configure an AKS cluster to integrate with Azure AD and reuse existing identities and group membership. |
| Integrated logging and monitoring | AKS includes Azure Monitor for containers to provide performance visibility of the cluster. With a custom Kubernetes installation, you would normally have to choose a monitoring solution and then install and configure it. |
| Automatic cluster node and pod scaling | Deciding when to scale up or down in a large containerization environment is tricky. AKS supports two automatic scaling options: the horizontal pod autoscaler and the cluster autoscaler. The horizontal pod autoscaler watches the resource demand of pods and increases the number of pods to match demand. The cluster autoscaler watches for pods that can’t be scheduled because of node constraints and automatically scales cluster nodes so that those pods can be deployed. |
| Cluster node upgrades | Do you want to reduce the number of cluster management tasks? AKS manages Kubernetes software upgrades and the process of cordoning off nodes and draining them to minimize disruption to running applications. Once done, these nodes are upgraded one by one. |
| GPU-enabled nodes | Do you have compute-intensive or graphics-intensive workloads? AKS supports GPU-enabled node pools. |
| Storage volume support | Is your application stateful, and does it require persisted storage? AKS supports both static and dynamic storage volumes. Pods can attach and reattach to these storage volumes as they’re created or rescheduled on different nodes. |
| Virtual network support | Do you need pod-to-pod network communication or access to on-premises networks from your AKS cluster? An AKS cluster can be deployed into an existing virtual network with ease. |
| Ingress with HTTP application routing support | Do you need to make your deployed applications publicly available? The HTTP application routing add-on makes it easy to access applications deployed to an AKS cluster. |
| Docker image support | Do you already use Docker images for your containers? AKS supports the Docker image format by default. |
| Private container registry | Do you need a private container registry? AKS integrates with Azure Container Registry (ACR). You aren’t limited to ACR though; you can use other container registries, public or private. |
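The pod scaling decision in the table above can be sketched with the replica calculation that the Kubernetes documentation gives for the horizontal pod autoscaler: the current replica count is multiplied by the ratio of the observed metric to the target metric and rounded up. The version below is a simplification that ignores the tolerance band and stabilization windows of the real controller. The replica bounds and sample values are assumptions chosen for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified horizontal pod autoscaler replica calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to the configured minimum and maximum.
    """
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical readings: average CPU utilization (%) against a 50% target.
print(desired_replicas(3, current_metric=90, target_metric=50))   # load above target
print(desired_replicas(4, current_metric=25, target_metric=50))   # load below target
print(desired_replicas(5, current_metric=200, target_metric=50))  # capped at max_replicas
```

If the resulting pods cannot be scheduled on the existing nodes, that is the situation the cluster autoscaler reacts to by adding nodes.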

Amazon EKS Vs. AKS:

Developers love containerization, and Kubernetes (K8s) is a leading open-source system for deploying and managing multi-container applications at scale.

However, a significant challenge is choosing the best managed Kubernetes service for your application development.

| | Amazon EKS | Azure AKS |
|---|---|---|
| High availability of clusters | EKS provides HA across both worker and master nodes, spread over different availability zones | AKS provides a manual approach to HA, with clusters deployed in Availability Zones for greater availability |
| SLA | EKS guarantees 99.95% uptime | No SLA for the free version. For $0.10 per cluster per hour, AKS provides 99.95% uptime for the Kubernetes API server for clusters that use Azure Availability Zones and 99.9% for clusters that do not |
| SLA financially backed | Yes | Yes, for an extra fee |
| Resource availability with node pools | EKS provides functionality for node pooling | AKS also supports node pools, including GPU-enabled node pools |
| Scalability | EKS provides auto-scaling policies, but they have to be set manually | AKS provides Cluster Autoscaler by default |
| Control plane: log collection | Logs are sent to AWS CloudWatch | Logs are sent to Azure Monitor |
| Bare metal clusters | EKS will support the use of bare metal nodes via Amazon EKS Anywhere | AKS does not support bare metal nodes |
| GPU support | Yes (NVIDIA); user must install device plugin in cluster | Yes (NVIDIA); user must install device plugin in cluster |
| Resource limits | EKS handles limits per account | AKS handles limits per subscription |
| Resource monitoring | EKS offers Insights Metrics | AKS offers Azure Monitor |
| Secure image management | Elastic Container Registry | Azure Container Registry |
| CNCF Kubernetes conformance | Yes | Yes |
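To put the SLA row in perspective, the following sketch converts the uptime percentages from the table into allowed downtime per month and estimates the monthly cost of the $0.10 per cluster per hour fee. The 30-day month and the 730-hour average month are assumptions made for the arithmetic.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted in `days` days at the given uptime."""
    total_minutes = days * 24 * 60
    return total_minutes * (100 - uptime_percent) / 100


def monthly_fee(hourly_rate: float, hours: int = 730) -> float:
    """Approximate monthly cost for a per-hour fee (730 hours is an assumed average month)."""
    return hourly_rate * hours


for uptime in (99.95, 99.9):
    minutes = allowed_downtime_minutes(uptime)
    print(f"{uptime}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")

print(f"Uptime SLA fee: about ${monthly_fee(0.10):.2f} per cluster per month")
```

Under these assumptions, the difference between 99.95% and 99.9% is roughly 22 minutes of additional permitted downtime per month, which may or may not matter depending on the workload.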

AKS and EKS are managed Kubernetes services from two of the biggest cloud computing providers. Both are mature services that aim for consistent performance.

When choosing a cloud provider to use, it’s important to consider your level of expertise, familiarity with each offering, and the total cost.

With EKS, you get high availability at no extra cost and more customization options than you get with Azure Kubernetes Service.