AWS SageMaker is Amazon’s cloud service for building, training, and deploying machine learning models at scale. It also makes it easy to automate these steps so that developers can reuse them.

Training a machine learning (ML) model from scratch on a private dataset might be the ideal way to tailor it to one’s use case. But with advances in transformer models, most ML tasks can now be performed with a pretrained model. With transfer learning, you can take a pretrained model and fine-tune it on your custom dataset so that it fits your specific use case.

With increased access to open pretrained models and datasets, this has become much easier to accomplish. HuggingFace hosts an open repository of pretrained models and datasets, along with its own libraries to streamline training and deploying ML models. AWS and HuggingFace have built an integration for SageMaker that makes training or deploying your ML model as easy as copying a set of commands into AWS SageMaker.

For the rest of this blog, I will explain how to deploy a pretrained ML model from HuggingFace to AWS SageMaker. At the end, I will show how to get inference from the deployed model.

Pretrained Models in HuggingFace

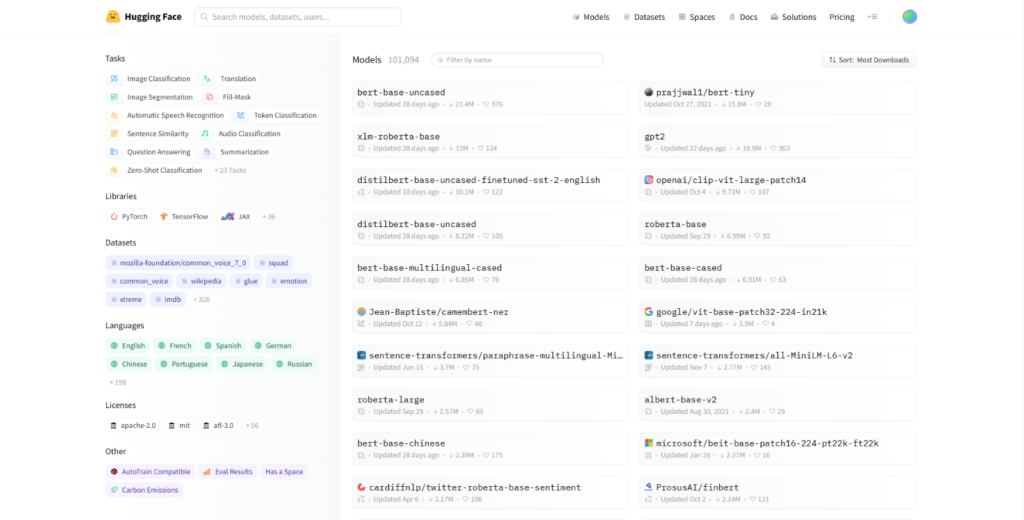

HuggingFace has organized its repository by ML task, so searching for a model is very easy.

A snapshot of different filters and models available in HuggingFace

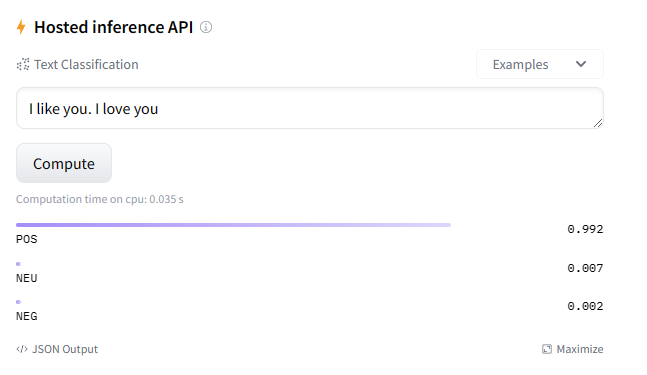

For this exercise, I am choosing the pretrained model ‘finiteautomata/bertweet-base-sentiment-analysis’, which predicts the sentiment of a tweet.

Snapshot of the hosted API in HuggingFace to test the pretrained model

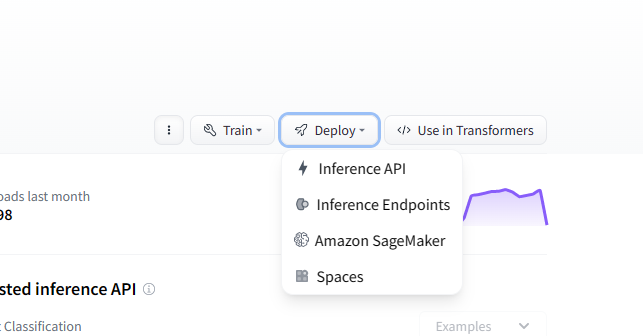

Once you have identified the model to use, click Deploy and choose the Amazon SageMaker option, as shown below.

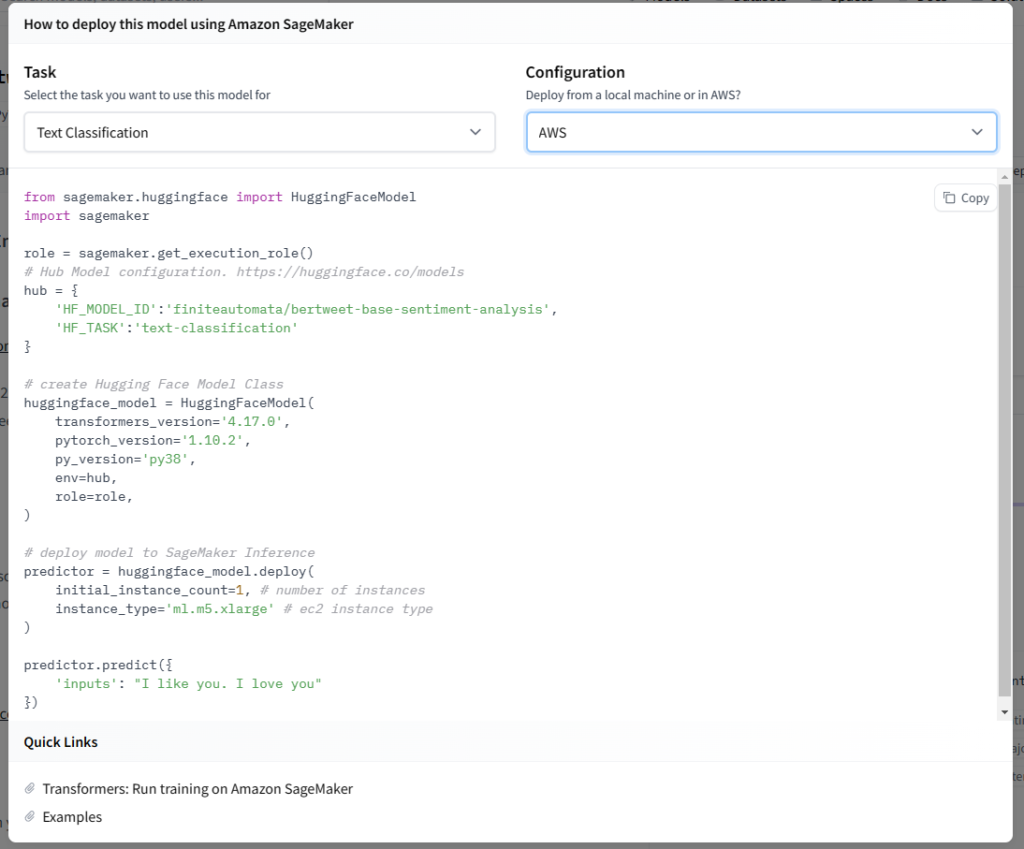

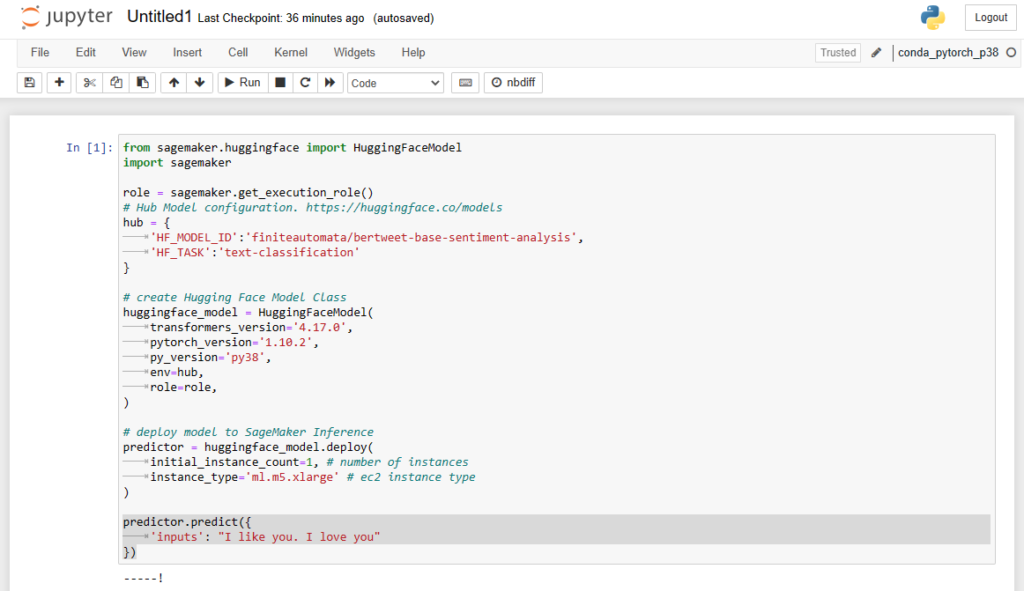

Once you choose the Task and Configuration, you get sample code to get the model up and running in AWS SageMaker.

Sample code to deploy finiteautomata/bertweet-base-sentiment-analysis
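For reference, here is a minimal sketch of what the generated deployment code typically looks like, using the `HuggingFaceModel` class from the SageMaker Python SDK. The function names `hub_config` and `deploy_model` are my own for illustration, and the framework versions and instance type are assumptions. Treat the snippet generated by the Hub's deploy dialog as the source of truth for those values.

```python
# Model and task selected on the HuggingFace Hub for this exercise.
MODEL_ID = "finiteautomata/bertweet-base-sentiment-analysis"
TASK = "text-classification"


def hub_config(model_id=MODEL_ID, task=TASK):
    """Environment variables that tell the inference container which model to load."""
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}


def deploy_model(instance_type="ml.m5.xlarge", instance_count=1):
    """Create a SageMaker endpoint serving the Hub model and return its predictor."""
    # Imported here so this cell still loads where the SageMaker SDK is not installed.
    import sagemaker
    from sagemaker.huggingface import HuggingFaceModel

    # Works inside a SageMaker notebook; elsewhere, supply an IAM role ARN instead.
    role = sagemaker.get_execution_role()

    model = HuggingFaceModel(
        env=hub_config(),
        role=role,
        # Assumed versions: replace with the ones from your generated snippet.
        transformers_version="4.26.0",
        pytorch_version="1.13.1",
        py_version="py39",
    )
    # Provisions the endpoint; this is the step that takes a few minutes.
    return model.deploy(
        initial_instance_count=instance_count,
        instance_type=instance_type,
    )


# In a notebook cell you would then run:
# predictor = deploy_model()
```

The deployment call is left commented out on purpose, since running it creates a billable endpoint.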

AWS SageMaker

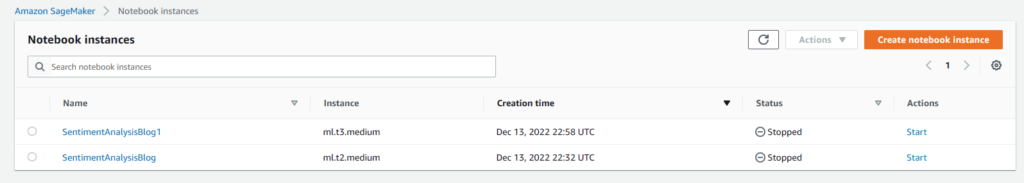

The first step is to create a Notebook instance in AWS SageMaker.
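I created the notebook instance from the AWS console, but if you prefer to script it, the same step can be sketched with boto3. This is only a sketch under assumptions: the helper names, the instance type, and the volume size are hypothetical choices of mine, and the role ARN must be an IAM role in your account that SageMaker is allowed to assume.

```python
def notebook_request(name, role_arn, instance_type="ml.t3.medium", volume_gb=5):
    """Build the arguments for SageMaker's create_notebook_instance call."""
    return {
        "NotebookInstanceName": name,
        "InstanceType": instance_type,  # assumed size; pick what fits your budget
        "RoleArn": role_arn,
        "VolumeSizeInGB": volume_gb,
    }


def create_notebook(name, role_arn):
    """Create the notebook instance and wait until it is ready to open."""
    # Imported here so the helper above can be used without boto3 installed.
    import boto3

    client = boto3.client("sagemaker")
    client.create_notebook_instance(**notebook_request(name, role_arn))
    # Block until the instance status becomes InService.
    client.get_waiter("notebook_instance_in_service").wait(
        NotebookInstanceName=name
    )


# Example call (hypothetical name and role ARN):
# create_notebook("hf-deploy-demo", "arn:aws:iam::123456789012:role/MySageMakerRole")
```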

Once the notebook instance is up and running, open it and click Open Jupyter.

Once inside, create a new conda_pytorch_p38 notebook and paste in the code you copied earlier.

Make any required changes and click Run. Depending on the model chosen, it will take a few minutes for the execution to complete.

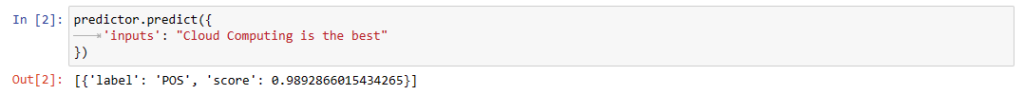

Once the execution is complete, it is easy to test inference against the deployed model.
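A minimal sketch of such a test is shown here. It assumes the predictor returned by the deployment accepts a dictionary with an `inputs` key and returns a list of label and score pairs, which is the usual shape for a text-classification task. The `classify` helper and the `FakePredictor` stand-in (with its made-up label and score) are hypothetical and exist only so the helper can be tried without a live endpoint.

```python
def classify(predictor, text):
    """Send one text to the endpoint and return (label, score) of the top class."""
    # The HuggingFace inference container expects a payload with an "inputs" key.
    response = predictor.predict({"inputs": text})
    # Assumed response shape: [{"label": ..., "score": ...}, ...]
    best = max(response, key=lambda item: item["score"])
    return best["label"], best["score"]


class FakePredictor:
    """Offline stand-in that mimics the assumed response shape with dummy values."""

    def predict(self, payload):
        return [{"label": "POS", "score": 0.99}]


label, score = classify(FakePredictor(), "I love this!")
print(label, score)
```

In the notebook, you would pass the real predictor from the deployment step in place of `FakePredictor()`.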

With this example deployment, I hope I have given you enough encouragement to deploy your own idea at scale.

The following video is a guide to deploying a transformers model from HuggingFace to AWS SageMaker