Ever needed to host a simple static website? Would you like to create an S3 bucket, upload the required files, and never once touch the AWS console? With the python AWS SDK (software development kit), boto3, it is easy to configure an S3 bucket to host a static website and upload the required files to it.

In this tutorial, learn about:

- the python AWS SDK, boto3

- setting up a python program to interact with AWS S3

- using S3 as a static website host

Requirements

In this tutorial, it is assumed that a few tools are already installed in the programming environment. Some of these include:

- python installation, version 3.8 or newer

- AWS CLI installed, version 2 or newer

- IDE/text editor, VS Code is highly recommended

- access to a terminal

This walkthrough also assumes that the program will be run in a Unix-like environment, such as Ubuntu 20.04 LTS. This tutorial has not been tested on Windows or in any other environment.

If python or the AWS CLI are not already installed in the Unix-like environment used, these links should help:

Installing python 3.8 in Ubuntu 20.04 LTS https://www.digitalocean.com/community/tutorials/how-to-install-python-3-and-set-up-a-programming-environment-on-an-ubuntu-20-04-server

Installing AWS CLI 2.X in Ubuntu 20.04 LTS https://docs.aws.amazon.com/cli/latest/userguide/getting-started-install.html

Setting Up the Environment

First, set up the environment to allow a connection to AWS and to work with the required python packages.

Configuring the AWS CLI

The following is a simple way to configure the AWS CLI to interact with the user's AWS account.

Run the following command in the terminal to begin the process:

```bash
aws configure
```

Next, you will be prompted for the following information, in order:

- Access Key ID

- Secret Access Key

- AWS Region – the region you will use for your AWS account

- Output format – this tutorial uses JSON, but there are other options such as yaml, text, table, etc.

The access key ID and secret access key can be created by following the instructions at: https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-quickstart.html#cli-configure-quickstart-creds

Python Packages

This tutorial will use the following python packages:

- boto3, used to create a client that interacts with AWS and our S3 bucket.

- json, a standard python package. It is built into python, so no installation is required.

- pprint, used to format some of the JSON responses received from AWS. It is also part of the standard library.

- botocore, a lower-level interface to AWS services. Here it is primarily used for error handling.

- pipenv, which sets up a virtual environment and provides a package manager.

Fortunately, only the boto3 and botocore packages need to be installed with pipenv, which makes setup at this point easy. Run the following command in the terminal to install pipenv on Ubuntu 20.04 LTS:

```bash
pip install pipenv
```

Run the following command in the terminal to install both the boto3 and botocore packages using pipenv:

```bash
pipenv install botocore boto3
```

Next, run the following command to start the virtual environment:

```bash
pipenv shell
```

Now that the environment is fully configured, move on to the actual python program.

create_s3_bucket Function

This function only creates new S3 buckets. If a bucket with the same name already exists, the request fails, and the function prints the error returned by AWS and returns False.

S3 bucket names must follow a few rules, so keep these in mind:

- the bucket name must be globally unique: no other bucket can have the same name as yours

- names can contain only lowercase letters, numbers, dots (.), and hyphens (-), so underscores and uppercase letters are not allowed; names must also begin and end with a letter or number

- names must be between 3 and 63 characters long

If any of the above rules is violated, or the bucket cannot be created for any other reason, boto3 raises a ClientError; the function catches it, prints the error, and returns False, and the program will exit.
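The naming rules above can be checked locally before any request is sent to AWS. The following is a minimal sketch; `is_valid_bucket_name` is a hypothetical helper, not part of boto3 or the tutorial's script, and it checks only the rules listed here. AWS enforces a few more (for example, no adjacent dots and no names formatted like an IP address), and global uniqueness can only be confirmed by AWS itself.

```python
import re

# Hypothetical helper: checks only the naming rules listed in this tutorial.
# Lowercase letters, numbers, dots, and hyphens; must start and end with a
# letter or number.
_BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def is_valid_bucket_name(name):
    """Return True if name satisfies the basic S3 naming rules above."""
    # names must be between 3 and 63 characters long
    if not 3 <= len(name) <= 63:
        return False
    # fullmatch requires the whole string to match the allowed pattern
    return _BUCKET_NAME_RE.fullmatch(name) is not None
```

Calling it on the name the user types (for example, `is_valid_bucket_name("cs790-test")`) lets the program reject an obviously bad name such as `my_bucket` before create_s3_bucket makes a round trip to AWS.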

```python
from botocore.exceptions import ClientError


def create_s3_bucket(s3, bucket_name, region):
    '''
    create a new s3 bucket if a bucket of the same name does not already exist.

    s3 - the s3 client instantiated with the help of boto3
    bucket_name - a string with the name of the bucket
    region - the name of the region the s3 bucket should be created in (e.g. us-east-1)
    '''
    # attempt to create bucket
    try:
        if region == 'us-east-1':
            # us-east-1 is the default location; S3 rejects an explicit
            # LocationConstraint of us-east-1 with InvalidLocationConstraint
            s3.create_bucket(Bucket=bucket_name)
        else:
            location = {'LocationConstraint': region}
            s3.create_bucket(Bucket=bucket_name, CreateBucketConfiguration=location)
    except ClientError as e:
        print("Error: \n\n", e)
        return False

    return True  # return true if successful
```
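When create_s3_bucket fails, the printed ClientError can be hard to read. A botocore ClientError carries the parsed error in its `response` attribute, with the code under `response["Error"]["Code"]`. The sketch below maps a few common create_bucket error codes to short hints; `explain_create_bucket_error` and the hint text are hypothetical and the list is illustrative, not exhaustive.

```python
# Hypothetical hint table for a few error codes create_bucket can return.
CREATE_BUCKET_HINTS = {
    "BucketAlreadyExists": "the name is taken by another AWS account; choose a different name",
    "BucketAlreadyOwnedByYou": "you already own a bucket with this name",
    "InvalidBucketName": "the name breaks the S3 naming rules",
    "InvalidLocationConstraint": "the region passed as LocationConstraint is not valid for this request",
}


def explain_create_bucket_error(error_response):
    """Return a hint for a ClientError response dict (e.response)."""
    # error_response has the shape {"Error": {"Code": ..., "Message": ...}, ...}
    code = error_response.get("Error", {}).get("Code", "")
    return CREATE_BUCKET_HINTS.get(code, "unrecognized error code: " + code)
```

Inside the `except ClientError as e:` branch, `print(explain_create_bucket_error(e.response))` would print the hint alongside the raw error.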

s3_public_policy Function

This function will add the proper policy to the S3 bucket, which allows all objects stored in it to be publicly accessible.

Here is the policy itself:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::<BUCKET-NAME>/*"
        }
    ]
}
```

The policy allows anyone to read (s3:GetObject) the objects in the bucket. In the python program, <BUCKET-NAME> is automatically replaced with the provided bucket name. However, if the bucket could not be created in the first place, this function never runs.

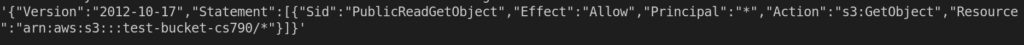

The function will print a JSON string with all policies applied to the bucket in the variable, result. Below is a sample result:

```python
import json
import pprint


def s3_public_policy(s3, bucket_name):
    '''
    add a new policy to the s3 bucket, allowing the contents of the bucket to be publicly accessible.
    this is a requirement which allows objects in the s3 bucket to be accessible to the internet.
    the function will print all policies applied to the s3 bucket after applying the new public policy.

    s3 - the s3 client instantiated with the help of boto3
    bucket_name - a string containing the name of the s3 bucket
    '''
    pub_policy_dict = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject"],
                "Resource": "arn:aws:s3:::{}/*".format(bucket_name)
            }
        ]
    }

    # convert the policy dictionary object to JSON formatted string
    bucket_policy = json.dumps(pub_policy_dict)

    # add the policy to the s3 bucket (the parameter name is Policy, capitalized)
    s3.put_bucket_policy(Bucket=bucket_name, Policy=bucket_policy)

    # get s3 bucket policy after adding new policy
    result = s3.get_bucket_policy(Bucket=bucket_name)

    # result["Policy"] holds the policy document as a JSON string
    pprint.pprint(result["Policy"])
```
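Since April 2023, AWS enables S3 Block Public Access on new buckets by default, and while the BlockPublicPolicy setting is on, put_bucket_policy rejects a public policy like the one above with an Access Denied error. A minimal sketch of relaxing only the two policy-related settings on this bucket, using the S3 client's put_public_access_block call, is shown below; `allow_public_policy` is a hypothetical helper that would be called between create_s3_bucket and s3_public_policy. It assumes Block Public Access is not also enforced at the account level, which would still block the policy.

```python
def allow_public_policy(s3, bucket_name):
    '''
    hypothetical helper: relax the bucket-level Block Public Access settings
    so that a public-read bucket policy can be attached.

    s3 - the s3 client instantiated with the help of boto3
    bucket_name - a string containing the name of the s3 bucket
    '''
    s3.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration={
            # the website relies on a bucket policy, not ACLs, so ACL blocks can stay on
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            # allow put_bucket_policy to attach a public policy
            "BlockPublicPolicy": False,
            # allow anonymous access granted by that policy
            "RestrictPublicBuckets": False,
        },
    )
```

Leaving the two ACL settings enabled keeps public access limited to what the bucket policy explicitly grants.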

s3_website_config Function

The function will apply configurations to the S3 bucket that enable it to serve static webpages. The only required configuration is the IndexDocument. Configuring an error file is optional, as AWS will serve up a generic one when needed.

In this tutorial, we are only configuring a simple index.html file, which will be available in the zip file at the end of the tutorial to test with.

```python
import pprint


def s3_website_config(s3, bucket_name):
    '''
    configure the s3 bucket to act as a static website host.
    after applying the configurations, the static website hosting
    configuration of the s3 bucket will be printed.

    s3 - the s3 client instantiated with the help of boto3
    bucket_name - a string with the name of the bucket
    '''
    website_config = {"IndexDocument": {"Suffix": "index.html"}}

    s3.put_bucket_website(Bucket=bucket_name,
                          WebsiteConfiguration=website_config)

    # read the configuration back and print it, skipping the HTTP metadata
    result = s3.get_bucket_website(Bucket=bucket_name)
    pprint.pprint({k: v for k, v in result.items() if k != "ResponseMetadata"})
```
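Because the error document is optional, the WebsiteConfiguration dictionary can be built with or without it. The sketch below is a hypothetical helper, `build_website_config`, that always sets the required IndexDocument and adds an ErrorDocument only when a key is given; `error.html` in the usage line is an assumed file name, not one shipped with the tutorial.

```python
def build_website_config(index_suffix="index.html", error_key=None):
    '''
    hypothetical helper: build the WebsiteConfiguration dict passed to
    put_bucket_website. IndexDocument is required; ErrorDocument is optional.
    '''
    config = {"IndexDocument": {"Suffix": index_suffix}}
    if error_key is not None:
        # S3 serves this object for 4XX errors instead of its generic error page
        config["ErrorDocument"] = {"Key": error_key}
    return config
```

Usage would look like `s3.put_bucket_website(Bucket=bucket_name, WebsiteConfiguration=build_website_config(error_key="error.html"))`, after uploading the error page itself with a text/html content type.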

upload_file Function

upload_file will upload the index.html file to the S3 bucket. It is pretty simple to do; however, there is one small catch.

Notice the ExtraArgs dictionary? By default, upload_file stores the object with the generic content type binary/octet-stream. For S3 to serve index.html as a webpage, the program needs to specify that the file is HTML (text/html). Without the information provided by extra_args, the browser will not render the webpage; instead, it will attempt to download the file when navigating to the website.

```python
from boto3.exceptions import S3UploadFailedError
from botocore.exceptions import ClientError


def upload_file(s3, bucket_name, file_name):
    '''
    upload a file to s3 and customize the ContentType argument to text/html.
    without the custom ContentType argument, the file is stored as binary/octet-stream
    and AWS will not recognize that the index.html file is an actual html text file.

    s3 - the s3 client instantiated with the help of boto3
    bucket_name - a string containing the name of the s3 bucket
    file_name - a string containing the name of the index.html file
    '''
    try:
        extra_args = {'ContentType': 'text/html'}
        s3.upload_file(file_name, bucket_name, file_name, ExtraArgs=extra_args)
    except (ClientError, S3UploadFailedError, OSError) as e:
        # upload_file reports failed uploads as S3UploadFailedError;
        # OSError covers a local file that cannot be read (e.g. missing)
        print("Error: \n\n", e)
        return False

    return True  # return true if successful
```

Code Usage and Final Outputs

Code Usage

To use the sample code from this tutorial, download and unzip the s3_upload zip file linked at the end of this tutorial.

The unzipped folder will contain index.html and s3_upload.py. At this point, replace the provided index.html with the intended index.html if required. If the intended website includes multiple files, the code will need to be altered to loop through each file, uploading each one with a content type based on its file extension.
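One way to extend the program to multiple files is to walk a folder and pick each file's content type from its extension. The sketch below uses the standard library's mimetypes module for the guess; `content_type_for` and `iter_site_files` are hypothetical helpers, and the fallback to binary/octet-stream mirrors what S3 stores when no type is given.

```python
import mimetypes
import os


def content_type_for(path):
    """Guess a Content-Type from the file extension, e.g. text/html for .html."""
    guessed, _encoding = mimetypes.guess_type(path)
    # fall back to the generic type S3 uses when none is given
    return guessed or "binary/octet-stream"


def iter_site_files(root):
    """
    Hypothetical helper: yield (local_path, object_key, content_type) for every
    file under root. Keys are relative to root and use forward slashes, so
    root/css/site.css becomes the key css/site.css.
    """
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            local_path = os.path.join(dirpath, name)
            key = os.path.relpath(local_path, root).replace(os.sep, "/")
            yield local_path, key, content_type_for(name)
```

Each tuple can then be passed to the client, for example `s3.upload_file(local_path, bucket_name, key, ExtraArgs={"ContentType": content_type})`, in place of the single hard-coded text/html upload.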

To run the code, enter the following line of code in the terminal:

```bash
python s3_upload.py
```

The program then prompts the user for the intended bucket name, region, and name of the file to be uploaded.

The code has a default region, us-east-2. When prompted for the region, the user can press Enter without typing a region name to use it.

Additionally, when prompted for the file name, enter the name of a file in the same directory as s3_upload.py. upload_file uses the file name as both the local path and the S3 object key, so to upload files from elsewhere, the behavior of upload_file will need to be altered.
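The prompt-with-a-default behavior described above can be sketched with a small wrapper around input(). This is not the tutorial's actual prompting code; `ask` is a hypothetical helper, and the `read` parameter exists only so the function can be exercised without a terminal. The default region in the usage line is just an example value.

```python
def ask(prompt, default=None, read=input):
    """
    Hypothetical helper: prompt the user and fall back to default when they
    just press Enter. read is injectable so the function can be tested.
    """
    # show the default in brackets, e.g. "Region [us-east-2]: "
    suffix = " [{}]".format(default) if default else ""
    answer = read("{}{}: ".format(prompt, suffix)).strip()
    # an empty answer means "use the default"
    return answer or default
```

For example, `region = ask("Region", default="us-east-2")` returns us-east-2 when the user presses Enter, and whatever region they typed otherwise.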

Final Output

The final output of the code is the URL of the website. The following URL is a skeleton of the website URL:

```
http://<BUCKET-NAME>.s3-website.<REGION>.amazonaws.com
```

In the above URL, substitute the following:

- BUCKET-NAME – the name of the bucket provided to the program, e.g. cs790-test

- REGION – the region you provided; if none was provided, use us-east-2

Some older regions, such as us-east-1, use a dash instead of a dot before the region name, e.g. http://<BUCKET-NAME>.s3-website-us-east-1.amazonaws.com
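The substitution can be wrapped in a small function that also picks the endpoint style. In the sketch below, `website_url` is a hypothetical helper, and the set of dash-style regions is an assumption based on the AWS website endpoint table at the time of writing; check that table before relying on it.

```python
# Assumption: regions whose website endpoint uses the older dash style
# (s3-website-<region>); all other regions use s3-website.<region>.
DASH_STYLE_REGIONS = {
    "us-east-1", "us-west-1", "us-west-2", "ap-southeast-1",
    "ap-southeast-2", "ap-northeast-1", "eu-west-1", "sa-east-1",
}


def website_url(bucket_name, region):
    """Hypothetical helper: build the S3 static website URL for a bucket."""
    if region in DASH_STYLE_REGIONS:
        return "http://{}.s3-website-{}.amazonaws.com".format(bucket_name, region)
    return "http://{}.s3-website.{}.amazonaws.com".format(bucket_name, region)
```

For example, `website_url("cs790-test", "us-east-2")` gives http://cs790-test.s3-website.us-east-2.amazonaws.com.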

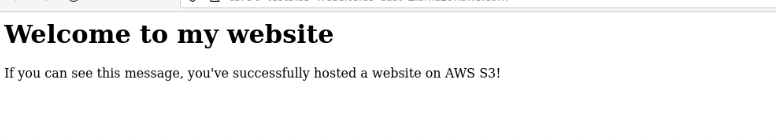

Here is a sample of what should be seen when navigating to the proper website.

Here is a link to download a zip file containing the python program s3_upload.py and sample index.html file:

References

botocore Documentation https://botocore.amazonaws.com/v1/documentation/api/latest/index.html

boto3 Documentation https://boto3.amazonaws.com/v1/documentation/api/latest/index.html

AWS s3 Tutorial https://docs.aws.amazon.com/AmazonS3/latest/userguide/HostingWebsiteOnS3Setup.html

pprint Documentation https://docs.python.org/3/library/pprint.html

JSON Documentation https://docs.python.org/3.8/library/json.html

AWS CLI Quick Setup Documentation https://docs.aws.amazon.com/cli/latest/userguide/getting-started-quickstart.html

pipenv Documentation https://pipenv-fork.readthedocs.io/en/latest/

AWS s3 Bucket Naming Conventions https://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-s3-bucket-naming-requirements.html