Amazon Simple Storage Service (Amazon S3) is an object storage service commonly used for data analytics, machine learning, website hosting, and many other workloads. To start working with Amazon S3 programmatically, you need an AWS account and the AWS Software Development Kit (SDK) installed with your AWS credentials configured. This article covers Boto3, the AWS SDK for Python.

Boto3 is the Python SDK for Amazon Web Services (AWS). It lets you manage AWS services programmatically from your applications and services. You can do everything you do in the AWS Console, and more, in a faster, repeatable, and automated way with Python code. With Amazon S3, Boto3 lets you create, update, and delete S3 buckets, objects, and bucket policies from Python programs or scripts.

Boto3 is commonly used to integrate Python applications with various AWS services. Its two most commonly used features are Clients and Resources.

Resources are a higher-level abstraction than clients. They are generated from JSON resource descriptions that ship with the Boto3 library itself.

Resources provide an object-oriented interface for interacting with various AWS services.

Here is the link on how to create S3 Buckets.

Prerequisites:

In general, here’s what you need:

- Python 3

- Boto3

- AWS S3 bucket

Install Boto3 dependencies:

Python comes by default in Ubuntu Server, so you do not need to install it.

To check the Python version on your system, use the following commands.

which python3

/usr/bin/python3 --version

or

python3 --version

If you do not have pip and you are using Ubuntu, execute the following command to first update the local package index.

sudo apt update

To install pip, use the following command.

sudo apt install python3-pip

To check the version of pip installed, execute the following command.

pip3 --version

Once you have Python and pip, you can install Boto3.

Installing Boto3 is simple and straightforward. To install Boto3, use the following command.

pip3 install boto3

To check whether Boto3 is installed and which version you have, execute the following command.

pip3 show boto3

How to connect to S3 using Boto3?

The Boto3 library provides you with two ways to access APIs for managing AWS services:

- The client, which gives you access to the low-level API. For example, you can access API response data as JSON-like Python dictionaries.

- The resource, which lets you use AWS services in a higher-level, object-oriented way.

Here’s how you can instantiate the Boto3 client to start working with Amazon S3 APIs:

Connecting to Amazon S3 API using Boto3:

import boto3

AWS_REGION = "us-east-1"

client = boto3.client("s3", region_name=AWS_REGION)

Here’s an example of using the boto3.resource method:

import boto3

resource = boto3.resource('s3')

From here, I will demonstrate Boto3 resources.

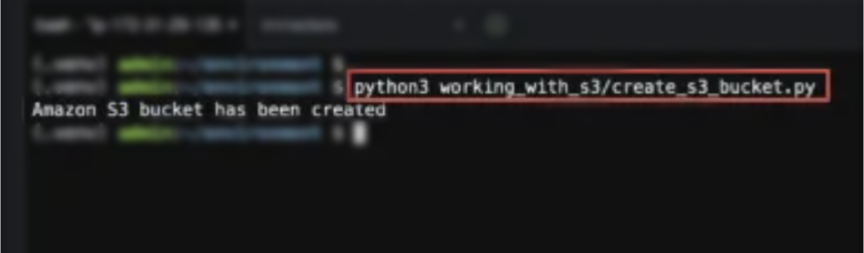
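The two interfaces are not mutually exclusive. As a rough sketch, the helper below (the name `bucket_region` is hypothetical and not part of Boto3) takes a resource created with `boto3.resource("s3")`, reaches the low-level client it wraps through `resource.meta.client`, and reads a value out of the dictionary that `get_bucket_location()` returns. It assumes your credentials are allowed to read the bucket's location.

```python
def bucket_region(s3_resource, bucket_name):
    """Return the Region a bucket lives in, using the client behind a resource.

    `s3_resource` is expected to be the object returned by
    boto3.resource("s3"); the helper name is illustrative only.
    """
    # Every Boto3 resource exposes the low-level client it wraps.
    client = s3_resource.meta.client

    # Client calls return plain dictionaries parsed from the API response.
    response = client.get_bucket_location(Bucket=bucket_name)

    # S3 reports buckets in us-east-1 with a LocationConstraint of None.
    return response.get("LocationConstraint") or "us-east-1"
```

With the resource from the example above, a call would look like `bucket_region(resource, "my-bucket")`, where `"my-bucket"` stands in for one of your own buckets.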

Creating S3 Bucket using Boto3 resource:

To avoid errors while working with Amazon S3, we strongly recommend that you set a specific AWS Region explicitly, rather than relying on the default, for both the Boto3 client (or resource) and the S3 bucket configuration. For any Region other than us-east-1, the LocationConstraint in the bucket configuration must name the same Region:

You can use the Boto3 resource to create an Amazon S3 bucket:

import boto3

AWS_REGION = "us-east-2"

resource = boto3.resource("s3", region_name=AWS_REGION)

# S3 bucket names must be lowercase

bucket_name = "liki-demo-bucket"

location = {'LocationConstraint': AWS_REGION}

bucket = resource.create_bucket(

    Bucket=bucket_name,

    CreateBucketConfiguration=location)

print("Amazon S3 bucket has been created")

Here’s an example output:

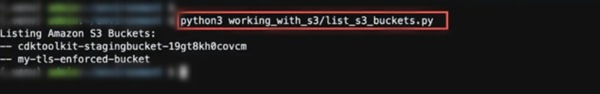
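One detail the example above depends on: for `us-east-1`, the `CreateBucketConfiguration` argument has to be left out entirely, because S3 rejects `us-east-1` as a location constraint. A minimal sketch of a helper that covers both cases follows. The function name `create_bucket_kwargs` is an assumption made for illustration, not a Boto3 API.

```python
def create_bucket_kwargs(bucket_name, region):
    """Build keyword arguments for create_bucket() for the given Region."""
    kwargs = {"Bucket": bucket_name}

    # us-east-1 is the default location and must not be passed as a
    # LocationConstraint; every other Region has to be named explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}

    return kwargs
```

The result can be unpacked straight into the call, for example `resource.create_bucket(**create_bucket_kwargs(bucket_name, AWS_REGION))`.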

Listing S3 Buckets using Boto3 resource:

There are two ways of listing all of your Amazon S3 buckets:

- list_buckets() method of the S3 client

- all() method of the buckets collection of the S3 resource

import boto3

AWS_REGION = "us-east-2"

resource = boto3.resource("s3", region_name=AWS_REGION)

iterator = resource.buckets.all()

print("Listing Amazon S3 Buckets:")

for bucket in iterator:

    print(f"--{bucket.name}")

Here’s an example output:

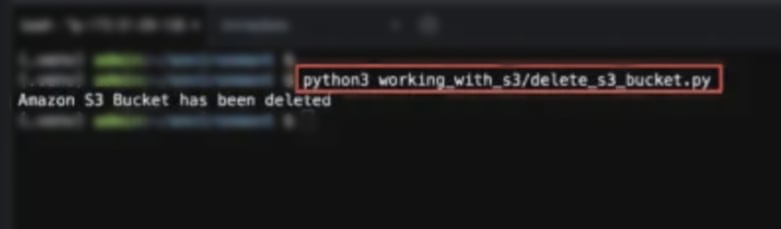
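For comparison, the client-based route returns a dictionary rather than resource objects. The sketch below assumes `s3_client` was created with `boto3.client("s3")`. The helper name `bucket_names_via_client` is hypothetical.

```python
def bucket_names_via_client(s3_client):
    """Return bucket names using the low-level list_buckets() call."""
    response = s3_client.list_buckets()

    # The "Buckets" entry is a list of dictionaries, one per bucket,
    # each carrying at least "Name" and "CreationDate".
    return [bucket["Name"] for bucket in response.get("Buckets", [])]
```

The resource version hands you `Bucket` objects with attributes such as `name`, while the client version leaves it to you to pick fields out of the response.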

Deleting S3 Buckets using Boto3 resource:

There are two possible ways of deleting an Amazon S3 bucket using the Boto3 library:

- delete_bucket() method of the S3 client

- delete() method of the S3 Bucket resource

import boto3

AWS_REGION = "us-east-2"

resource = boto3.resource("s3", region_name=AWS_REGION)

bucket_name = "liki-demo-bucket"

s3_bucket = resource.Bucket(bucket_name)

s3_bucket.delete()

print("Amazon S3 Bucket has been deleted")

Here’s an example output:

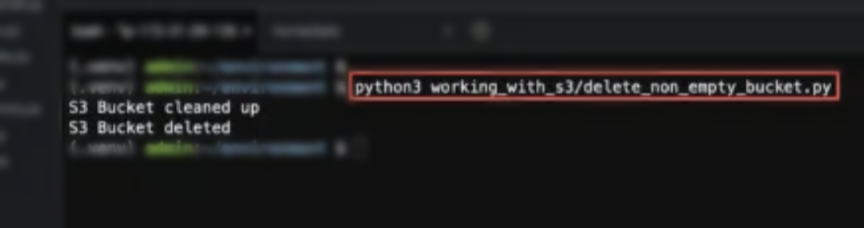
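The first option, `delete_bucket()` on the client, could look roughly like the sketch below. It assumes `s3_client` comes from `boto3.client("s3")` and that the bucket is already empty. The helper name `delete_bucket_via_client` is made up for this example. The waiter step is optional and is included only to show how to block until S3 stops reporting the bucket.

```python
def delete_bucket_via_client(s3_client, bucket_name):
    """Delete an empty bucket with the client API and wait until it is gone."""
    s3_client.delete_bucket(Bucket=bucket_name)

    # The built-in "bucket_not_exists" waiter polls until S3 no longer
    # reports the bucket, and raises an error if it runs out of attempts.
    waiter = s3_client.get_waiter("bucket_not_exists")
    waiter.wait(Bucket=bucket_name)
```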

Deleting non-empty S3 Bucket using Boto3:

To delete an S3 bucket using the Boto3 library, you must first empty it. Otherwise, the delete call fails with a BucketNotEmpty error for the specified bucket. Emptying the bucket means deleting all of its objects and, if versioning is enabled on the bucket, all object versions as well:

import boto3

AWS_REGION = "us-east-2"

S3_BUCKET_NAME = "liki-demo-bucket"

s3_resource = boto3.resource("s3", region_name=AWS_REGION)

s3_bucket = s3_resource.Bucket(S3_BUCKET_NAME)

def cleanup_s3_bucket():

    # Delete current objects

    for s3_object in s3_bucket.objects.all():

        s3_object.delete()

    # Delete object versions if S3 versioning is enabled

    for s3_object_ver in s3_bucket.object_versions.all():

        s3_object_ver.delete()

    print("S3 Bucket cleaned up")

cleanup_s3_bucket()

s3_bucket.delete()

print("S3 Bucket deleted")

Here’s an example output:

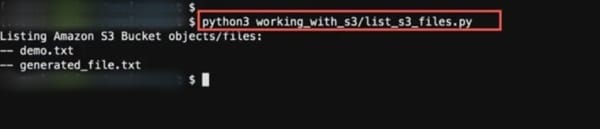
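Deleting objects one at a time issues one request per object, which can be slow for large buckets. As a possible alternative, Boto3 collections also offer a batch `delete()` action. The sketch below assumes `s3_bucket` is a `Bucket` resource like the one in the example above. The function name `empty_and_delete_bucket` is hypothetical.

```python
def empty_and_delete_bucket(s3_bucket):
    """Remove every object (and object version) from a bucket, then delete it."""
    # Collection-level delete() groups keys into batched delete requests
    # instead of sending one request per object.
    s3_bucket.objects.all().delete()

    # On a versioned bucket this removes older versions and delete markers.
    s3_bucket.object_versions.all().delete()

    # The bucket is empty now, so the final delete can succeed.
    s3_bucket.delete()
```

This operation is destructive and cannot be undone, so it is worth double-checking the bucket name before calling it.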

Listing files from S3 Bucket:

The most convenient way to list the files in an S3 bucket, along with their key names, using Boto3 is the objects.all() method of the Bucket resource:

import boto3

AWS_REGION = "us-east-2"

S3_BUCKET_NAME = "liki-demo-bucket"

s3_resource = boto3.resource("s3", region_name=AWS_REGION)

s3_bucket = s3_resource.Bucket(S3_BUCKET_NAME)

print('Listing Amazon S3 Bucket objects/files:')

for obj in s3_bucket.objects.all():

    print(f'--{obj.key}')

Here’s an example output: