This is step 5 of 6 of Hands-On Project 2 for Amazon Web Services. In this step, you first install client software on your cloud PC that is needed to access AWS services from a Python program. Then, you create one simple Python program which accesses your cloud storage using the Python SDK.

Note that once you have completed this basic exercise, the use of the Python SDK is completely open to you. That is, you can access pretty much any AWS resource, and even go as far as programmatically provisioning and deleting resources. This offers you the full power of the AWS Management Console from within a Python program that runs either on a cloud server or from a remote location.
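As a small taste of programmatic provisioning, here is a minimal sketch that creates an S3 bucket and then deletes it. It assumes boto3 is installed and your credentials are configured (both are covered later in this step). The function name is hypothetical, and the client is passed in as a parameter so the logic can be tried out with a stand-in object instead of a real AWS connection.

```python
def create_then_delete_bucket(s3_client, bucket_name, region):
    """Create an S3 bucket in the given region, then delete it again.

    s3_client is expected to behave like boto3.client("s3"); passing it in
    (instead of creating it here) lets the function be tried with a stand-in.
    """
    if region == "us-east-1":
        # Requests for us-east-1 omit CreateBucketConfiguration entirely.
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    print(f"Created bucket {bucket_name} in {region}")

    # delete_bucket only succeeds on an empty bucket, which a new one is.
    s3_client.delete_bucket(Bucket=bucket_name)
    print(f"Deleted bucket {bucket_name}")
```

With boto3 installed and credentials configured, a call might look like `create_then_delete_bucket(boto3.client("s3", region_name="us-east-2"), "your-globally-unique-name", "us-east-2")`. The bucket name is a placeholder; S3 bucket names must be unique across all AWS accounts.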

To access AWS resources from a Python program, you need 4 things:

- A Python development environment. You completed this in step 4 of this project.

- The AWS Command Line Interface (CLI).

- The Python Software Development Kit (SDK) for AWS.

- A Python program to do the work.

Since we already have Python and VS Code installed, let’s start with the AWS Command Line Interface.

Install the AWS Command Line Interface (CLI)

Under Ubuntu, the AWS Command Line Interface is available from the standard APT repositories. Install it in the usual fashion with the following commands. You can do this from within VS Code or not – it will work either way:

```shell
sudo apt update
sudo apt install awscli
```

Verify the installation with the following:

```shell
aws --version
```

If you get version information back, you are good to go.

The aws command is the entry point to the AWS CLI, and there are many subcommands, each dealing with a different type of resource. You will learn more of these subcommands as you work more with the CLI.

With the CLI installed and working, we can turn our attention to the Python SDK.

Create a Project and Install the Python SDK Package

For the AWS Python SDK, Amazon has made it as easy as possible for the user by putting the entire SDK into one Python package named boto3. So, you need to install only boto3 to get the whole Python SDK.

The boto3 API documentation starts here:

https://boto3.amazonaws.com/v1/documentation/api/latest/index.html

To set up for the programs below, create a new Python virtual environment and VS Code project, and install the packages we will be using:

```shell
cd ~
mkdir awspy
cd awspy
code .
```

Open a terminal window and install the boto3 package:

```shell
pipenv install boto3
```

After installing the SDK package, select the Python interpreter for your new virtual environment as you did in previous project steps.
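If you want to confirm that boto3 actually landed in the virtual environment VS Code is using, a short check like the one below can help. It is a sketch that uses only the standard library's `importlib.metadata`; the helper name `installed_version` is hypothetical. botocore is listed because boto3 is built on it and pipenv installs it alongside.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


for name in ("boto3", "botocore"):
    found = installed_version(name)
    print(f"{name}: {found if found else 'NOT installed in this environment'}")
```

If this reports boto3 as not installed even though `pipenv install` succeeded, the selected interpreter is most likely not the one belonging to your new virtual environment.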

Configure AWS CLI To Use Your Account Information

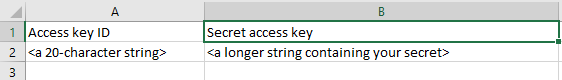

To authenticate your Python program, AWS obtains your account credentials from a file that you will configure on your computer’s disk. The file it uses contains your Access Key Id and your Secret Access Key. You should have downloaded these two things in a CSV file at the time you created your EC2 Instance. As a reminder, here is what the Access Key Id and Secret look like if you open the CSV file with Excel:

To proceed with this project, you will need to locate the CSV file and be ready to paste its pieces into a command window. (Maybe the CSV file is in your Downloads folder?)
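If you would rather not open the CSV file in Excel, a few lines of Python can pull out the two values. This is a sketch that assumes the file has header columns named `Access key ID` and `Secret access key` (older downloads contain extra columns such as `User name`, which `csv.DictReader` simply carries along). The helper name is hypothetical, and the sample uses AWS's published example keys, not real credentials.

```python
import csv
import io


def read_access_keys(csv_file):
    """Return (access_key_id, secret_access_key) from the first data row of an IAM key CSV."""
    row = next(csv.DictReader(csv_file))
    # Normalize header names: drop a leading byte-order mark and surrounding spaces.
    cleaned = {
        key.lstrip("\ufeff").strip(): (value or "").strip()
        for key, value in row.items()
        if key is not None
    }
    return cleaned["Access key ID"], cleaned["Secret access key"]


# AWS's published example keys, not real credentials.
sample_csv = io.StringIO(
    "Access key ID,Secret access key\n"
    "AKIAIOSFODNN7EXAMPLE,wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY\n"
)
key_id, secret = read_access_keys(sample_csv)
print("Access Key ID:", key_id)
print("Secret Access Key (first 4 characters):", secret[:4] + "...")
```

For your real file, open it with `open("your-keys.csv", newline="", encoding="utf-8-sig")` (the file name is a placeholder) and pass the file object to `read_access_keys`.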

Armed with the above information, configure your cloud PC for AWS CLI access as below. From a terminal window (inside or outside of VS Code):

```shell
aws configure
```

At the prompts, paste in 1) your Access Key Id, and 2) your Secret access key, then enter 3) a default region and 4) a default output format for your AWS CLI command outputs.

The default region is the region name that will be used for commands that need a region when you don’t specify a region in the command. As an example, if most of your resources are in Ohio, enter us-east-2 for your default region. You can get a list of region names in the upper right corner of your Management Console page.

For default output format there are several choices, but please choose json.

When the aws configure command is complete, your settings are stored in two files in your home directory on the VM: ~/.aws/credentials holds your Access Key Id and Secret Access Key, and ~/.aws/config holds your default region and output format. Note that your credentials are stored in plain text, so it is important to protect the computer that holds them. In our case, we are storing the credentials on a cloud PC, so someone would need SSH or RDP access to your server in order to steal your account keys.
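Both files are simple INI-style text files. The sketch below parses sample contents in that layout with the standard `configparser` module and reports what is stored for a profile without printing the secret. The helper name and the sample values are illustrative (the keys are AWS's published examples). One detail worth knowing: in ~/.aws/config, named profiles use a `[profile NAME]` section header, while the default profile is just `[default]`.

```python
import configparser

# Sample file contents in the layout `aws configure` writes (example values only).
CREDENTIALS_TEXT = """\
[default]
aws_access_key_id = AKIAIOSFODNN7EXAMPLE
aws_secret_access_key = wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY
"""

CONFIG_TEXT = """\
[default]
region = us-east-2
output = json
"""


def summarize_profile(credentials_text, config_text, profile="default"):
    """Report what is stored for a profile without revealing the secret key."""
    creds = configparser.ConfigParser(interpolation=None)
    creds.read_string(credentials_text)
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(config_text)
    # In ~/.aws/config, named profiles use "[profile NAME]"; the default stays "[default]".
    config_section = profile if profile == "default" else f"profile {profile}"
    settings = config[config_section] if config.has_section(config_section) else {}
    return {
        "aws_access_key_id": creds[profile]["aws_access_key_id"],
        "has_secret": "aws_secret_access_key" in creds[profile],
        "region": settings.get("region"),
        "output": settings.get("output"),
    }


print(summarize_profile(CREDENTIALS_TEXT, CONFIG_TEXT))
```

To look at your real files instead, pass in `(Path.home() / ".aws" / "credentials").read_text()` and the equivalent for `config`, after importing `Path` from `pathlib`.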

Example S3 Bucket

For the following example, I have two S3 buckets named cloudblog.mdenzien.com and msd-demo. To get ready for the exercise, make sure you have at least one S3 bucket in your AWS account. You will need to substitute your own names into the code shown below.

Write a Python Program to Access Your S3 Buckets

Create and save a Python program as follows:

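```python
import boto3


def list_buckets():
    # Create a low-level S3 client; credentials come from aws configure.
    s3_client = boto3.client('s3')
    response = s3_client.list_buckets()
    print("These are buckets accessible by your credentials")
    # Each entry in response["Buckets"] describes one bucket.
    for bucket in response["Buckets"]:
        print(f' {bucket["Name"]}')


list_buckets()
```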

The program defines one function that obtains an S3 client object, uses the client to list the buckets that this user has access to, and prints the bucket names.
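As an optional variation, saved as a separate file, the sketch below splits the AWS call from the formatting. That way the name-extraction logic can be checked against a hand-built dictionary shaped like a `list_buckets()` response, without contacting AWS. The function `bucket_names` and the sample bucket names and dates are hypothetical; only the `Buckets`, `Name`, and `CreationDate` fields come from the program above.

```python
from datetime import datetime, timezone


def bucket_names(response):
    """Pull the bucket names out of a list_buckets() response dictionary."""
    return [bucket["Name"] for bucket in response.get("Buckets", [])]


def list_buckets(s3_client):
    """Ask S3 for the buckets through the given client and print their names."""
    response = s3_client.list_buckets()
    print("These are buckets accessible by your credentials")
    for name in bucket_names(response):
        print(f" {name}")


# Hand-built stand-in shaped like a real response (hypothetical names and dates).
fake_response = {
    "Buckets": [
        {"Name": "example-bucket-one", "CreationDate": datetime(2023, 1, 15, tzinfo=timezone.utc)},
        {"Name": "example-bucket-two", "CreationDate": datetime(2024, 6, 1, tzinfo=timezone.utc)},
    ]
}
print(bucket_names(fake_response))
```

Against real AWS, you would call `list_buckets(boto3.client("s3"))` after importing boto3.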

There are a couple of things to notice about this program:

- There is no code in the program related to authentication. All of the information needed to authenticate is provided outside of your program. In this case, the AWS SDK is reading the account credentials that you entered with the aws configure command, and the buckets that are listed are the ones that account has access to.

- Python is very good at hiding implementation details from sight so that the code can be more succinct. Looking at this code, response and its pieces appear to be accessed as if response were a dictionary: response["Buckets"] is looped over like a list, and each bucket inside it is indexed like another dictionary.
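To make the first point more concrete, here is a separate, optional script: a deliberately simplified model of where the SDK looks for credentials when your code supplies none. It checks only two places, the `AWS_ACCESS_KEY_ID`/`AWS_SECRET_ACCESS_KEY` environment variables and then the shared credentials file (using the profile named by `AWS_PROFILE`, or `default`). The real boto3 search covers more sources, such as credentials passed in code and EC2 instance metadata, so treat the hypothetical `likely_credential_source` helper as an illustration only.

```python
import configparser
import os
from pathlib import Path


def likely_credential_source(env=None, credentials_path=None):
    """Roughly imitate the first steps of the SDK's credential search.

    Simplified on purpose: boto3 checks more places (for example,
    credentials passed in code, ~/.aws/config, and EC2 instance metadata).
    """
    env = os.environ if env is None else env
    # Step 1: access keys in environment variables.
    if env.get("AWS_ACCESS_KEY_ID") and env.get("AWS_SECRET_ACCESS_KEY"):
        return "environment variables"
    # Step 2: the shared credentials file written by `aws configure`.
    path = Path(credentials_path) if credentials_path else Path.home() / ".aws" / "credentials"
    profile = env.get("AWS_PROFILE", "default")
    parser = configparser.ConfigParser(interpolation=None)
    if parser.read(path) and parser.has_option(profile, "aws_access_key_id"):
        return f"shared credentials file {path}, profile [{profile}]"
    return "not found in the places this sketch checks"


print(likely_credential_source())
```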

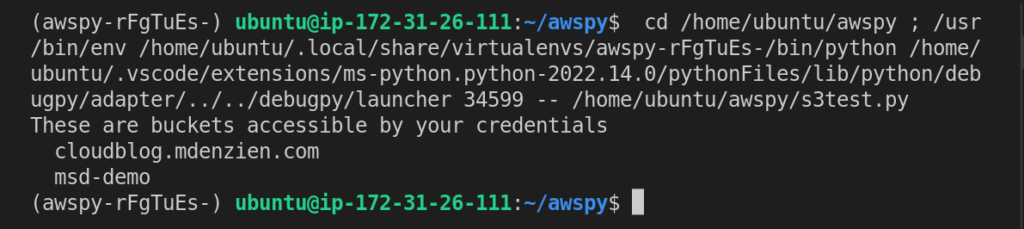

Press F5 or select Run | Start Debugging to run the program. The output will look like this:

Let’s test our theory about response being a dictionary by printing it out after we get our response. Add a print statement to the program like this, then rerun the program:

```python
import boto3


def list_buckets():
    s3_client = boto3.client('s3')
    response = s3_client.list_buckets()
    # New line: print the whole response to see how it is structured.
    print(response)
    print("These are buckets accessible by your credentials")
    for bucket in response["Buckets"]:
        print(f' {bucket["Name"]}')


list_buckets()
```

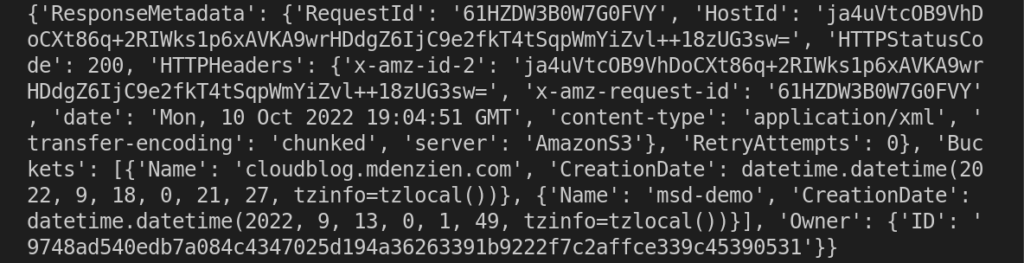

The output is quite messy again. Here is the middle of the output, which contains the output of the print statement:

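This looks a lot like JSON, but it is actually the printed form of a Python dictionary: strings appear in single quotes, and each CreationDate is a datetime.datetime object rather than a string. Buried in the middle of the output is the key Buckets, whose value is a list holding one dictionary per bucket, each with the Name and CreationDate of one of the two buckets my account has access to. Other keys, such as ResponseMetadata, surround it.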
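Because the printed response is a Python dictionary that can contain `datetime` objects, it is not JSON text, and `json.dumps` refuses it unless told how to convert those values. The sketch below shows two tidier ways to print it: `pprint.pformat`, which keeps Python syntax but spreads the structure over several lines, and `json.dumps(..., default=str)`, which turns the dates into strings. The sample dictionary is hand-built with hypothetical values; in your program you would pass the real `response`.

```python
import json
from datetime import datetime, timezone
from pprint import pformat

# Hand-built stand-in for a list_buckets() response (hypothetical values).
response = {
    "Buckets": [
        {"Name": "example-bucket", "CreationDate": datetime(2023, 1, 15, 12, 0, tzinfo=timezone.utc)},
    ],
    "Owner": {"ID": "example-owner-id"},
}

# pprint keeps Python syntax but breaks the structure across lines.
print(pformat(response))

# Plain json.dumps cannot serialize datetime objects.
try:
    json.dumps(response)
except TypeError as err:
    print("json.dumps without default= fails:", err)

# default=str converts anything JSON does not understand (here, datetimes) to a string.
print(json.dumps(response, indent=2, default=str))
```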

Printing objects like this is a simple and effective way to see exactly what your program is working with. You can also print an object's type, for example print(type(response)), and use the type name to look up that object in the SDK documentation.
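For nested responses, printing one type at a time gets tedious. The sketch below walks a dictionary recursively and lists the type found at each key, describing only the first element of each list on the assumption that list items share one shape. The helper name `type_outline` and the sample data are hypothetical. For the client itself, `print(type(s3_client))` should show a class defined in botocore, the lower-level library boto3 is built on.

```python
from datetime import datetime, timezone


def type_outline(value, indent=0):
    """Return lines naming the type of value and, recursively, of what it contains."""
    pad = "  " * indent
    if isinstance(value, dict):
        lines = [f"{pad}dict"]
        for key, item in value.items():
            lines.append(f"{pad}  [{key!r}]")
            lines.extend(type_outline(item, indent + 2))
        return lines
    if isinstance(value, list):
        lines = [f"{pad}list with {len(value)} item(s)"]
        if value:
            # Only the first element is described; list items usually share a shape.
            lines.extend(type_outline(value[0], indent + 1))
        return lines
    return [f"{pad}{type(value).__name__}"]


sample = {"Buckets": [{"Name": "example-bucket", "CreationDate": datetime(2023, 1, 15, tzinfo=timezone.utc)}]}
print("\n".join(type_outline(sample)))
```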