Serverless functions let you deploy code without provisioning or managing the infrastructure that hosts it. AWS Lambda is a FaaS (Function as a Service) platform for building serverless functions. It supports many popular programming languages, including Go, Java, Ruby, and Python. In this blog, we will be using Python 3. Even though these functions are called “serverless”, they still run inside runtime environments on cloud server instances.

Requirements:

- You must have an Amazon Web Services account.

Using Lambda with AWS S3 Buckets:

Suppose you are receiving XML data for the four seasons of the year straight into an AWS S3 bucket. You want to sort the XML files into four separate folders based on which season the data comes from. The only way to tell which season a file belongs to is to look inside it. The files look like this:

    <data>
        <data-source> Summer </data-source>
        <data-content>June, July, August</data-content>
    </data>

How would you automate this process? In this situation, AWS Lambda would be useful. Let’s look at how to do this.
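Before touching AWS, the core of the sorting decision can be tried locally. The following is a minimal sketch, assuming the season is always stored in a `<data-source>` element as in the sample above; the function name `season_of` is made up for illustration.

```python
import xml.etree.ElementTree as ET

# Same content as the sample file shown above
SAMPLE_XML = """<data>
<data-source> Summer </data-source>
<data-content>June, July, August</data-content>
</data>"""


def season_of(xml_text):
    """Return the season named in <data-source>, without surrounding spaces."""
    root = ET.fromstring(xml_text)
    source = root.find("data-source")
    if source is None or source.text is None:
        raise ValueError("XML file has no <data-source> element")
    return source.text.strip()


print(season_of(SAMPLE_XML))  # Summer
```

Note that the sample pads the season with spaces, so stripping the text matters if the value is later used as a folder name.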

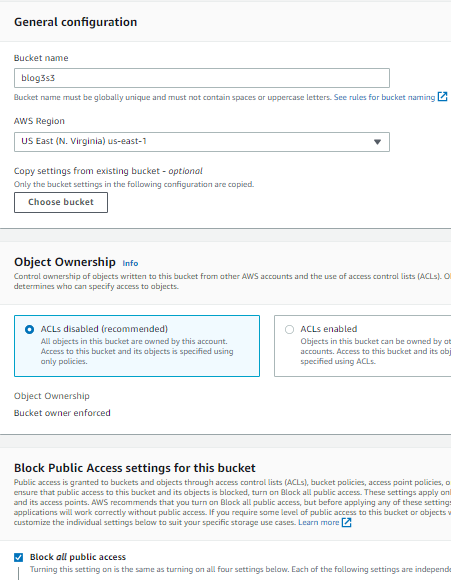

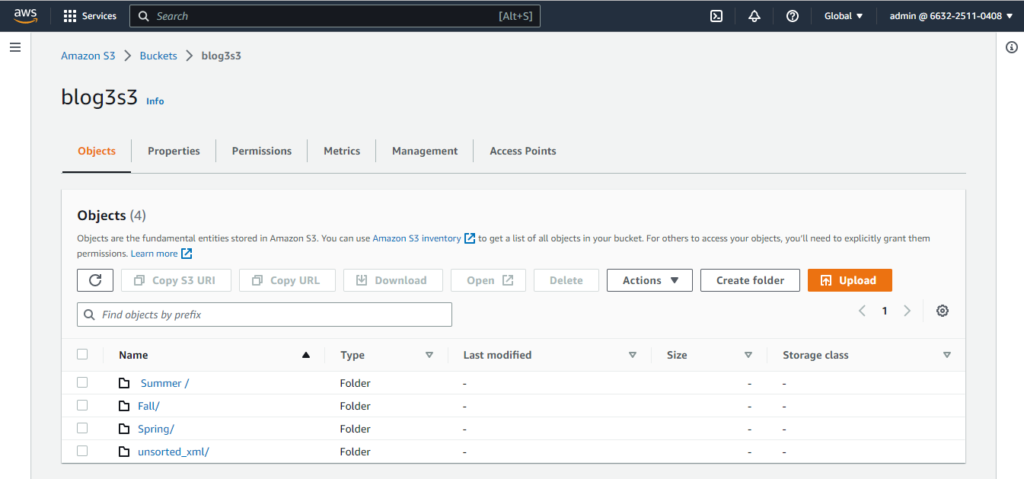

1.Creating an S3 bucket:

Create an S3 bucket. Go to the S3 page from your AWS console and click on the “Create bucket” button. Make sure you leave the “Block all public access” checkbox ticked and click on “Create bucket”.

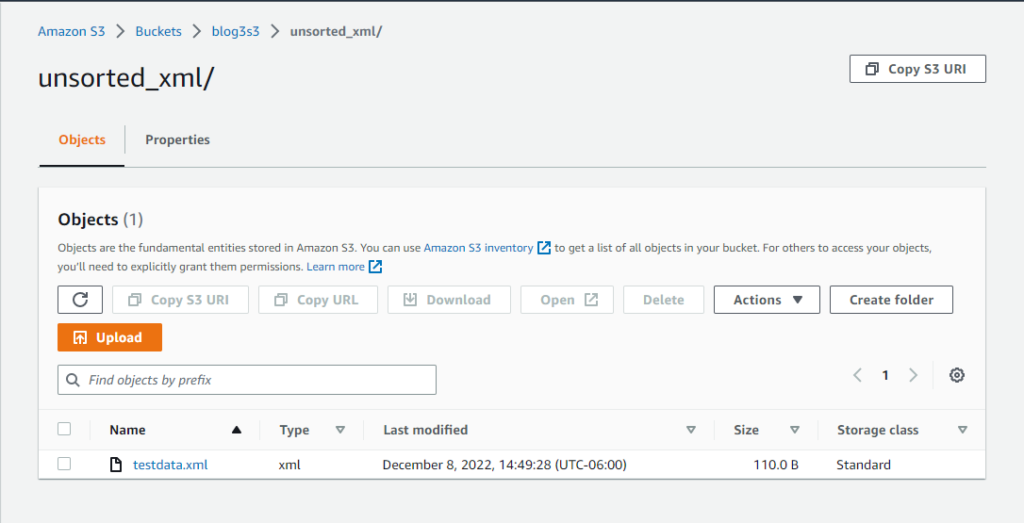

Now, add a folder called “unsorted” where all the XML files will be stored initially. Create a .xml file named “testdata.xml” and upload it to the unsorted folder:

    <data>
        <data-source> Summer </data-source>
        <data-content>June, July, August</data-content>
    </data>
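If you prefer to script this step instead of using the console, something like the following could work. It is only a sketch: it assumes boto3 is installed and AWS credentials are configured, and the bucket name in the last line is a placeholder, not a name from this article.

```python
import xml.etree.ElementTree as ET


def build_test_xml(season, content):
    """Build an XML document in the same shape as the sample file."""
    root = ET.Element("data")
    ET.SubElement(root, "data-source").text = season
    ET.SubElement(root, "data-content").text = content
    return ET.tostring(root, encoding="unicode")


def upload_test_file(bucket_name, key="unsorted/testdata.xml"):
    """Upload the test file to S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported here so build_test_xml works without boto3

    body = build_test_xml("Summer", "June, July, August")
    s3 = boto3.client("s3")
    s3.put_object(Bucket=bucket_name, Key=key, Body=body.encode("utf-8"))


# upload_test_file("my-example-bucket")  # placeholder bucket name
```

S3 has no real folders; uploading an object whose key starts with `unsorted/` is enough for the console to show an “unsorted” folder.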

2.Create a Lambda function:

From the Services tab on the AWS console, click on “Lambda”. From the left pane on the Lambda page, select “Functions” and then click “Create function”.

- Select “Author from scratch” and give the function a suitable name.

- Choose Python 3.8 as the runtime.

- Click on Create function. From the list of Lambda functions on the “Functions” page, select the function you just created, and you will be taken to the function’s page.

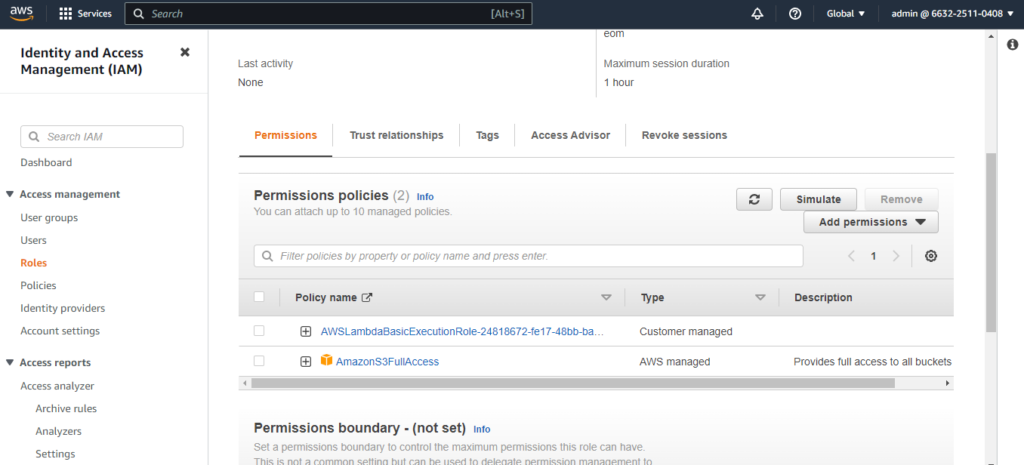

Lambda automatically creates an IAM role for you to use with the Lambda function. You can find the IAM role under the permissions tab on the function’s page. Add permissions for the AWS services that your function needs to access or manage. Here I have added S3 permissions to the IAM role:
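The article does not list the exact permissions that were added. As an illustration only, a narrowly scoped policy for this use case might allow reading, writing, and deleting objects in the one bucket. The helper name `s3_sort_policy` and the bucket name below are assumptions.

```python
import json


def s3_sort_policy(bucket_name):
    """Build an IAM policy document limited to objects in one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Download, re-upload, and delete are the only S3 actions
                # the sorting function performs.
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": "arn:aws:s3:::{}/*".format(bucket_name),
            }
        ],
    }


print(json.dumps(s3_sort_policy("my-example-bucket"), indent=2))
```

The printed JSON can be pasted into the IAM policy editor. Attaching a broad managed policy such as full S3 access also works, but grants more than the function needs.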

3.Adding a trigger for our Lambda function:

We want the Lambda function to be invoked every time an XML file is uploaded to the “unsorted” folder. To do this, we will use an S3 bucket PUT event as a trigger for our function.

On the Lambda function’s page, click on the “Add trigger” button.

- Select the “S3” trigger and the bucket you just created. Select “PUT” event type. Set the prefix and suffix as “unsorted/” and “.xml” respectively. Finally, click on “Add”.
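The console settings above correspond to an S3 bucket notification configuration. The sketch below builds the equivalent structure and shows which keys the prefix and suffix filter would match. The function names and the ARN argument are placeholders; applying the configuration requires boto3 and credentials.

```python
def build_notification_config(function_arn, prefix="unsorted/", suffix=".xml"):
    """Build the notification settings that mirror the console trigger."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:Put"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


def would_trigger(key, prefix="unsorted/", suffix=".xml"):
    """Return True if an uploaded key matches the trigger's filter."""
    return key.startswith(prefix) and key.endswith(suffix)


def apply_config(bucket_name, function_arn):
    """Attach the trigger to the bucket (needs boto3 and AWS credentials)."""
    import boto3

    boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=build_notification_config(function_arn),
    )


print(would_trigger("unsorted/testdata.xml"))  # True
print(would_trigger("Summer/testdata.xml"))    # False
```

The prefix filter matters here because the function writes its output back to the same bucket. Sorted files land outside “unsorted/”, so they do not invoke the function again. When the trigger is added through the console, the permission that lets S3 invoke the function is added for you; with the API you would have to grant it separately.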

4.Adding code to our Lambda function:

There are 3 ways you can add code to your Lambda function:

- Through the code editor available on the console.

- By uploading a .zip file containing all your code and dependencies.

- By uploading code from an S3 bucket.
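For the second option, the .zip file can also be built in code. The sketch below assumes the handler lives in a file named `lambda_function.py` (the console default for Python); the function names are illustrative, and the deploy step needs boto3 and credentials.

```python
import io
import zipfile


def build_deployment_zip(source_code, filename="lambda_function.py"):
    """Return the bytes of a .zip archive containing one source file."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        info = zipfile.ZipInfo(filename)
        # Make the file world-readable so the Lambda runtime can import it.
        info.external_attr = 0o644 << 16
        info.compress_type = zipfile.ZIP_DEFLATED
        archive.writestr(info, source_code)
    return buffer.getvalue()


def deploy(function_name, zip_bytes):
    """Upload the archive as the function's code (needs boto3)."""
    import boto3

    boto3.client("lambda").update_function_code(
        FunctionName=function_name, ZipFile=zip_bytes
    )


package = build_deployment_zip("def lambda_handler(event, context):\n    return 'ok'\n")
print(len(package) > 0)  # True
```

Third-party dependencies would need to be added to the same archive alongside the handler file.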

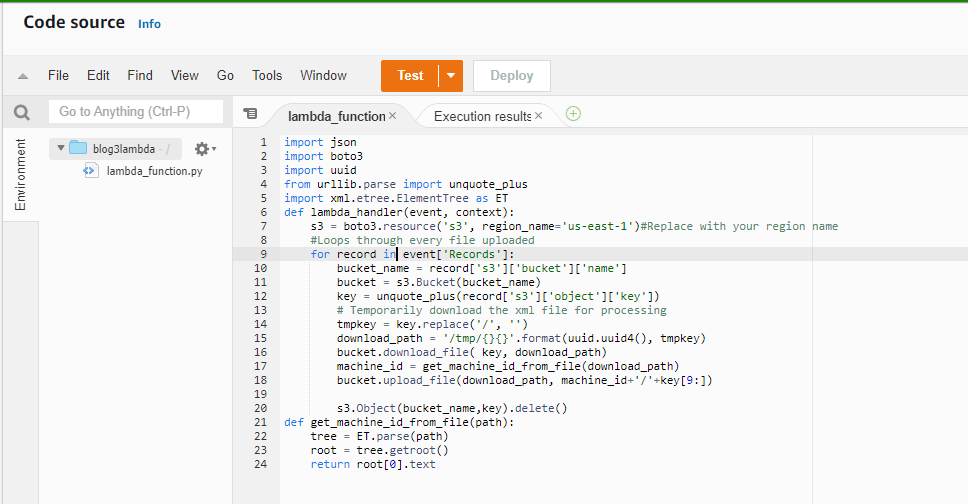

Here I’m following the first way. On the function page, go down to the “code” section to find the code editor. Copy and paste the following code:

    import uuid
    import xml.etree.ElementTree as ET
    from urllib.parse import unquote_plus

    import boto3

    SOURCE_PREFIX = 'unsorted/'  # Folder that the S3 trigger watches


    def lambda_handler(event, context):
        s3 = boto3.resource('s3', region_name='')  # Replace with your region name
        for record in event['Records']:
            bucket_name = record['s3']['bucket']['name']
            bucket = s3.Bucket(bucket_name)
            # Object keys arrive URL-encoded in S3 events, so decode them first
            key = unquote_plus(record['s3']['object']['key'])

            # Temporarily download the XML file for processing
            tmpkey = key.replace('/', '')
            download_path = '/tmp/{}{}'.format(uuid.uuid4(), tmpkey)
            bucket.download_file(key, download_path)

            machine_id = get_machine_id_from_file(download_path)

            # Re-upload under a folder named after machine_id, then remove the original
            filename = key[len(SOURCE_PREFIX):]
            bucket.upload_file(download_path, machine_id + '/' + filename)
            s3.Object(bucket_name, key).delete()


    def get_machine_id_from_file(path):
        tree = ET.parse(path)
        root = tree.getroot()
        # The first child element is <data-source>; strip the padding around its text
        return root[0].text.strip()

Don’t forget to replace the region name.

The code above is simple to understand. It does the following:

- Get the bucket name and object key from the event object.

- Download the XML file that caused the Lambda function to be invoked.

- Process the XML file to read the machine_id (the season) from its first child element, `<data-source>`.

- Upload the file back to the S3 bucket, but inside a folder named after the value of machine_id.

- Delete the original file.
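The step that decides where the file goes can be checked without AWS. The sketch below is one way to compute the destination key; `destination_key` is an illustrative helper and not part of the function above. It decodes the URL-encoded key from the event, drops the “unsorted/” prefix, and strips the whitespace that pads the season in the sample file.

```python
from urllib.parse import unquote_plus

SOURCE_PREFIX = "unsorted/"  # folder watched by the trigger


def destination_key(raw_key, season):
    """Map an event key such as 'unsorted/a.xml' to '<season>/a.xml'."""
    key = unquote_plus(raw_key)  # S3 events encode spaces as '+'
    if not key.startswith(SOURCE_PREFIX):
        raise ValueError("unexpected key outside {}: {}".format(SOURCE_PREFIX, key))
    filename = key[len(SOURCE_PREFIX):]
    return "{}/{}".format(season.strip(), filename)


print(destination_key("unsorted/testdata.xml", " Summer "))   # Summer/testdata.xml
print(destination_key("unsorted/test+data.xml", " Summer "))  # Summer/test data.xml
```

Using the length of the prefix instead of a hard-coded index keeps the slice correct if the folder is ever renamed.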

Now press the “Deploy” button and our function should be ready to run.

5.Testing the Lambda function:

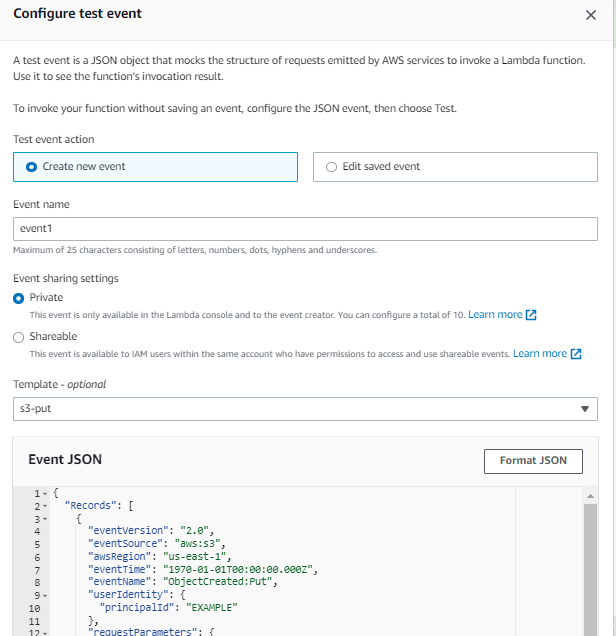

AWS makes it fairly simple to test Lambda functions from the Lambda console. Using the Test feature, you can simulate an invocation of your function regardless of the trigger it uses. All you need to do is specify the event object that will be passed to the function. To help with this, Lambda provides JSON templates for each type of trigger. The template for an S3 PUT event, for instance, looks like this:

    {
      "Records": [
        {
          "eventVersion": "2.0",
          "eventSource": "aws:s3",
          "awsRegion": "us-east-1",
          "eventTime": "1970-01-01T00:00:00.000Z",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "EXAMPLE"
          },
          "requestParameters": {
            "sourceIPAddress": "127.0.0.1"
          },
          "responseElements": {
            "x-amz-request-id": "EXAMPLE123456789",
            "x-amz-id-2": "EXAMPLE123/5678abcdefghijklambdaisawesome/mnopqrstuvwxyzABCDEFGH"
          },
          "s3": {
            "s3SchemaVersion": "1.0",
            "configurationId": "testConfigRule",
            "bucket": {
              "name": "blog3s3",
              "ownerIdentity": {
                "principalId": "EXAMPLE"
              },
              "arn": "arn:aws:s3:::blog3s3"
            },
            "object": {
              "key": "unsorted/testdata.xml",
              "size": 110.0,
              "eTag": "7a0961300488898142f8ff09d0cd473f",
              "sequencer": "0A1B2C3D4E5F678901"
            }
          }
        }
      ]
    }
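Only two fields of this event are read by the function: the bucket name and the object key. The sketch below builds a stripped-down event with just those fields and shows how they are read back. Both function names are illustrative, and real S3 events carry many more fields.

```python
from urllib.parse import quote_plus, unquote_plus


def make_s3_put_event(bucket_name, key):
    """Build a minimal S3 PUT event with only the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket_name},
                    # Keys are URL-encoded in events; '/' is left readable here
                    "object": {"key": quote_plus(key, safe="/")},
                },
            }
        ]
    }


def extract_targets(event):
    """Return (bucket, decoded key) pairs the way the handler reads them."""
    return [
        (record["s3"]["bucket"]["name"], unquote_plus(record["s3"]["object"]["key"]))
        for record in event["Records"]
    ]


event = make_s3_put_event("blog3s3", "unsorted/testdata.xml")
print(extract_targets(event))  # [('blog3s3', 'unsorted/testdata.xml')]
```

A dictionary like this can be passed straight to `lambda_handler(event, None)` when experimenting, provided the bucket and object actually exist.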

To test the Lambda function just created, you need to configure a test event for your function. To do this, click on the “Select a test event” dropdown right above the Lambda code editor and click on “Configure test event”.

In the “Configure test event” dialog, make sure the “Create new test event” radio button is selected and select the “Amazon S3 Put” event template. The only values our Lambda function reads from the event are the bucket name and the object key, so edit those two to match the S3 bucket and the XML file you created earlier. Finally, give the test event a name and click on “Create”.

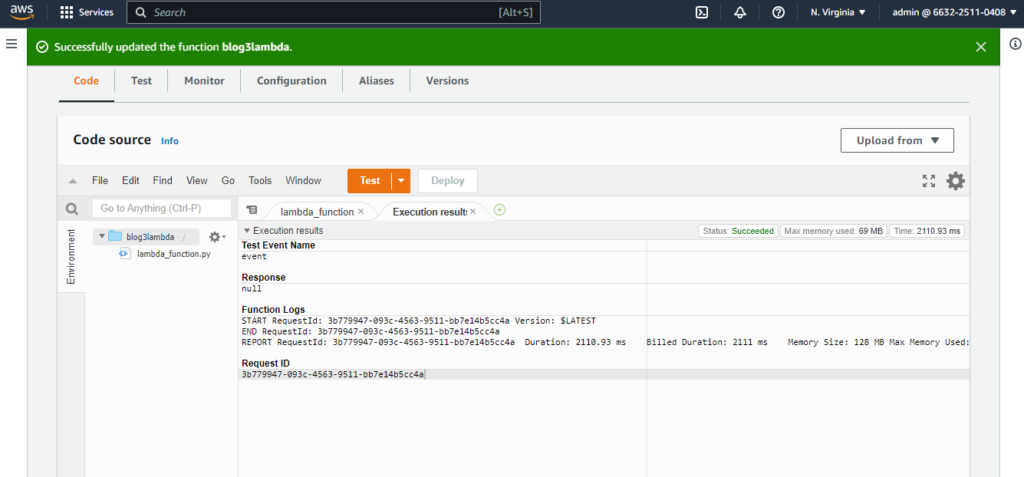

Now that you have a test event for your Lambda function, all you have to do is click on the “Test” button on top of the code editor. The console will tell you whether the function code executed without any errors.

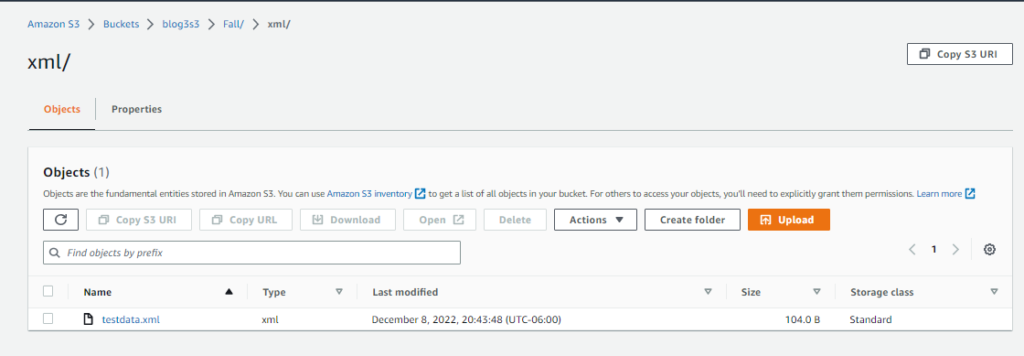

To check that everything worked, go to your S3 bucket and confirm that the XML file has been moved to an automatically created folder named after its season (“Summer/” for the test file above).

Now the Lambda function that we created can sort the files in the “unsorted” folder.
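The result can also be checked from a script instead of the console. This is a rough sketch, assuming boto3 and credentials are available; the helper names are made up, and the bucket name in the commented call is the one used in the test event.

```python
def keys_from_listing(response):
    """Extract object keys from a list_objects_v2 response dictionary."""
    # "Contents" is absent when no object matches the prefix
    return [obj["Key"] for obj in response.get("Contents", [])]


def list_sorted_files(bucket_name, season):
    """List the keys stored under one season folder (needs boto3)."""
    import boto3

    s3 = boto3.client("s3")
    response = s3.list_objects_v2(Bucket=bucket_name, Prefix=season + "/")
    return keys_from_listing(response)


# print(list_sorted_files("blog3s3", "Summer"))
```

If the sort worked, the listing for the season folder contains the test file and the same call with the prefix “unsorted” no longer does.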