In this post we will learn how to read data from a text file stored in an S3 bucket with the help of a Lambda function, and then store those values in a DynamoDB table. Before starting with the process, here is a short overview of S3 buckets, DynamoDB and Lambda functions.

1. S3 bucket -> It is a cloud storage resource offered by AWS. Each object stored in a bucket has 3 components: the metadata of the object, the content of the object and the unique identifier (key) of the object. We can store data in it for backups, disaster recovery, data archives, etc. We can interact with a bucket via the AWS Command Line Interface, the AWS Management Console and even APIs (Application Programming Interfaces).

2. DynamoDB -> It is a NoSQL database service offered by AWS for creating database tables that store and retrieve large amounts of data. It follows a key-value store structure and relieves customers of the responsibility of running and scaling a distributed database. As a result, it is an excellent choice for mobile, gaming, IoT, and other high-growth, high-volume applications.

3. Lambda function -> It is a compute service offered by AWS that allows us to run code without having to provision or manage servers. It executes our code only when necessary and automatically scales from a few requests per day to thousands per second. We can write our Lambda function in several languages like Python, Node.js, .NET, Go, Ruby and Java. Developers can use AWS Lambda by uploading the code or writing it directly in Lambda's code editor, and then specifying the conditions that trigger the code. A Lambda function is a piece of code that runs in the Lambda runtime environment. With this, an event can trigger our function without developers having to worry about managing the server or locating the appropriate application or resource. This also means that businesses do not pay for compute time when their code is not running.

Now we will start with our task of writing a lambda function that can read a text file and store its data in a DynamoDB table.

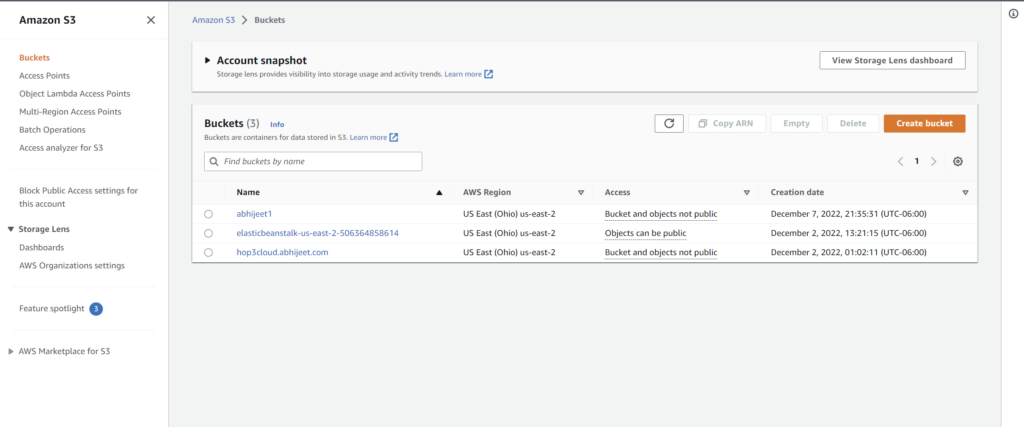

Step-1 -> First, create an S3 bucket and upload the data file that is attached at the end of this blog. To create an S3 bucket, go to S3 in the AWS Console, click Create bucket, enter your bucket name, select your AWS region and then create the bucket. After the bucket is created, open it and upload the text file.

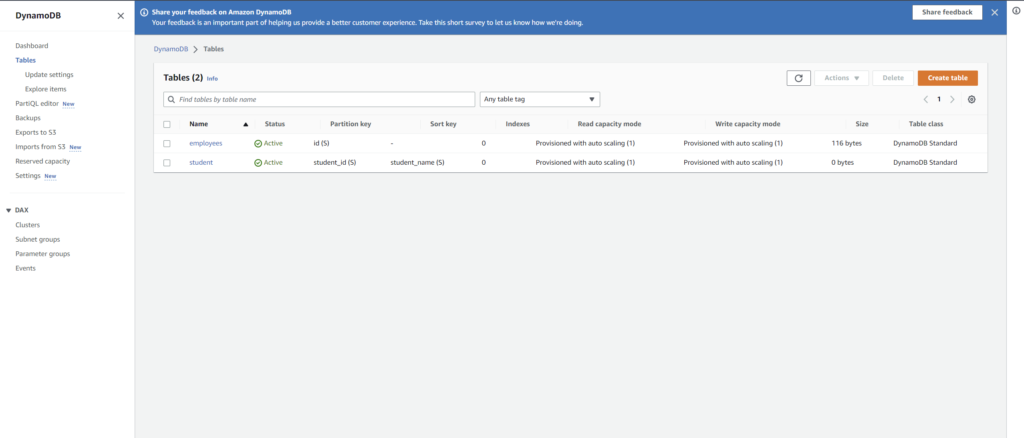

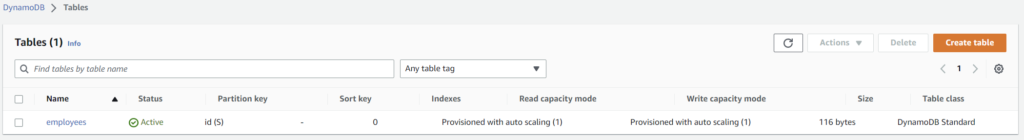
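The same two console actions can also be scripted. The following is only a minimal sketch, assuming boto3 is used; the function name `create_bucket_and_upload` and all argument values are hypothetical, and the S3 client is passed in as a parameter so the helper can be tried out without AWS credentials.

```python
def create_bucket_and_upload(s3_client, bucket_name, region, local_path, key):
    """Create a bucket in `region` and upload one local file to it.

    `s3_client` is expected to behave like boto3.client("s3").
    """
    if region == "us-east-1":
        # us-east-1 is the default region and is created without a LocationConstraint
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    # upload_file(Filename, Bucket, Key) copies the local file into the bucket
    s3_client.upload_file(local_path, bucket_name, key)
    return f"s3://{bucket_name}/{key}"
```

With real credentials this could be called as `create_bucket_and_upload(boto3.client("s3"), "my-employee-bucket", "ap-south-1", "employees.txt", "employees.txt")`, where the bucket and file names are placeholders.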

Step-2 -> Go to DynamoDB in the AWS console to create a new table. Enter “employees” as the table name and “id” as the partition key, then click Create table. Your DynamoDB console will look like this after the creation.

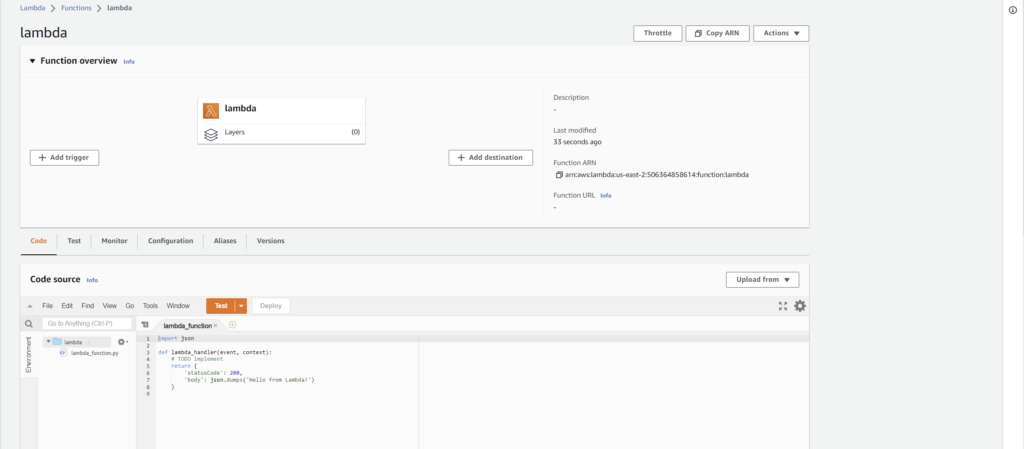

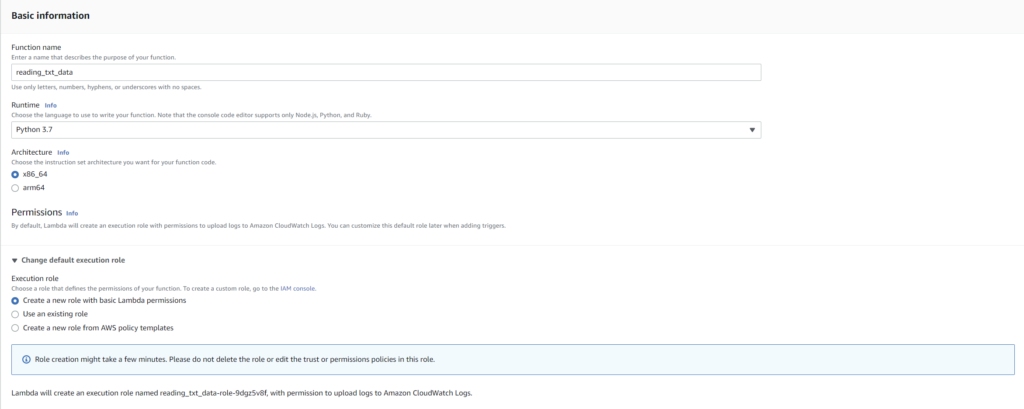
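For reference, the table created in the console above corresponds roughly to the following `create_table` arguments. This is a sketch under assumptions: the partition key is taken to be of type String (the Lambda code later stores `id` as text), and on-demand billing is chosen only for illustration. The helper name `employees_table_definition` is hypothetical.

```python
def employees_table_definition(table_name="employees", partition_key="id"):
    """Build keyword arguments for DynamoDB's create_table call."""
    return {
        "TableName": table_name,
        # HASH marks the partition key
        "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
        # "S" declares the key as a String attribute (assumption)
        "AttributeDefinitions": [
            {"AttributeName": partition_key, "AttributeType": "S"}
        ],
        # On-demand capacity, an assumption made for this sketch
        "BillingMode": "PAY_PER_REQUEST",
    }
```

With boto3 installed and credentials configured, the table could then be created with `boto3.client("dynamodb").create_table(**employees_table_definition())`.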

Step-3 -> Now we will create a Lambda function. Go back to the console and choose the “Lambda” service, then create a new function. Select “Author from scratch” and enter “reading_txt_data” as the function name. Select “Python 3.7” as the Runtime (or a newer Python 3 runtime if 3.7 is not offered). Click “Change default execution role” and select “Create a new role with basic Lambda permissions” under Execution role.

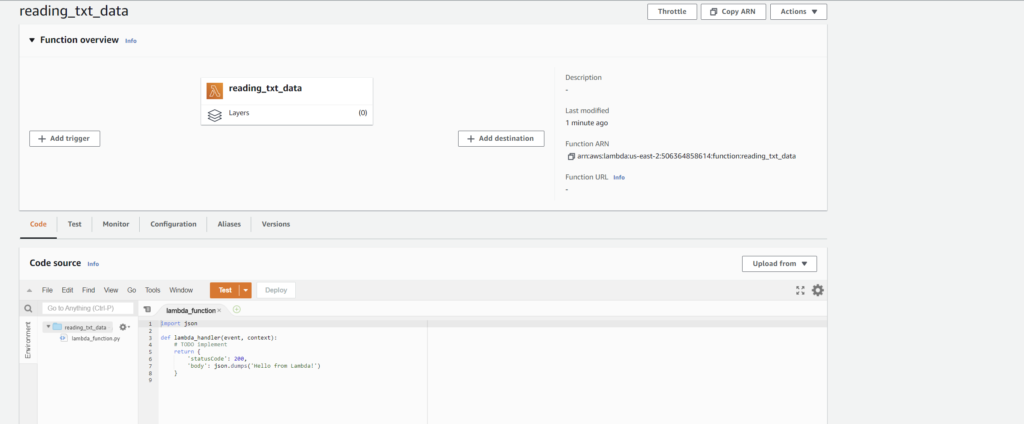

Finally, click Create function and your Lambda function will be created.

Step-4 -> Now, enter this code in your newly created lambda_function.py file.

    import boto3

    s3_client = boto3.client("s3")

    def lambda_handler(event, context):
        # The S3 event record tells us which bucket and object to read
        bucket_name = event['Records'][0]['s3']['bucket']['name']
        s3_file_name = event['Records'][0]['s3']['object']['key']

        # Download the object and decode its bytes into text
        resp = s3_client.get_object(Bucket=bucket_name, Key=s3_file_name)
        data = resp['Body'].read().decode("utf-8")
        print(data)

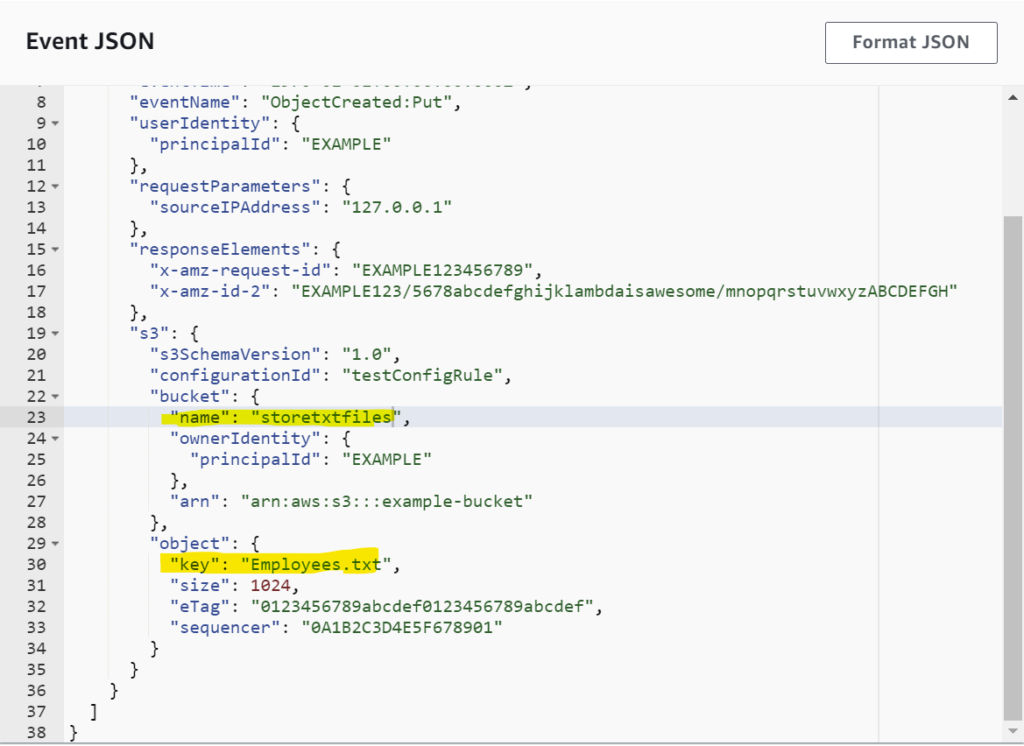

Now we will test this code. Click Test and configure a test event. Enter an event name (it can be anything) and select s3-put as the Template. Then modify the Event JSON so that it contains your bucket name and file name, and save it.
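Only two fields of the test event are actually read by the handler. The sketch below shows a trimmed-down version of such an event and how the bucket name and key are extracted from it; the helper names `sample_s3_put_event` and `bucket_and_key` are hypothetical, and the real s3-put template contains many more fields. It also decodes the key, because object keys in S3 event notifications are URL-encoded (a space arrives as `+`).

```python
from urllib.parse import unquote_plus

def sample_s3_put_event(bucket_name, object_key):
    """Return a minimal S3 put event with only the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket_name},
                    "object": {"key": object_key},
                },
            }
        ]
    }

def bucket_and_key(event):
    """Extract the bucket name and the URL-decoded object key from the first record."""
    record = event["Records"][0]["s3"]
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])
```

For example, `bucket_and_key(sample_s3_put_event("my-employee-bucket", "employees.txt"))` gives back the placeholder bucket name and `employees.txt` unchanged.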

Step-5 -> Before deploying the code, we need to grant S3 permissions so that our Lambda function can access our S3 bucket. For that, go to Configuration -> Permissions and click the role name. It will take you to a new screen; there, select Permissions -> Add permissions -> Create inline policy. You will get 3 options: select “S3” as the Service, “All S3 actions” as the Actions and “All resources” as the Resources. Click Review policy, enter your policy name and click Create policy. Now the function has permission to read and write the files stored in our S3 bucket.

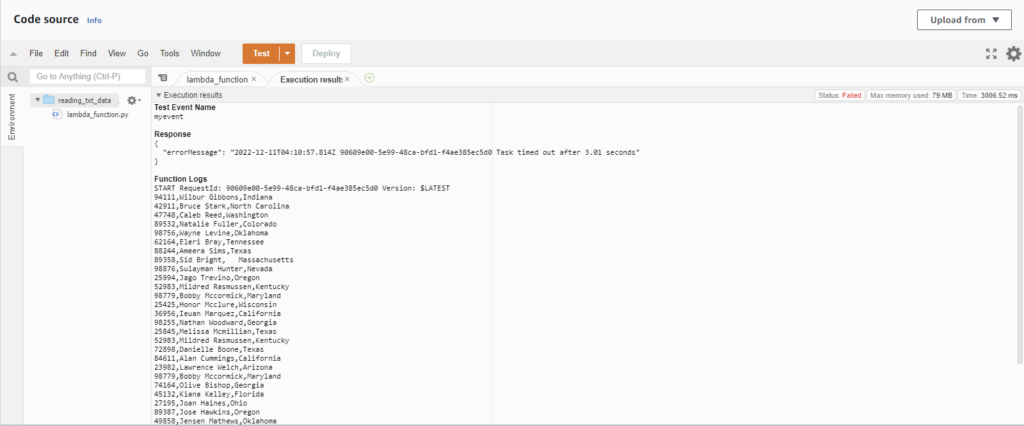
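“All S3 actions” on “All resources” is convenient for a demo but broader than this function needs. As a hedged alternative, the sketch below builds a narrower policy document that allows only reading objects from one bucket and, for the table write performed in Step 7, putting items into one table. The function name `least_privilege_policy`, the bucket name and the account id in the ARN are placeholders.

```python
import json

def least_privilege_policy(bucket_name, table_arn):
    """Build an IAM policy document limited to what the handler calls."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # get_object on any key inside the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                # put_item on the employees table
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": table_arn,
            },
        ],
    }

# Placeholder values; replace them with your own bucket and table ARN
policy = least_privilege_policy(
    "my-employee-bucket",
    "arn:aws:dynamodb:us-east-1:123456789012:table/employees",
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the JSON tab of the inline policy editor instead of picking the options in the visual editor.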

Step-6 -> Go back to your Lambda code and deploy it. Now test it: you will see the contents of your text file in Function Logs, and the Response will be null because the handler does not return anything. This means we are able to access the data from our text file stored in the S3 bucket.

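Step-7 -> Now we want this data to be stored in our DynamoDB table, “employees”. For that, we will update our code so that it reads the text file line by line, splits each line into its columns and stores every row in the table. The function's execution role also needs permission to write to the table (the dynamodb:PutItem action), which can be added with another inline policy in the same way as in Step-5.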

    import boto3

    s3_client = boto3.client("s3")
    dynamodb = boto3.resource('dynamodb')
    table = dynamodb.Table('employees')

    def lambda_handler(event, context):
        # The S3 event record tells us which bucket and object to read
        bucket_name = event['Records'][0]['s3']['bucket']['name']
        s3_file_name = event['Records'][0]['s3']['object']['key']

        resp = s3_client.get_object(Bucket=bucket_name, Key=s3_file_name)
        data = resp['Body'].read().decode("utf-8")

        # One employee per line, in the order id,name,location
        employees = data.split("\n")
        for emp in employees:
            print(emp)
            emp_data = emp.split(",")
            # Add it to dynamodb
            try:
                table.put_item(
                    Item = {
                        "id": emp_data[0],
                        "name": emp_data[1],
                        "location": emp_data[2]
                    }
                )
            except Exception as e:
                # A blank or incomplete line (such as the last one) ends up here;
                # printing the exception also exposes other failures in the logs
                print("End of file", e)

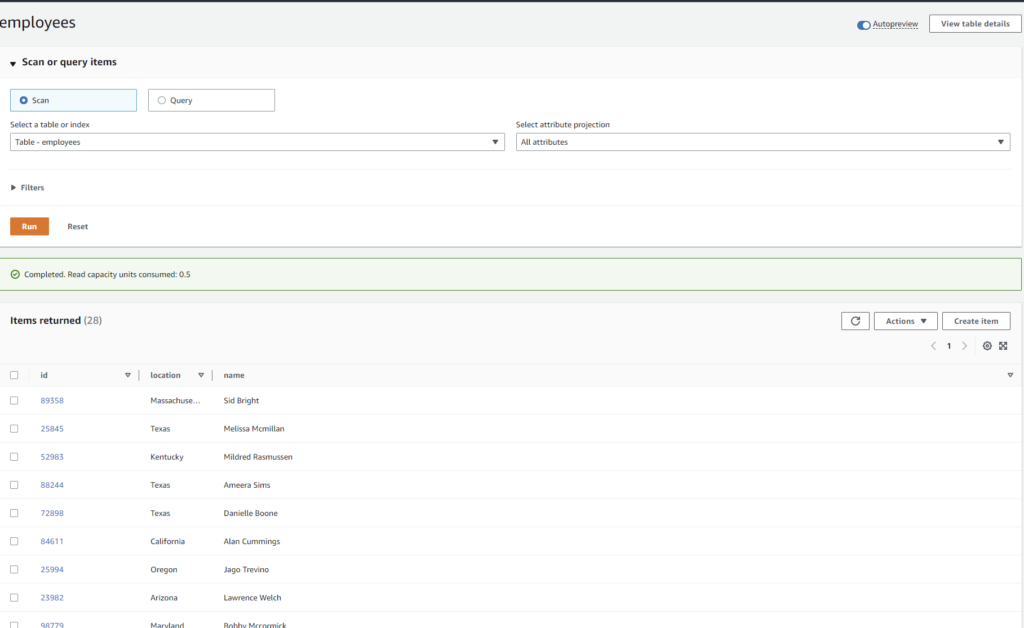
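The loop above relies on the exception handler to absorb the blank last line of the file. A possible variation, shown here only as a sketch, separates parsing from writing so that blank or incomplete lines are skipped explicitly. The helper names `parse_employees` and `store_employees` are hypothetical, the column order id,name,location is taken from the code above, and stripping whitespace around each field is an added assumption.

```python
def parse_employees(text):
    """Turn 'id,name,location' lines into items, skipping blank or short lines."""
    items = []
    for line in text.splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) < 3 or not fields[0]:
            continue  # blank line or missing columns
        items.append({"id": fields[0], "name": fields[1], "location": fields[2]})
    return items

def store_employees(table, items):
    """Write each item with put_item; `table` behaves like a boto3 DynamoDB Table."""
    for item in items:
        table.put_item(Item=item)
    return len(items)
```

Inside the handler this would be used as `store_employees(table, parse_employees(data))`, so any exception raised by `put_item` is no longer mistaken for the end of the file.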

After writing this Lambda function, deploy and test it; you will see the same output screen. To check whether the data was added to the table, go to your table in DynamoDB and click “Explore table items”. You can see the data that was added to the table.

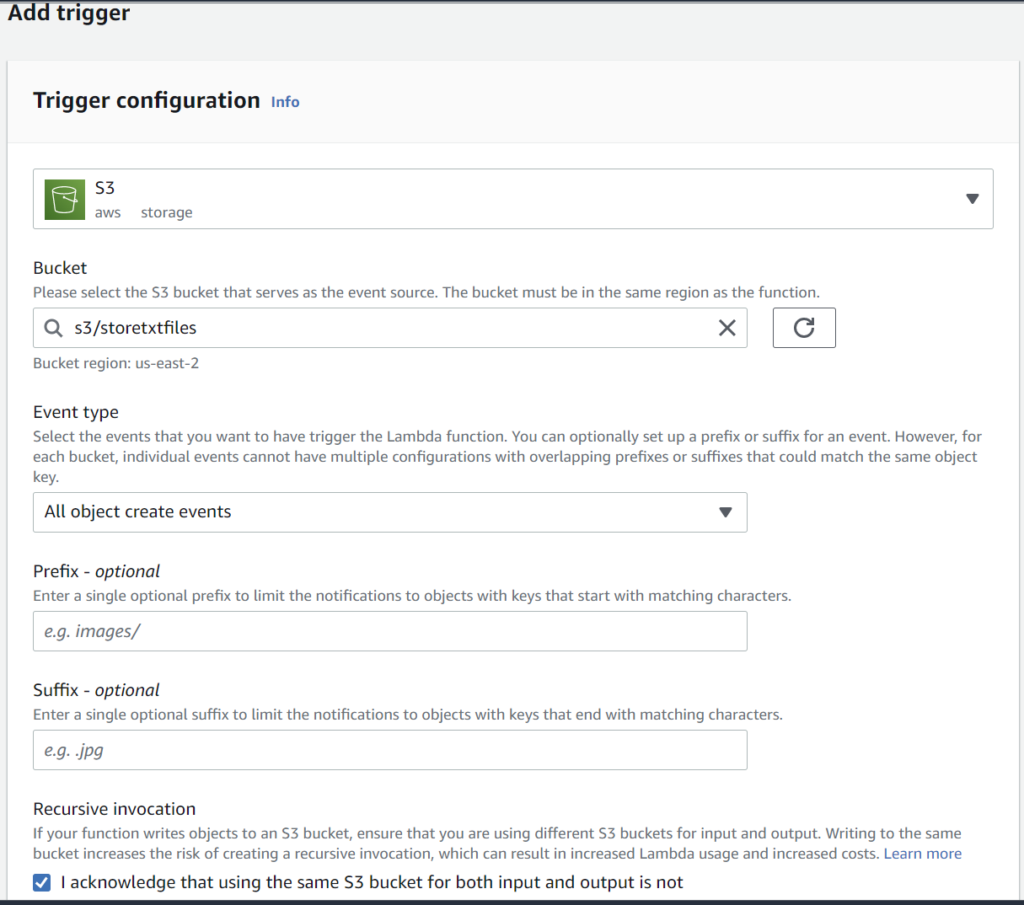

Step-8 -> Now we have to automate this: whenever we upload an updated text file to our S3 bucket, the data in our table should be updated automatically, without us running the Lambda function manually. To do this, add a trigger to our Lambda function. Select S3 as the Source, choose the bucket where our text file is stored, leave the other settings at their defaults and click Add.

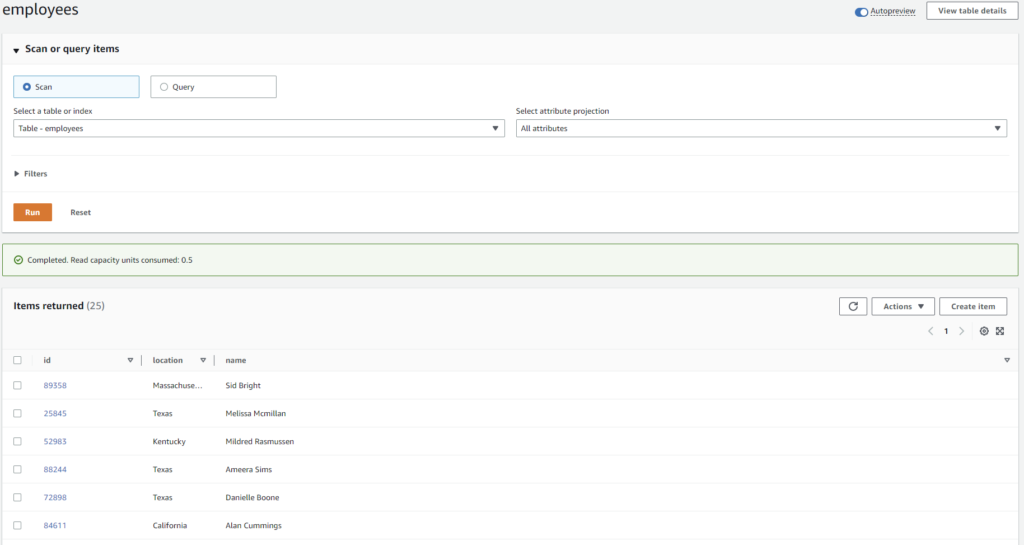
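Behind the console form, an S3 trigger is a notification configuration on the bucket. The sketch below builds such a configuration for all object-created events; the helper name `s3_trigger_configuration` and the example ARN are placeholders, and the `.txt` suffix filter is an assumption added for illustration (the console steps above leave the filters empty).

```python
def s3_trigger_configuration(lambda_arn, suffix=".txt"):
    """Build a bucket notification configuration that invokes a Lambda function."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                # Fire for every kind of object creation (put, post, copy, ...)
                "Events": ["s3:ObjectCreated:*"],
                # Optional filter: only keys ending in `suffix` (assumption)
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
        ]
    }

# Placeholder ARN for the function created in Step-3
config = s3_trigger_configuration(
    "arn:aws:lambda:us-east-1:123456789012:function:reading_txt_data"
)
```

With boto3 this would be applied through `boto3.client("s3").put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=config)`. When the trigger is set up this way rather than in the console, S3 must also be allowed to invoke the function, for example with the Lambda client's `add_permission` call.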

This ensures that the table is updated whenever we upload a new version of our text file. Now you can edit the data by adding or removing some rows and upload the file to your S3 bucket again. I have edited the data by removing 3 rows. As you can see in the picture below, only 25 rows are now displayed in our table.

Thank you for reading my blog. I am attaching the text file and the code for the Lambda function in a zip file.

Tags: S3 Bucket, DynamoDB, Lambda