Overview

There are 6 steps to complete this exercise:

- Create an S3 bucket.

- Create a Lambda function and add an S3 trigger to it.

- Update the S3 event notifications.

- Create IAM roles to access and alter S3 bucket objects using Lambda.

- Implement the logic to segregate files in the S3 bucket into their respective folders.

- Validate the results.

What is this exercise about?

This exercise is about creating a Lambda function and writing Python logic so that whenever a file is uploaded to an S3 bucket, the Lambda function is triggered automatically and the file is moved into its respective folder.

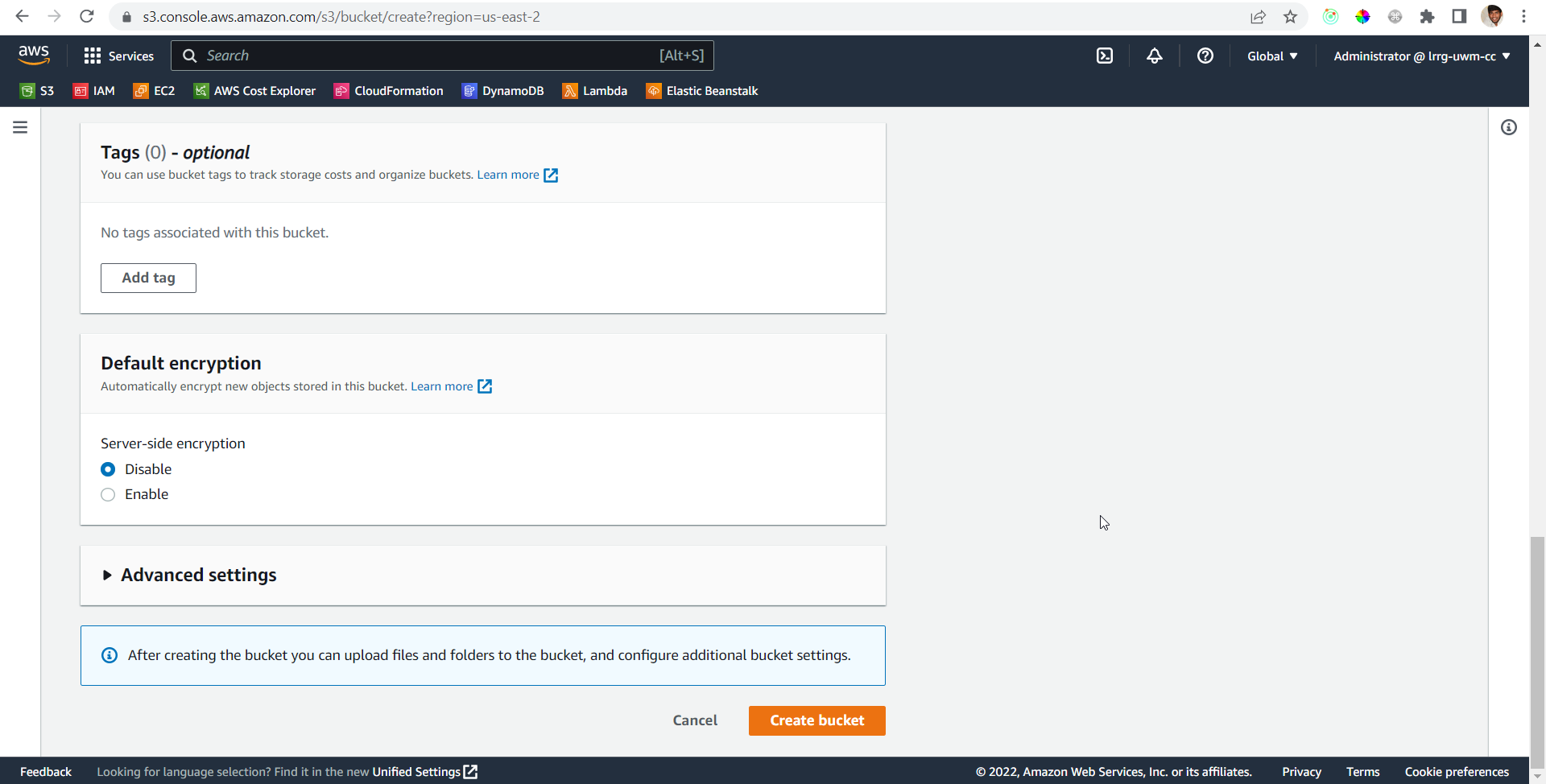

Create an S3 bucket

This is step 1 of 6 in this exercise. In this step, you create a Simple Storage Service (S3) bucket that you can use as cloud-based personal storage, and you create folders in it. S3 is a general-purpose storage service within AWS with many features. Here is a three-minute introduction:

- From the AWS Management Console, please do the following:

- Navigate: Console Home –> S3 –> Create Bucket.

- Enter a bucket name. Bucket names must be unique across all AWS accounts in all the AWS Regions within a partition. A partition is a grouping of Regions. Because of this uniqueness requirement, it is a good idea to use “domain name-like” names for your buckets. For example, if I create a bucket to stage files for this blog site, I might name it blog-3.lrrg.com. This is very likely to be unique.

- Select a Region. To keep your AWS resources close to each other, put the bucket in the same Region you are using for your other AWS resources.

- Take the defaults for everything else.

- Scroll to the bottom –> Create Bucket.

- Create 3 folders inside the bucket. Suggested names: Pictures, documents, miscellaneous.

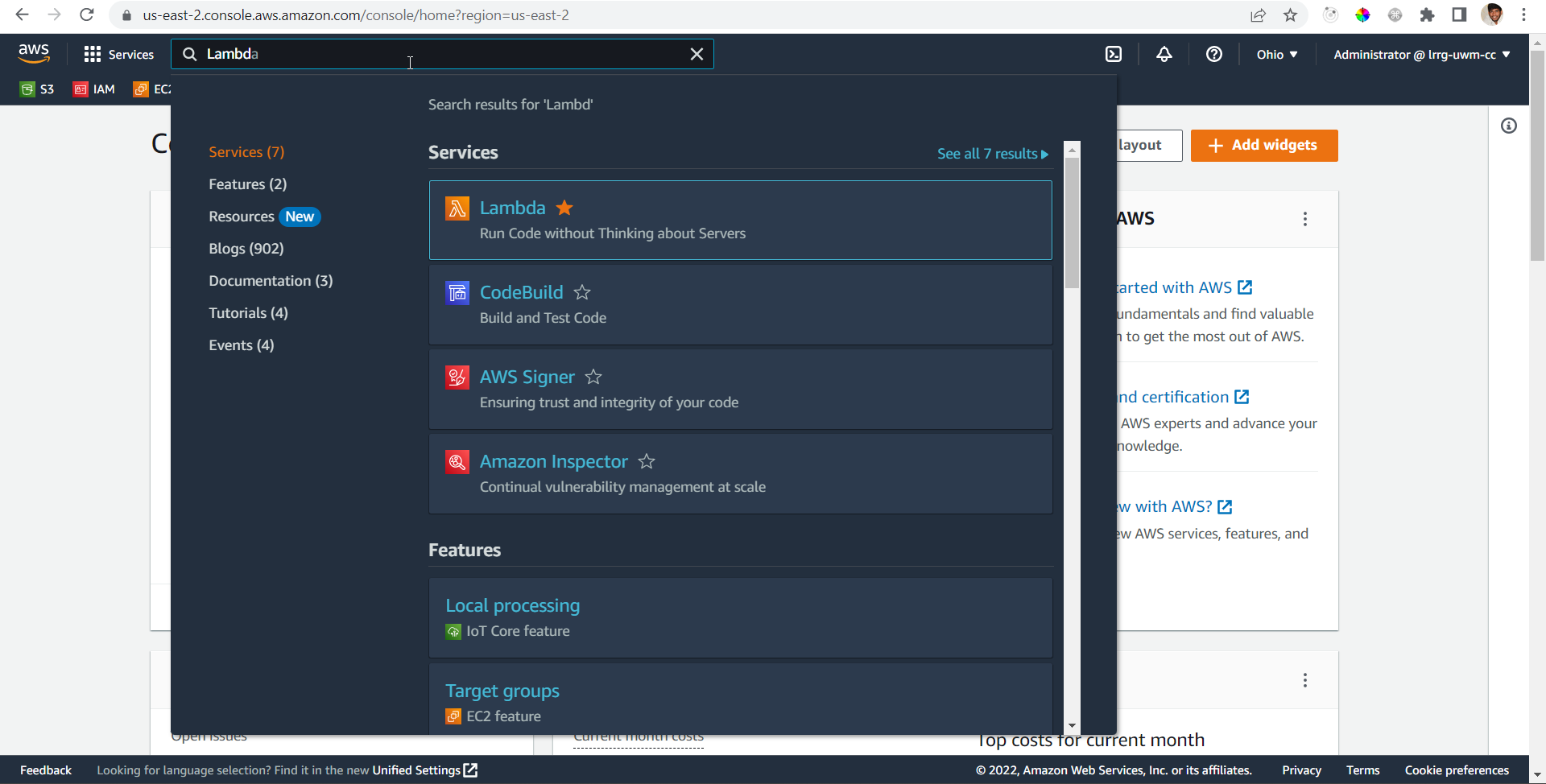

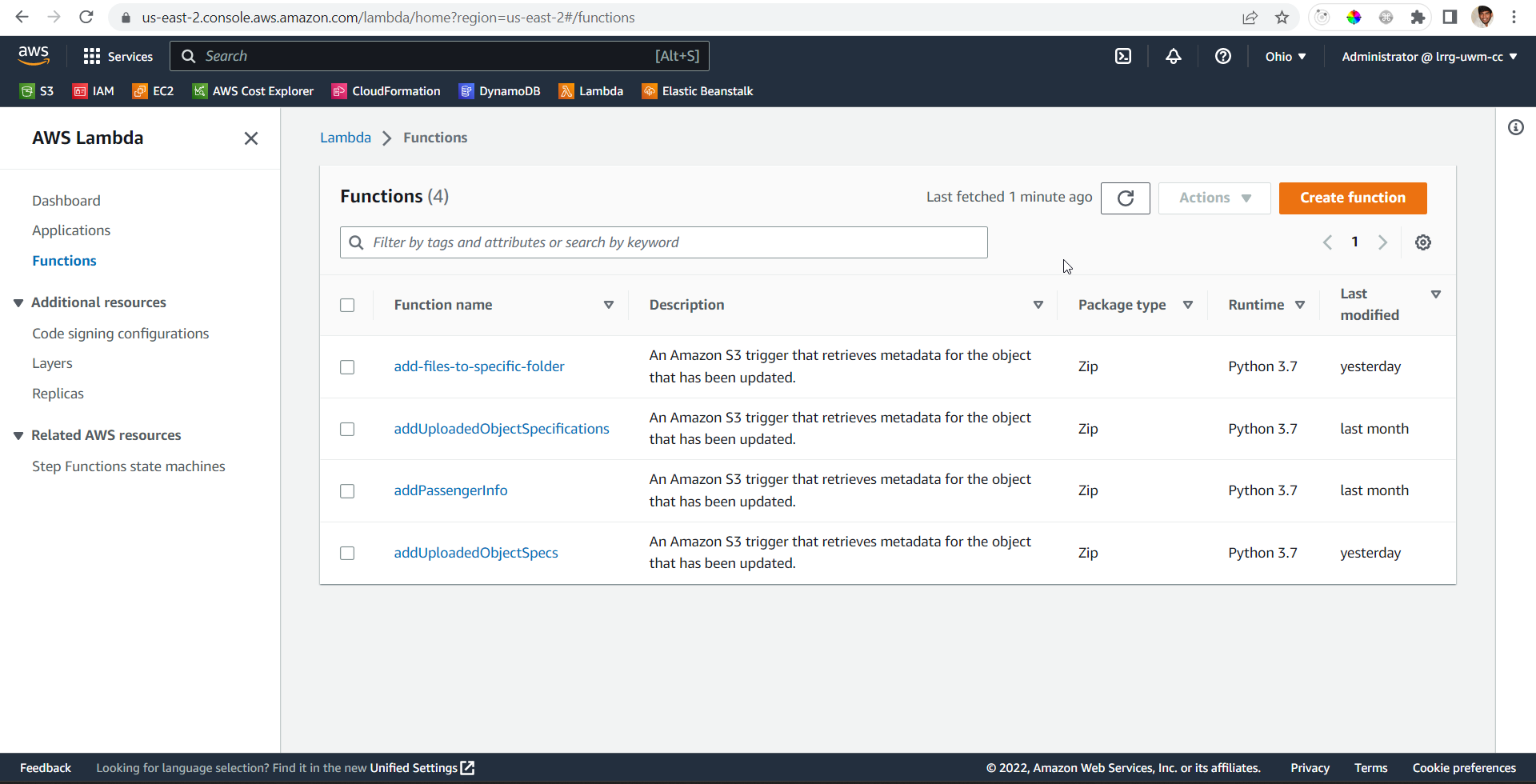
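The console steps above can also be scripted. The following is a minimal sketch, assuming the boto3 SDK and credentials that are allowed to create buckets. The helper name `create_bucket_with_folders` and the Region `eu-west-1` are illustrative only and do not come from this exercise. S3 has no real folders, so each "folder" here is a zero-byte object whose key ends in `/`, which is also what the console creates.

```python
def create_bucket_with_folders(s3_client, bucket_name, region, folders):
    """Create a bucket and one zero-byte marker object per folder name.

    s3_client is expected to be a boto3 S3 client (or any object with the
    same create_bucket / put_object methods). Returns the marker keys.
    """
    if region == "us-east-1":
        # us-east-1 is the default location and must not be passed explicitly.
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )

    # A key ending in "/" is shown as a folder in the S3 console.
    marker_keys = [f"{name.strip('/')}/" for name in folders]
    for key in marker_keys:
        s3_client.put_object(Bucket=bucket_name, Key=key)
    return marker_keys


# Hypothetical usage (needs boto3 and valid AWS credentials):
#   import boto3
#   s3 = boto3.client("s3", region_name="eu-west-1")
#   create_bucket_with_folders(
#       s3, "blog-3.lrrg.com", "eu-west-1",
#       ["Pictures", "documents", "miscellaneous"],
#   )
```

Passing the client in as a parameter keeps the helper easy to try against a stub before pointing it at a real account.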

Create a Lambda function and add an S3 trigger to it

This is step 2 of 6 in this exercise. In this step, you create a Lambda function and add the trigger to it. AWS Lambda is a serverless compute service that executes your code in response to events and automatically manages the underlying compute resources for you.

For more information, see the AWS Lambda documentation.

- From the AWS Management Console, please do the following:

- Navigate: Console Home –> Search Lambda and click on it –> Create Function.

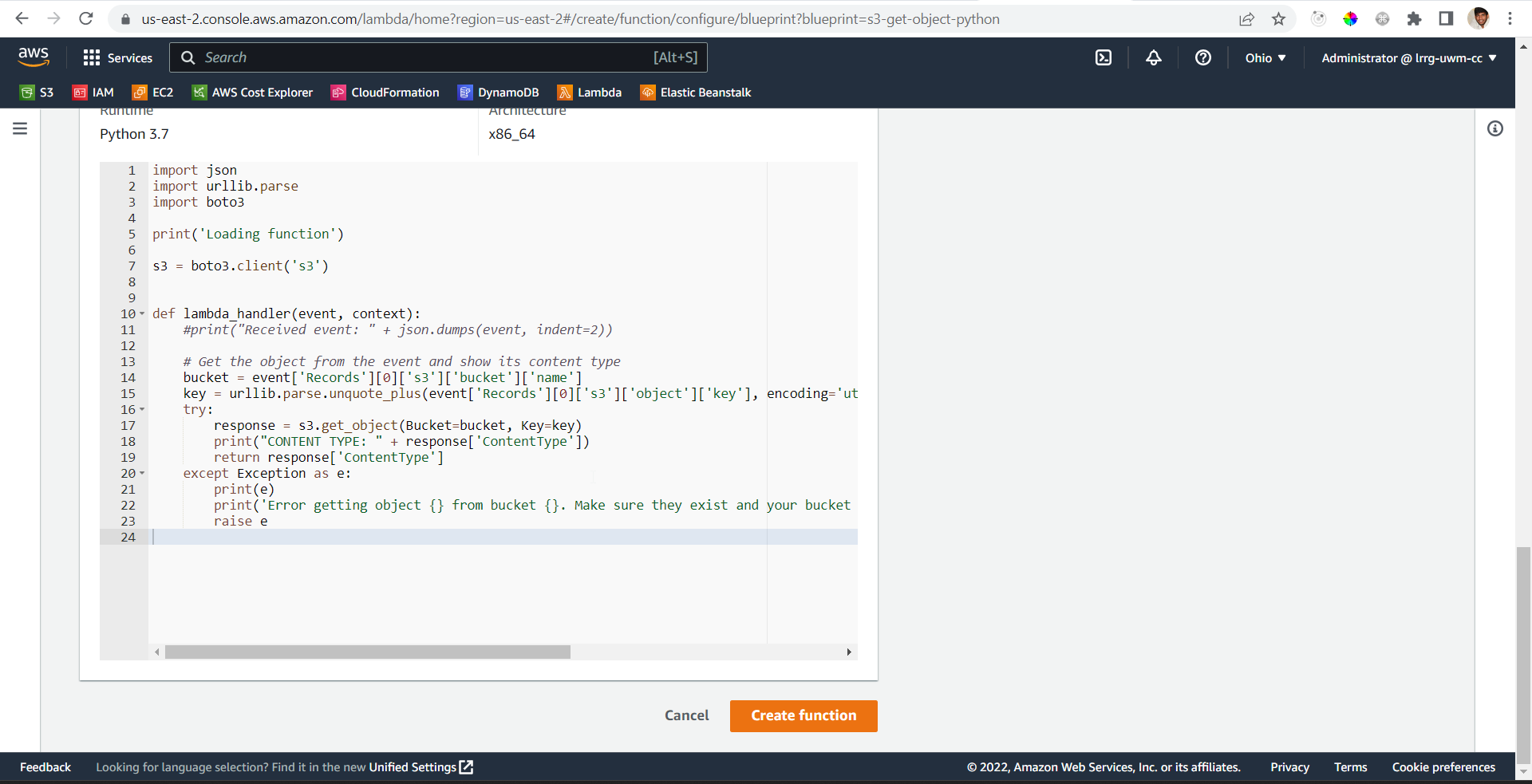

- Select the Use a blueprint option, search for s3-get-object-python, and select the Get S3 object entry.

- Click Configure

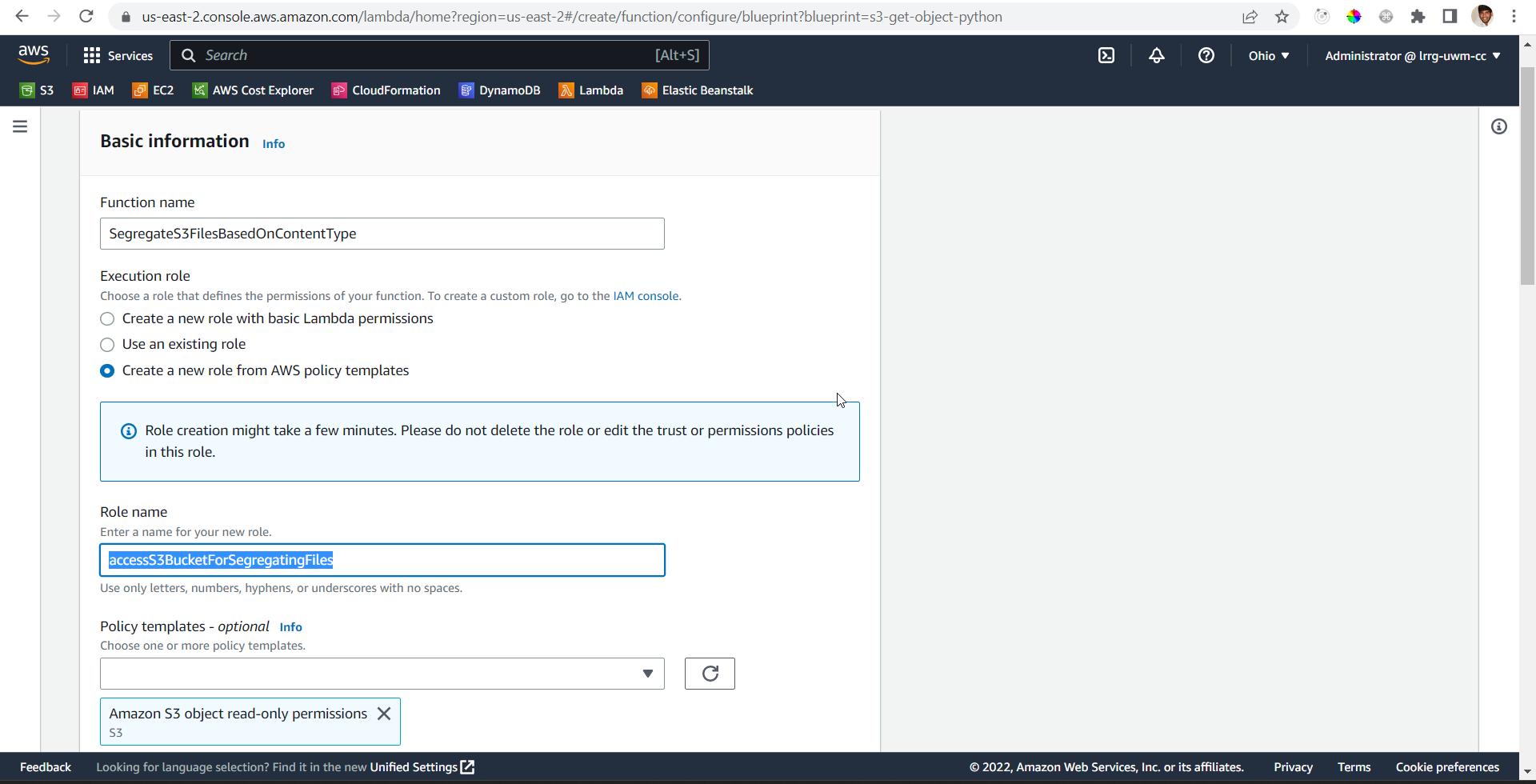

- On the Basic information page, fill in the corresponding values:

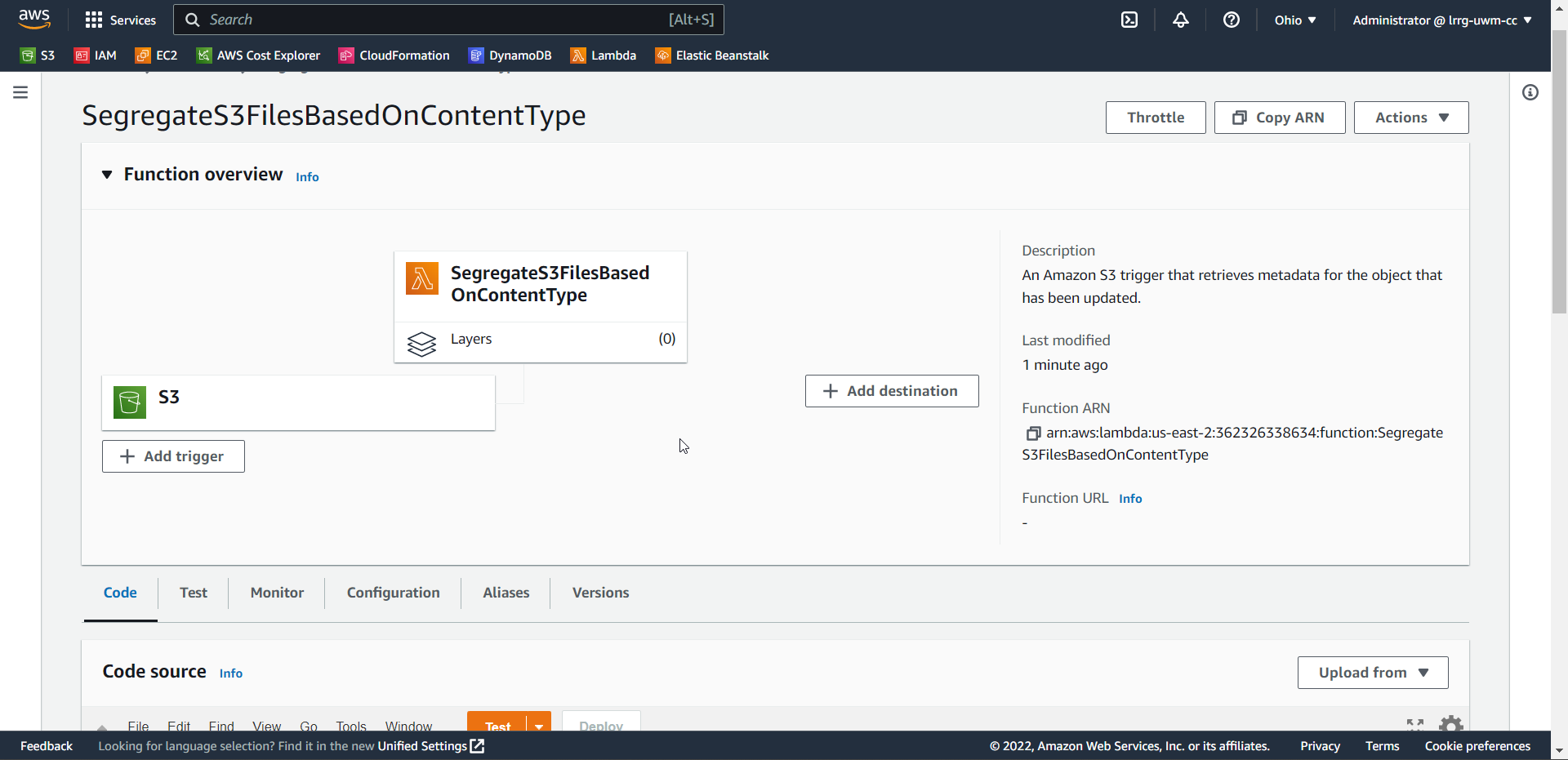

- For Function name, give a suitable name. Suggested name: SegregateS3FilesBasedOnContentType.

- For Execution role, select Create a new role from AWS policy templates.

- For Role name, give a suitable name. Suggested name: accessS3BucketForSegregatingFiles.

- Leave Policy templates at the default.

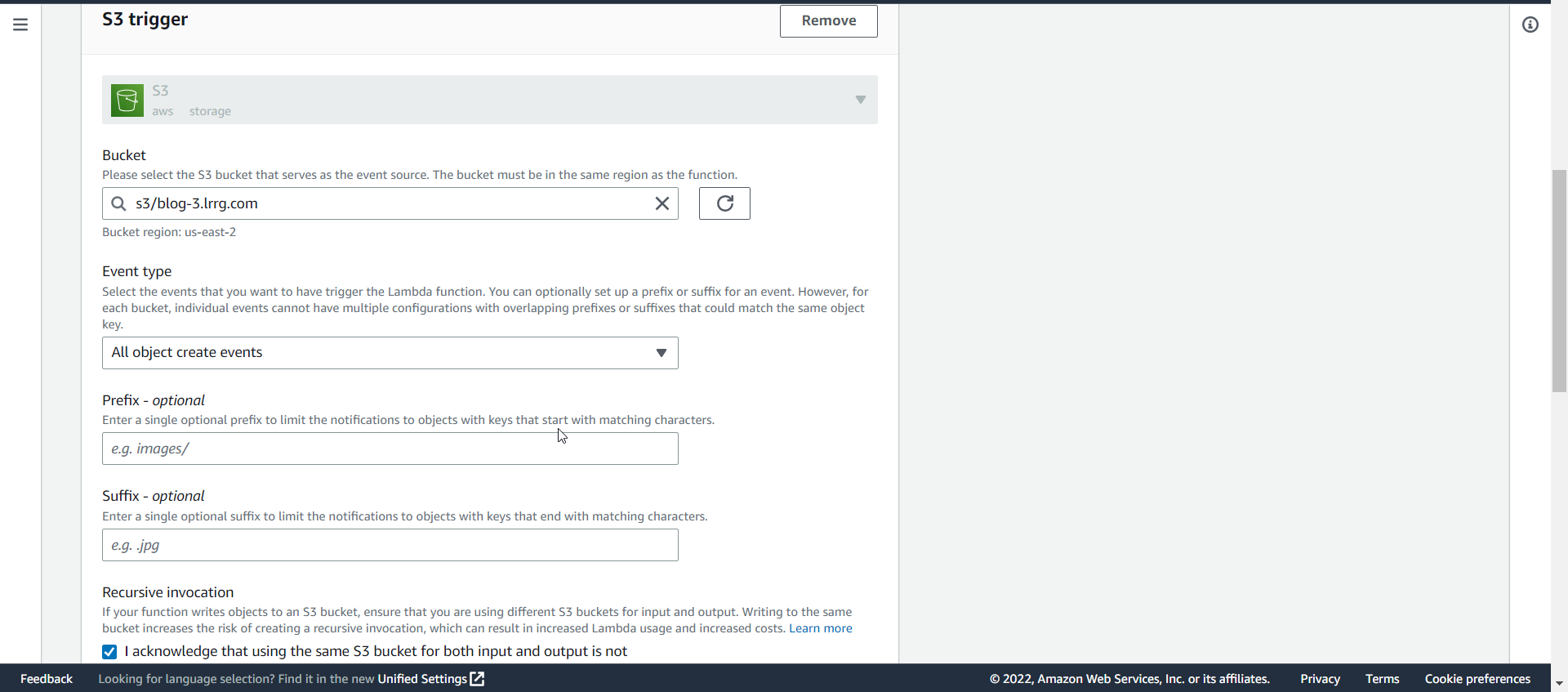

- In the S3 trigger section, for Bucket, select your recently created bucket (s3/blog-3.lrrg.com).

- For Event type, select All object create events.

- Leave the other values at their defaults and select the acknowledgement checkbox.

- Click Create function.

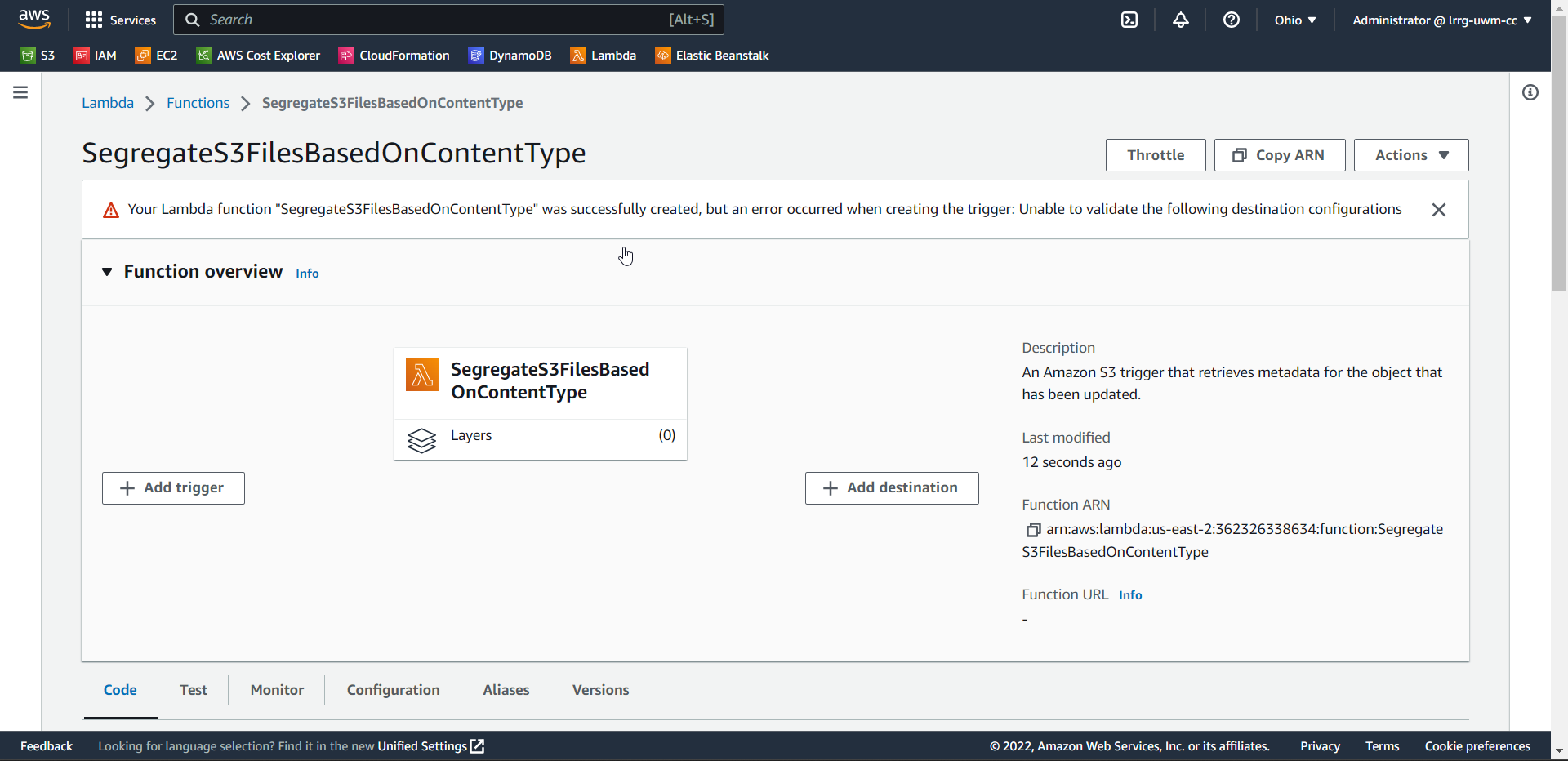

- Once the function is created, if there are no errors, you will see a trigger below the Add trigger button.

- If you see the error “Your Lambda function “SegregateS3FilesBasedOnContentType” was successfully created, but an error occurred when creating the trigger: Unable to validate the following destination configurations”, you can create the S3 trigger another way, using Event Notifications in S3.

- If you don’t see any error, skip the Update the S3 event notifications step. The IAM permissions step is still needed before you implement the logic.

Update the S3 event notifications

This is step 3 of 6 in this exercise. In this step, you will create an event notification for S3. You can skip this step if you didn’t see any error message in step 2.

- From the AWS Management Console, please do the following:

- Navigate: Console Home –> S3 –> select the recently created bucket –> click on the Properties tab.

- Scroll down to the Event notifications section and click on Create event notification

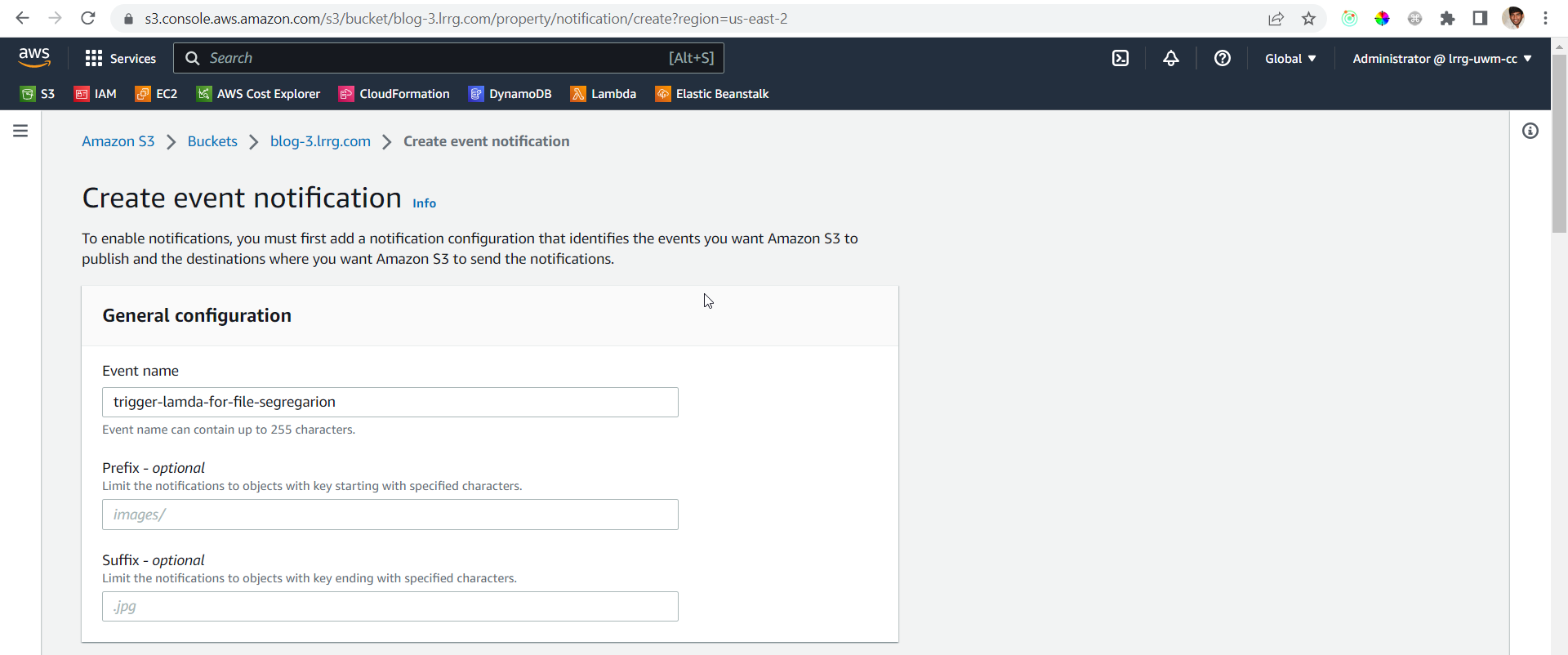

- In the Create event notification section, for Event name, enter a valid name. Suggested name: trigger-lambda-for-file-segregation.

- Leave the other values at their defaults.

- In the Event types section, check the All object create events checkbox.

- Leave the other values at their defaults.

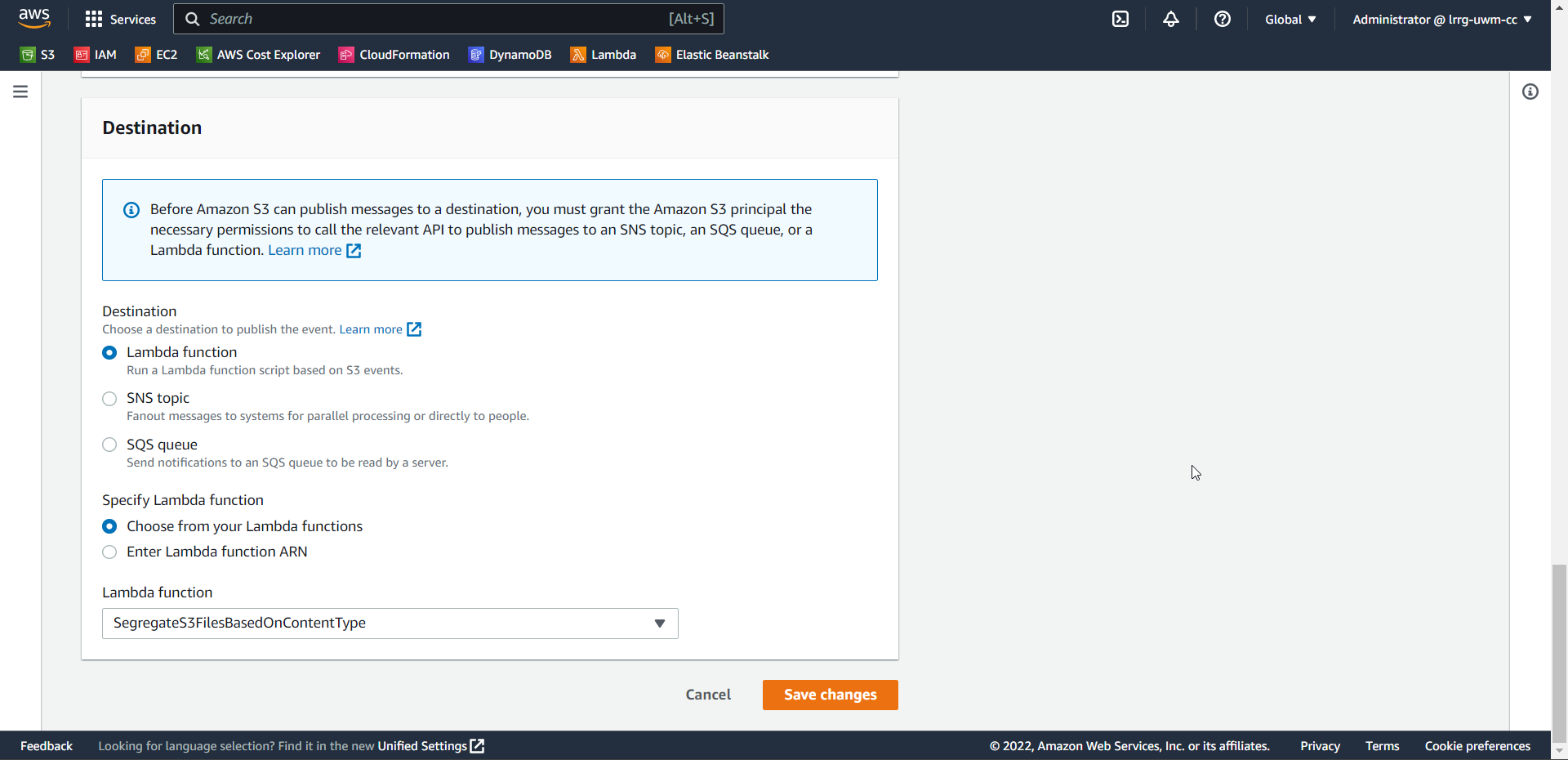

- In the Destination section, for Destination, choose Lambda function.

- For Specify Lambda function, choose the option Choose from your Lambda functions.

- In the Lambda function dropdown, choose the Lambda function that you created previously.

- Click on Save changes.

- Once the creation is successful, navigate to the Lambda function and check whether the trigger has been added.

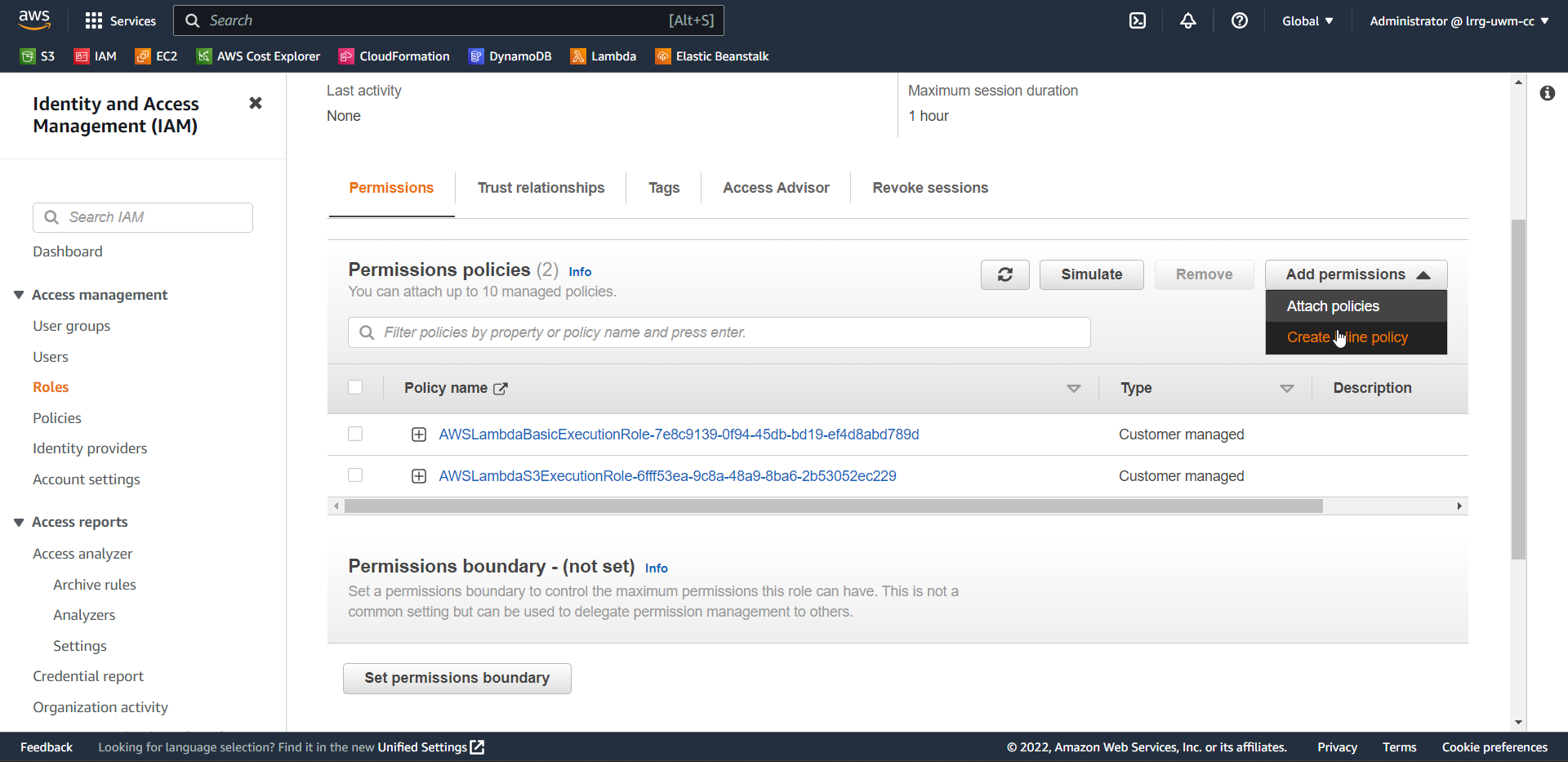
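For reference, the same event notification can be expressed in code. This is a hedged sketch assuming boto3. The function ARN in the usage comment is a placeholder, and the helper names are illustrative. Two caveats apply when scripting this instead of using the console: `put_bucket_notification_configuration` replaces the bucket's whole notification configuration, and the Lambda function must already allow S3 to invoke it (the console normally adds that permission for you).

```python
def build_notification_configuration(function_arn, event_name):
    """Build the notification configuration for 'All object create events'."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "Id": event_name,
                "LambdaFunctionArn": function_arn,
                # "s3:ObjectCreated:*" covers Put, Post, Copy and
                # multipart-upload completion events.
                "Events": ["s3:ObjectCreated:*"],
            }
        ]
    }


def add_lambda_trigger(s3_client, bucket_name, function_arn, event_name):
    """Attach the notification to the bucket (overwrites existing config)."""
    config = build_notification_configuration(function_arn, event_name)
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=config,
    )
    return config


# Hypothetical usage (placeholder account ID and Region):
#   import boto3
#   add_lambda_trigger(
#       boto3.client("s3"),
#       "blog-3.lrrg.com",
#       "arn:aws:lambda:eu-west-1:123456789012:function:SegregateS3FilesBasedOnContentType",
#       "trigger-lambda-for-file-segregation",
#   )
```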

Creating IAM roles to access and alter S3 bucket objects using lambda

This is step 4 of 6 in this exercise. In this step, you will give the Lambda function’s IAM role the permissions it needs to create or alter files in the S3 bucket.

- From the AWS Management Console, please do the following:

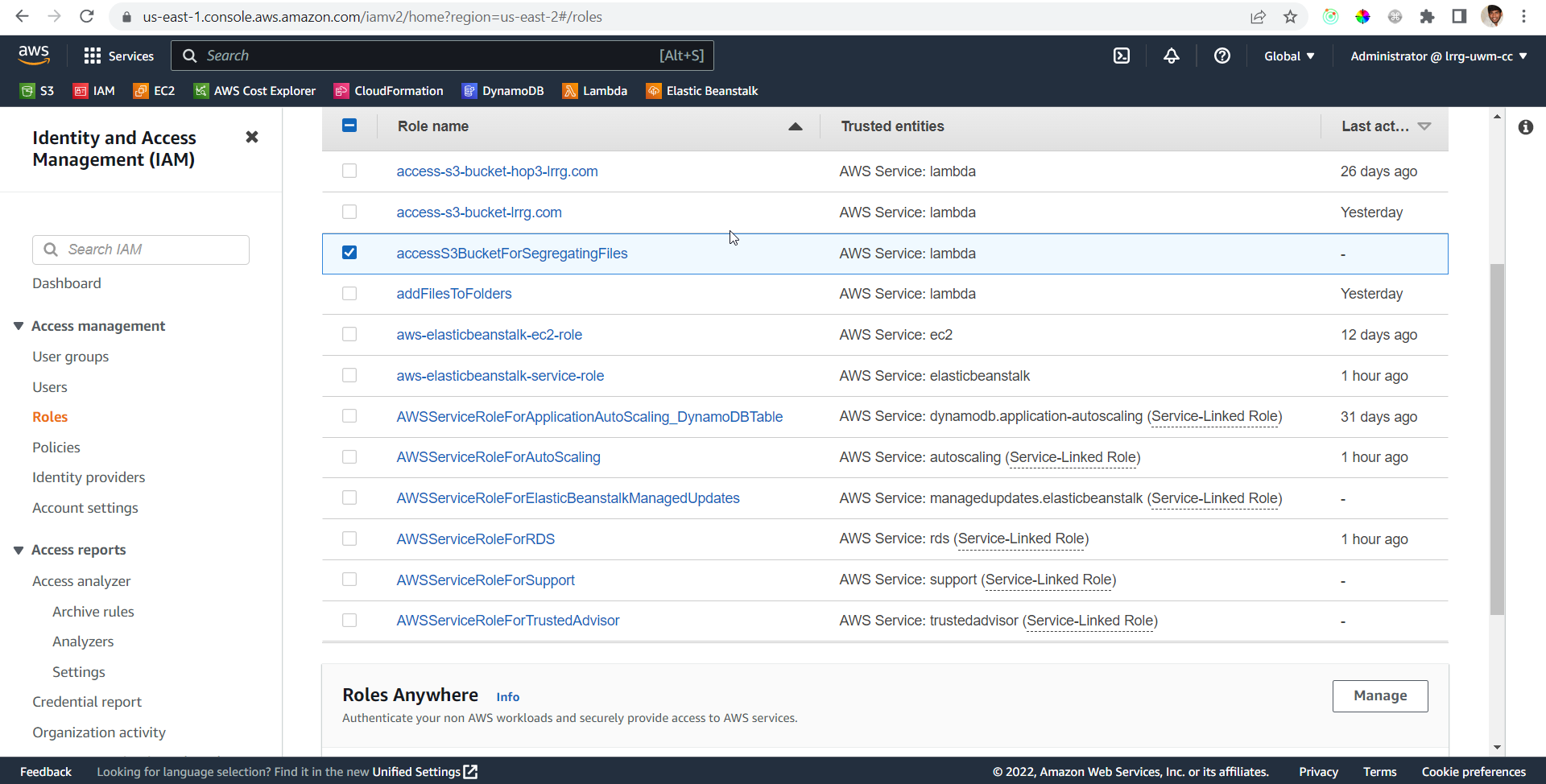

- Navigate: Console Home –> Search IAM and click on it –> click on Roles –> click on the recently created role accessS3BucketForSegregatingFiles

- Click on the Add permissions menu and select Create inline policy.

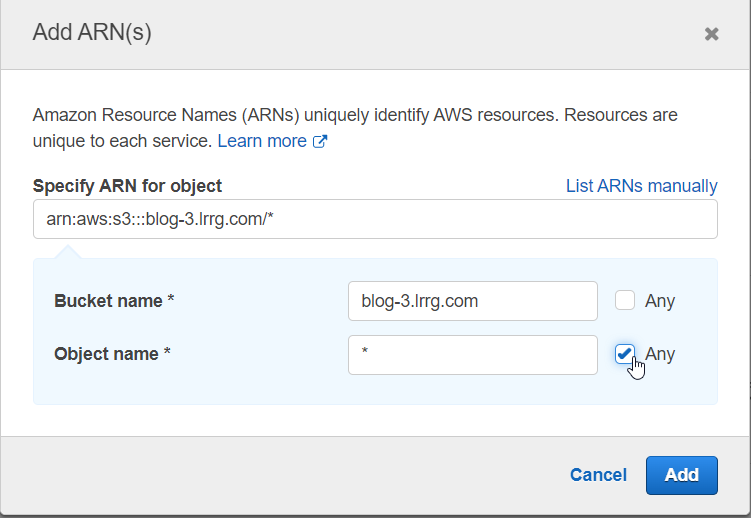

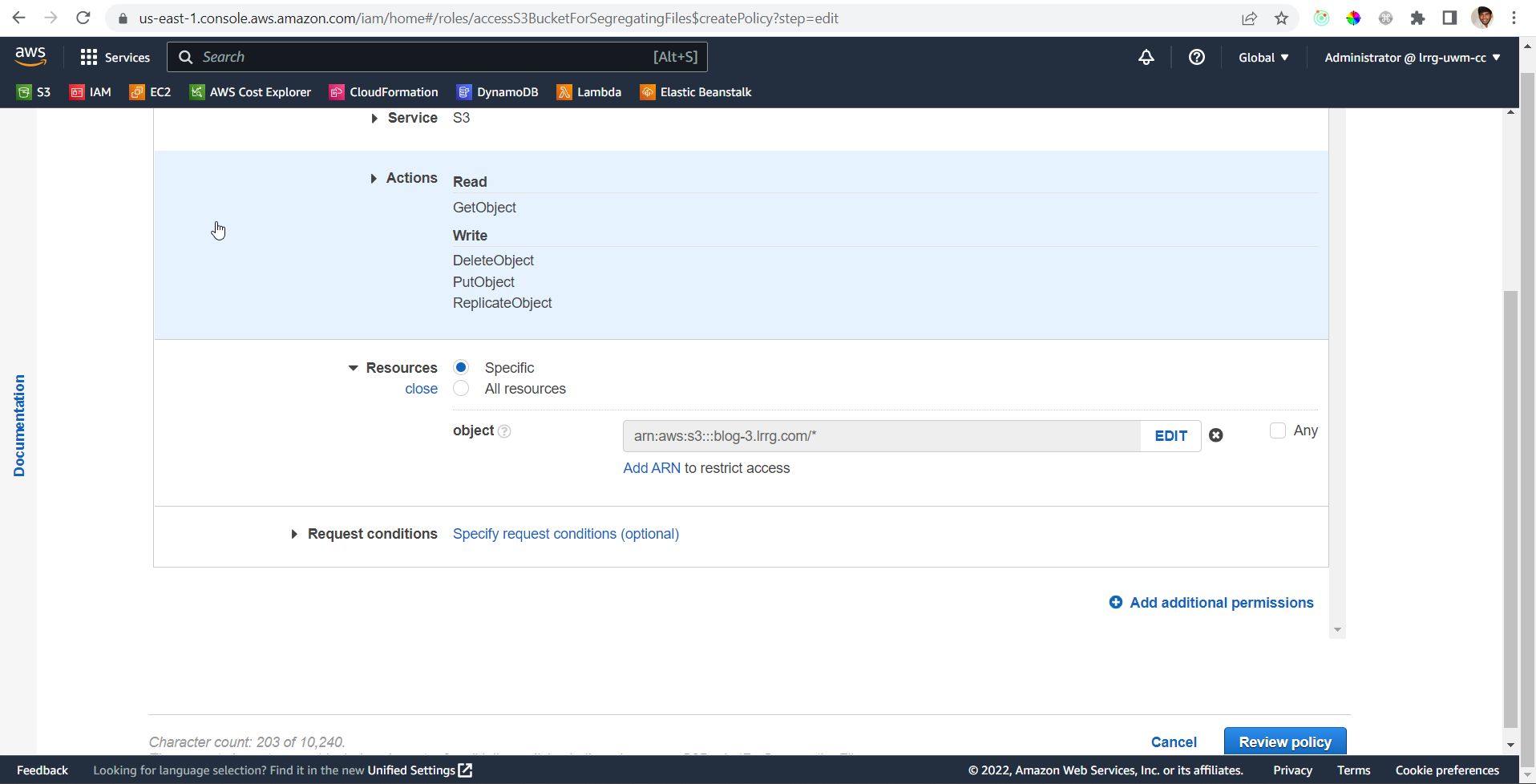

- For Service, choose S3.

- For Actions, choose GetObject, DeleteObject, PutObject, and ReplicateObject.

- For Resources, click on Add ARN, fill in the bucket name with the recently created bucket (blog-3.lrrg.com), check the Any checkbox for Object name, and click on Add.

- Click on Review policy.

- In the next step, enter a valid name (suggested name: S3-Bucket-CRUD-Permission) and click on Create policy.

- Once the creation is successful, the new policy should appear in the role’s list of permissions.

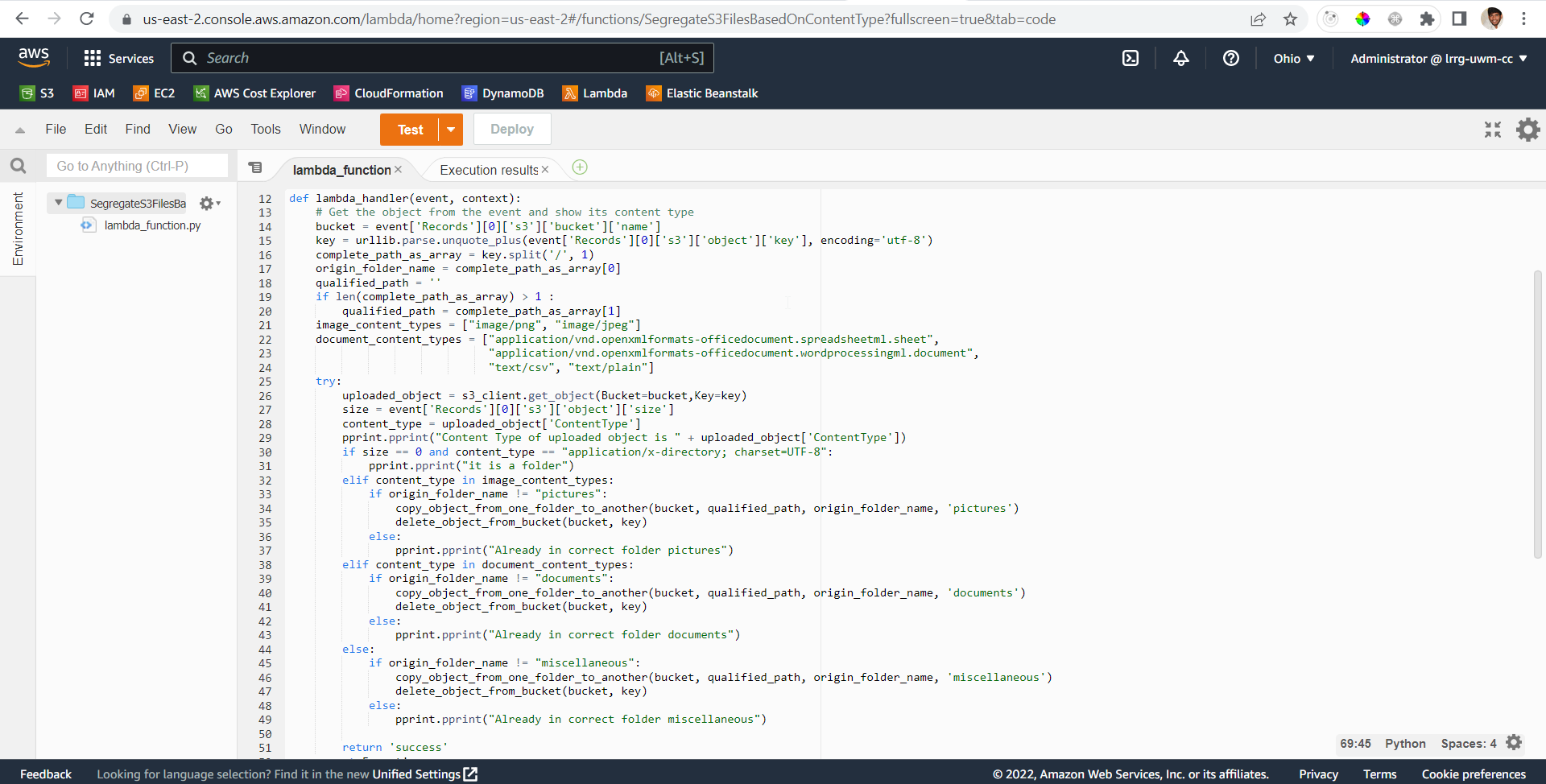
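The inline policy built in the visual editor corresponds roughly to the JSON document below. This is a sketch, assuming the standard `aws` partition and the bucket name used in this exercise. The console may add extra fields such as a statement ID, and `build_inline_policy` is an illustrative helper name.

```python
import json


def build_inline_policy(bucket_name):
    """Return an IAM policy document allowing object-level access to one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "s3:GetObject",
                    "s3:PutObject",
                    "s3:DeleteObject",
                    "s3:ReplicateObject",
                ],
                # "/*" matches any object name inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


policy_json = json.dumps(build_inline_policy("blog-3.lrrg.com"), indent=2)
print(policy_json)

# Hypothetical usage with boto3 (needs IAM permissions):
#   import boto3
#   boto3.client("iam").put_role_policy(
#       RoleName="accessS3BucketForSegregatingFiles",
#       PolicyName="S3-Bucket-CRUD-Permission",
#       PolicyDocument=policy_json,
#   )
```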

Implement the logic to segregate files

This is step 5 of 6 in this exercise. In this step, you will write the logic to copy and delete files in S3 from the Lambda function.

The idea behind this implementation is that the Lambda function is triggered as soon as an object is uploaded to S3. The event parameter of the Lambda function identifies the uploaded object (its bucket and key). So, I retrieve the content type of the uploaded object and compare it with known values (for example, a CSV file has the content type “text/csv” and a PNG file has “image/png”). Once the content type is matched, I check whether the uploaded file is already in its designated folder. If not, I copy the object to the designated folder and delete it from the original folder.
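The routing decision described above can be sketched as a small pure function, separate from any S3 calls. Only the two content types named in the text (`text/csv` and `image/png`) come from this exercise. The mapping to folders, the fallback to `miscellaneous`, and the names `designated_folder` and `plan_move` are assumptions made for illustration.

```python
import posixpath

# Assumed mapping from content type to folder; extend as needed.
CONTENT_TYPE_FOLDERS = {
    "text/csv": "documents",
    "image/png": "Pictures",
}
DEFAULT_FOLDER = "miscellaneous"  # assumption: unknown types go here


def designated_folder(content_type):
    """Pick the folder for a content type, ignoring parameters like charset."""
    media_type = content_type.split(";")[0].strip().lower()
    return CONTENT_TYPE_FOLDERS.get(media_type, DEFAULT_FOLDER)


def plan_move(key, content_type):
    """Return the key the object should move to, or None if nothing to do."""
    if key.endswith("/"):
        return None  # folder marker object, not a real file
    folder = designated_folder(content_type)
    if key.startswith(folder + "/"):
        return None  # already in its designated folder
    return f"{folder}/{posixpath.basename(key)}"


print(plan_move("report.csv", "text/csv"))
print(plan_move("Pictures/logo.png", "image/png"))
```

Returning `None` for an object that is already in place matters here: the copy into the designated folder is itself an object-create event, so the function is invoked again for the copied object and needs a way to do nothing.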

- The Lambda function has 3 function definitions inside it:

- lambda_handler

- delete_object_from_bucket

- copy_object_from_one_folder_to_another

- lambda_handler retrieves the content type, matches it against the designated content types, and calls copy_object_from_one_folder_to_another and delete_object_from_bucket as needed.

- delete_object_from_bucket has the logic for deleting files from S3.

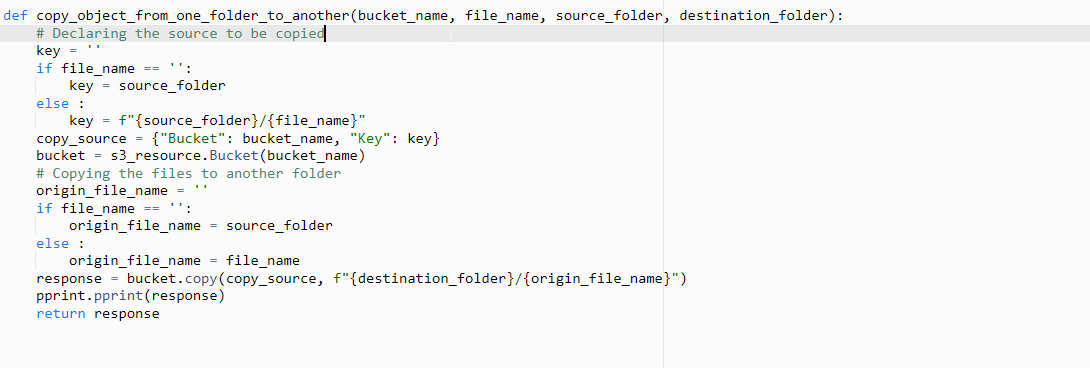

- copy_object_from_one_folder_to_another has the logic for copying S3 files from one folder to another.
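As a rough illustration of how these three functions might fit together, here is a minimal sketch. It is not the code from the zip file. It assumes boto3 (available in the AWS Lambda Python runtime), looks up the content type with `head_object`, and uses a deliberately tiny content-type table. The extra `s3_client` parameter is an assumption added so the handler can be tried with a stub client.

```python
import urllib.parse

# Assumed, minimal routing table; unknown types go to "miscellaneous".
FOLDER_BY_CONTENT_TYPE = {"text/csv": "documents", "image/png": "Pictures"}


def copy_object_from_one_folder_to_another(s3_client, bucket, source_key, target_key):
    """Copy an object to a new key inside the same bucket."""
    s3_client.copy_object(
        Bucket=bucket,
        Key=target_key,
        CopySource={"Bucket": bucket, "Key": source_key},
    )


def delete_object_from_bucket(s3_client, bucket, key):
    """Delete a single object from the bucket."""
    s3_client.delete_object(Bucket=bucket, Key=key)


def lambda_handler(event, context, s3_client=None):
    if s3_client is None:
        import boto3  # imported lazily so the sketch also runs with a stub
        s3_client = boto3.client("s3")

    moved = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Keys arrive URL-encoded in the event (spaces become "+").
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        if key.endswith("/"):
            continue  # folder marker, nothing to move

        head = s3_client.head_object(Bucket=bucket, Key=key)
        content_type = head.get("ContentType", "")
        folder = FOLDER_BY_CONTENT_TYPE.get(content_type, "miscellaneous")
        if key.startswith(folder + "/"):
            continue  # already in place; also stops the copy from looping

        target_key = f"{folder}/{key.rsplit('/', 1)[-1]}"
        copy_object_from_one_folder_to_another(s3_client, bucket, key, target_key)
        delete_object_from_bucket(s3_client, bucket, key)
        moved.append({"from": key, "to": target_key})
    return moved
```

Copying before deleting means a failed copy leaves the original object untouched.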

Download the zip file to view the whole code.

Validate the results

This is step 6 of 6 in this exercise. In this step, you will test your logic and check whether it works as expected.

- You can test the code by uploading different types of files to S3.

- I have attached a video of testing different scenarios.

- The code works as expected in the scenarios I tested.
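Besides checking in the console, the result can be inspected from a script. The sketch below groups object keys by their top-level folder so that misplaced files stand out. The helper name `group_keys_by_folder` is illustrative, and the commented usage assumes boto3 and the bucket name from this exercise.

```python
from collections import defaultdict


def group_keys_by_folder(keys):
    """Group object keys by top-level folder, skipping folder marker objects."""
    grouped = defaultdict(list)
    for key in keys:
        if key.endswith("/"):
            continue  # zero-byte folder marker
        folder, separator, name = key.partition("/")
        if separator:
            grouped[folder].append(name)
        else:
            grouped["(bucket root)"].append(key)
    return dict(grouped)


# Hypothetical usage (needs boto3 and valid AWS credentials):
#   import boto3
#   s3 = boto3.client("s3")
#   pages = s3.get_paginator("list_objects_v2").paginate(Bucket="blog-3.lrrg.com")
#   keys = [obj["Key"] for page in pages for obj in page.get("Contents", [])]
#   print(group_keys_by_folder(keys))
```

After a successful run, nothing should remain under the bucket root entry.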