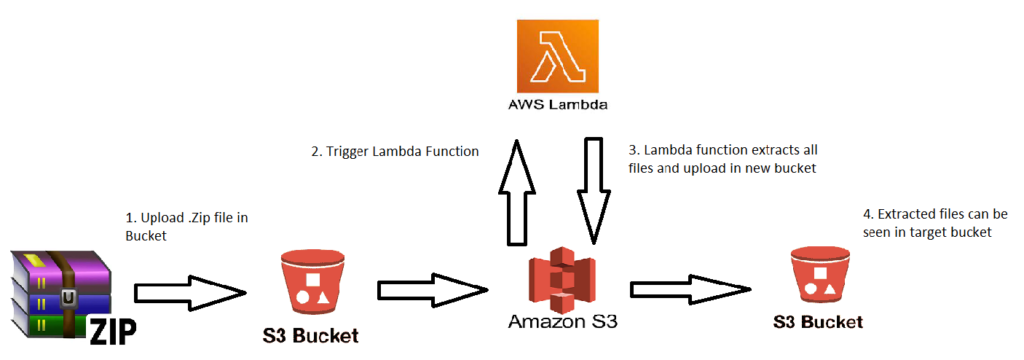

In this post, we will see how to extract zip files from a source S3 bucket into a target bucket using a Lambda function.

Amazon S3 is an object storage service where you can upload or remove objects. An object is a file and any metadata that describes that file. Once an object is in a bucket, you can open it, download it, and move it. Amazon S3 lets us store objects in any format, such as zip, png, or txt. Although we can upload a zip file as an object, S3 does not let you see the files inside it. So, we are going to use a Lambda function that reads the zip file from the S3 bucket, extracts all the files from it, and puts them into a folder in the destination bucket.

For a Lambda function triggered by an S3 bucket to work, it needs permission to read that bucket. Attaching the appropriate policies to the specific user or role grants that access.

What is Bucket Policy?

An S3 bucket policy allows you to manage access to specific Amazon S3 storage resources. When you create a new Amazon S3 bucket, you should set a policy granting the relevant permissions to the principals (users or roles) that need them. Bucket policies are resource-based policies, written in the Identity and Access Management (IAM) policy language, for controlling access to resources.

To learn more about bucket policies and IAM user policies, see the AWS documentation.

AWS Lambda

AWS Lambda is a serverless compute service: you run code without provisioning or managing any servers yourself. The code is executed in response to events from AWS services, such as adding or removing files in an S3 bucket, updating Amazon DynamoDB tables, or HTTP requests from Amazon API Gateway. AWS Lambda supports languages such as Java, Node.js, Python, C#, Go, Ruby, and PowerShell. (Note: Lambda functions are invoked through AWS services and event sources.)

To work with AWS Lambda, we just have to upload the code to the AWS Lambda service. All other tasks and resources such as infrastructure, operating system, maintenance of the server, code monitoring, logs, and security are taken care of by AWS.

Advantages of Lambda

- Ease of working with code

- Log Provision

- Multi-Language Support

- Ease of code authoring and deploying

- Billing based on Usage

Need for Lambda

Using Lambda, you can add your own code to Amazon S3 requests to modify and process data. When you send a request through an S3 Object Lambda Access Point, Amazon S3 automatically calls your Lambda function and processes the output based on the request.

Since the S3 bucket does not allow us to view the files within the zip file, we are using a Lambda function to extract the zip file and put all the files in a folder in the destination bucket.

Using a Lambda function helps us skip unnecessary manual work. Without it:

- We would have to download the zip file from the bucket and extract it on a local PC

- To view the extracted objects from the bucket, we would need to upload them again

Using the Lambda function, we can extract the zip file in the bucket and access the objects directly. Moreover, it is quicker and more reliable.

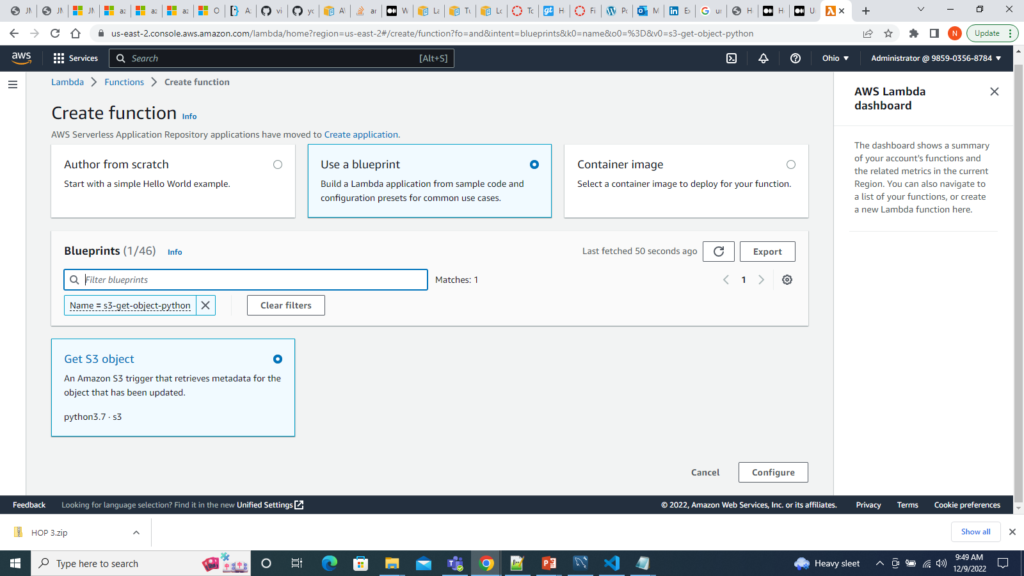

To create a Lambda function with the console

Steps to be followed:

1. In the AWS Management Console, search for Lambda. The Functions page of the Lambda console will be displayed.

2. Choose Create function.

3. Select Use a blueprint.

4. In the Filter blueprints box, search S3-get-object-python and select the S3-get-object-python blueprint.

5. Choose Get-S3-Object

6. Under Basic Information, follow the below steps

- Provide your Function name

- For Execution role, choose Create a new role from AWS policy templates.

- For Role Name, provide a name. Any role name works, as long as it is unique.

We choose Create a new role from AWS policy templates as the execution role because it lets us attach policies specifically to the role we have created.

7. Under the S3 trigger, follow the below steps

- For S3 bucket, choose a bucket. (Note: The bucket region should be the same as the function region)

- For Event Type, in the drop-down under All object create events, choose PUT

The PUT event fires when a new object is created or an existing object is overwritten by a PUT request. Since our function should run whenever a zip file is uploaded to the bucket, we use the PUT event to trigger it.

8. Click on the Acknowledgement check box and proceed to Create function

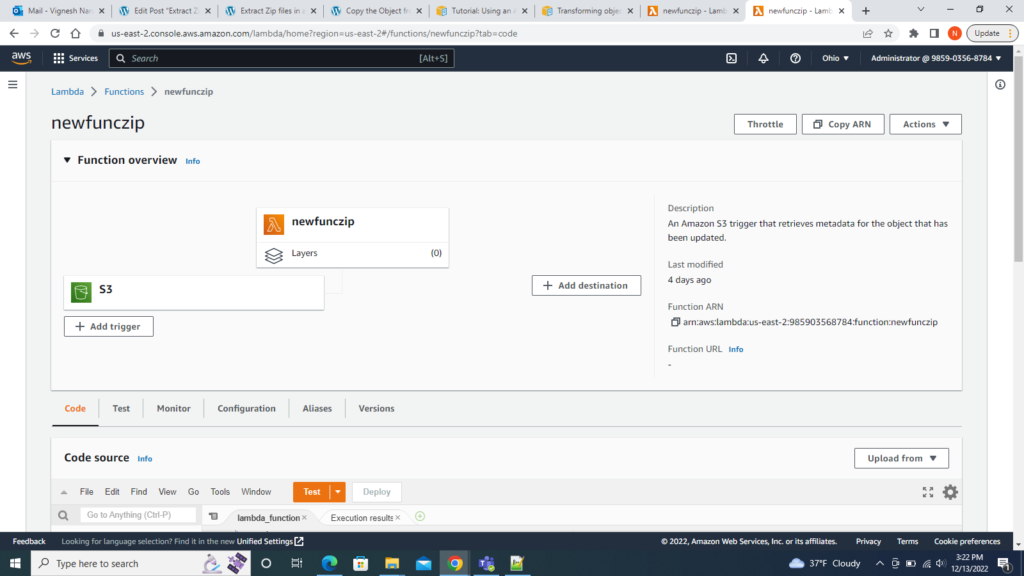

9. The Function overview will be displayed. Under the Code tab, you can see the template for the function

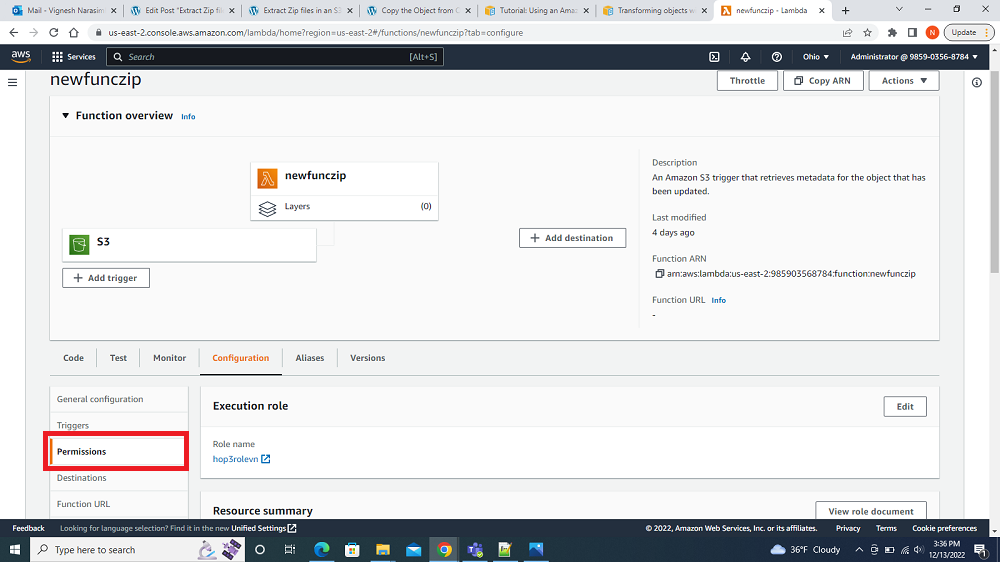
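The S3 trigger configured in step 7 passes an event describing the uploaded object to the handler. As a minimal sketch, assuming the standard S3 event notification layout (`Records` → `s3` → `bucket.name` / `object.key`), the bucket and key of each uploaded zip file could be read like this. The helper name `zip_objects_from_event` and the sample event are illustrative, not part of the blueprint.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus


def zip_objects_from_event(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for every .zip object named in an S3 event."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events (spaces become '+')
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.lower().endswith(".zip"):
            results.append((bucket, key))
    return results


# Hypothetical, trimmed-down event for illustration only
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "destbuckvn"},
                "object": {"key": "uploads/my+archive.zip"}}},
        {"s3": {"bucket": {"name": "destbuckvn"},
                "object": {"key": "notes.txt"}}},
    ]
}

if __name__ == "__main__":
    print(zip_objects_from_event(sample_event))
```

Reading the key from the event lets the function handle only the file that was just uploaded, rather than scanning the whole bucket on every invocation.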

Set up your Policies and Permission

In order to access and modify your bucket, you need to attach certain policies to the role which you created earlier.

Steps to be followed:

- In the Function overview page, click on the Configuration tab

- Under the Configuration tab, in the left menu bar choose Permissions

- In Execution role, you will see the role name which you have created.

- Click on the Role name

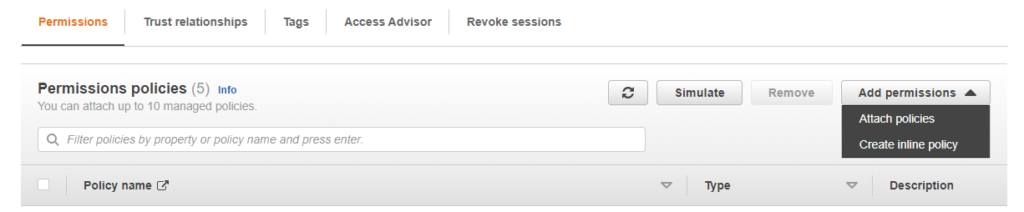

- In IAM dashboard, under Permissions tab you can see Permissions policies

- Click Add permissions, and choose Create inline policy

- We are going to choose two services: S3 and CloudWatch Logs

- S3 gives us the access needed to create and modify the objects within the bucket.

- CloudWatch Logs records the logs of our function's actions, which helps us check if anything goes wrong. We can view the logs and find the error.

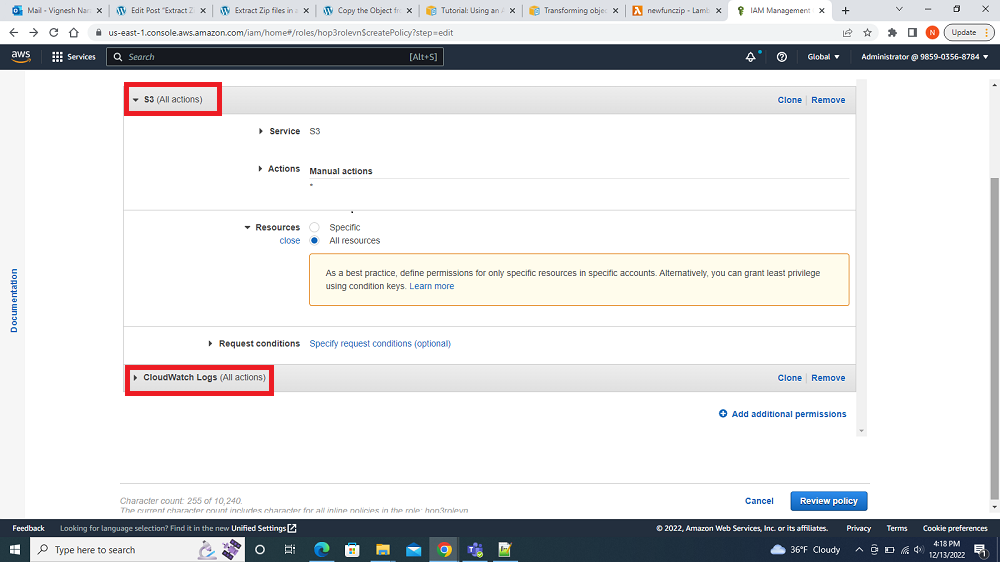

- In Create Policy, go to Select a service

- In Service, click Choose a service and search for S3. Select S3

- In Actions, under Manual actions, check the box All S3 actions (s3:*)

- In Resources, click the radio button All resources

- Don’t click Review policy yet, since we need to add one more service.

- Now, click Add additional permissions

- Follow the same steps, this time searching for CloudWatch Logs

- In Actions and Resources, do the same as in the previous step.

- Now click Review policy

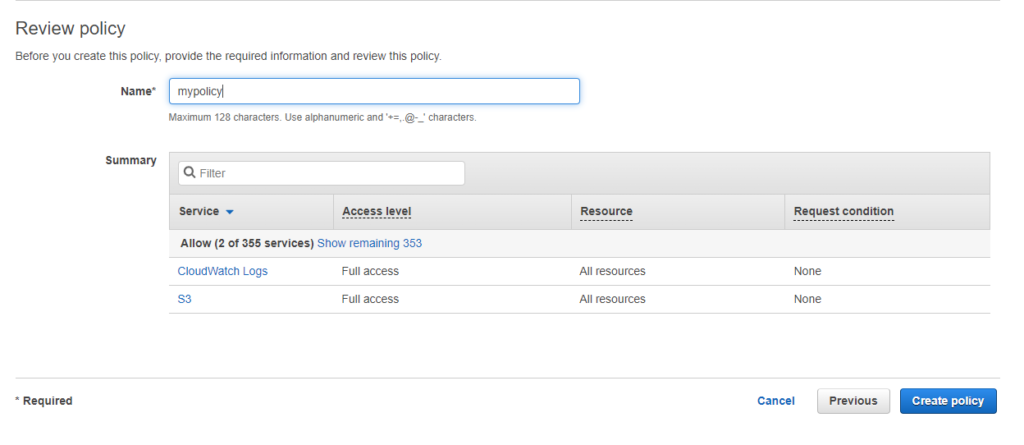

- In Review Policy, you will see the policies selected from the previous step. Provide a Name and Click Create Policy

Once all the above steps are done, the policy will be added to the role that you have created. Now the function will be able to perform any action on the bucket which you have selected.
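Granting all S3 actions on all resources is the quickest way to get started, but a narrower policy is usually preferable. The sketch below builds one possible least-privilege policy document for this function, assuming the bucket names used later in this post (`destbuckvn` as source, `buckzipvn` as target). The helper `build_policy` is hypothetical; the printed JSON could be pasted into the JSON tab of the inline policy editor.

```python
import json

# Bucket names taken from the code later in this post; replace with your own
SOURCE_BUCKET = "destbuckvn"
TARGET_BUCKET = "buckzipvn"


def build_policy(source_bucket: str, target_bucket: str) -> dict:
    """Return an IAM policy document limited to what the unzip function needs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # List the objects in the source bucket
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{source_bucket}"],
            },
            {   # Read the zip files from the source bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{source_bucket}/*"],
            },
            {   # Write the extracted files to the target bucket
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{target_bucket}/*"],
            },
            {   # Let the function write its logs to CloudWatch Logs
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": ["arn:aws:logs:*:*:*"],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_policy(SOURCE_BUCKET, TARGET_BUCKET), indent=2))
```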

Implement extraction of Zip file using Lambda function

The basic steps required to run the program:

- Read the zip file from S3 using the Boto3 S3 resource Object into a BytesIO buffer object

- Open the object using the zipfile module.

- Iterate over each file in the zip file using the namelist method

- Write the file back to another bucket in S3 using the resource meta.client.upload_fileobj method
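The read, open, and iterate steps above can be tried locally without S3. This is a minimal sketch using only the standard library: `make_sample_zip` builds a small archive in memory to stand in for the bytes read from S3, and `iter_zip_members` walks it. Both function names are illustrative and do not appear in the Lambda code.

```python
import zipfile
from io import BytesIO


def iter_zip_members(zip_bytes: bytes):
    """Yield (name, content) for each file in a zip archive held in memory."""
    with zipfile.ZipFile(BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if name.endswith("/"):
                # Directory entries carry no data, so skip them
                continue
            with archive.open(name) as member:
                yield name, member.read()


def make_sample_zip() -> bytes:
    """Build a small zip archive in memory to stand in for the S3 object."""
    buffer = BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("docs/readme.txt", "hello")
        archive.writestr("data.csv", "a,b\n1,2\n")
    return buffer.getvalue()


if __name__ == "__main__":
    for name, content in iter_zip_members(make_sample_zip()):
        print(name, len(content))
```

In the Lambda function, the bytes come from the S3 object's body instead of `make_sample_zip`, and each member is uploaded rather than printed.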

Steps to be followed:

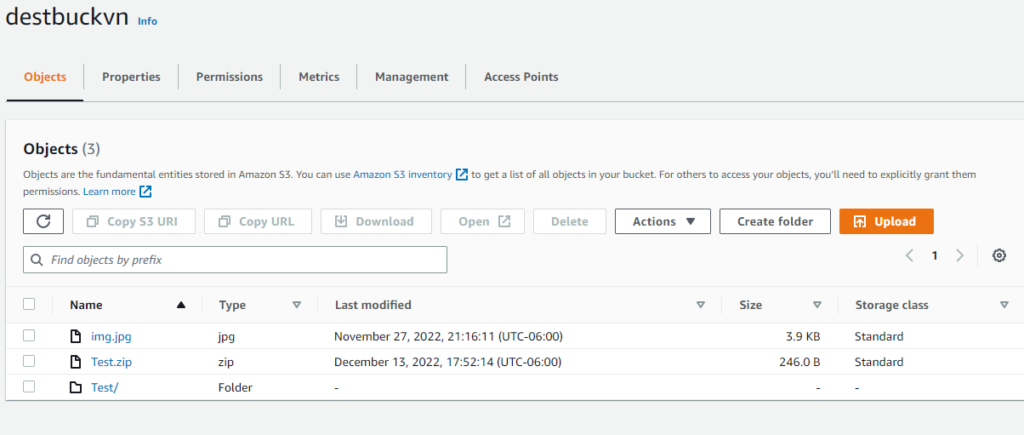

- Upload a zip file to the S3 bucket (i.e. the source bucket).

- Go to the Function overview of your Lambda function and click on the Code tab. Run the program code.

- The Lambda function extracts all the files inside the zip file and uploads them to another S3 bucket (the target bucket)

Let’s look at the piece of code and understand how it works.

In order to identify and open the zip file within the bucket, we need to import the zipfile library in our code.

- import zipfile – The ZIP file format is a common archive and compression standard. This module provides tools to create, read, write, append, and list a ZIP file.

- zipfile.ZipFile – This class is used for reading and writing ZIP files.

```python
import boto3
from io import BytesIO
import zipfile


def lambda_handler(event, context):
    s3_resource = boto3.resource('s3')
    source_bucket = 'destbuckvn'   # bucket that holds the zip files
    target_bucket = 'buckzipvn'    # bucket that receives the extracted files
    my_bucket = s3_resource.Bucket(source_bucket)

    # Walk every object in the source bucket and pick out the zip files
    for file in my_bucket.objects.all():
        if str(file.key).endswith('.zip'):
            zip_object = s3_resource.Object(bucket_name=source_bucket, key=file.key)
            # Read the whole zip file into an in-memory buffer
            buffer = BytesIO(zip_object.get()["Body"].read())
            z = zipfile.ZipFile(buffer)
        else:
            print(file.key + " is not a zip file")
```

- BytesIO(zip_object.get()["Body"].read()) – The source zip file is read into an in-memory bytes buffer so that it can be opened with Python's zipfile package. The code processes only the objects whose key ends with .zip.

- zipfile.ZipFile() – Opens a ZIP file, where file can be a path to a file or a file-like object such as the BytesIO buffer used here.

The code below fetches all the filenames in the zip file and uploads each file to the target bucket.

- ZipFile.namelist() – Returns a list of archive members by name.

- ZipFile.open() – Accesses a member of the archive as a binary file-like object. name can be either the name of a file within the archive or a ZipInfo object.

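```python
# Runs inside the `if` branch above, once the zip file `z` has been opened
for filename in z.namelist():
    try:
        # Stream each archive member to the target bucket under its own name
        s3_resource.meta.client.upload_fileobj(
            z.open(filename), Bucket=target_bucket, Key=filename)
    except Exception as e:
        print(e)
```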

- s3_resource.meta.client.upload_fileobj – Uploads a file-like object to the target bucket.

Now we need to put the above pieces together. The final code is below. Replace the existing code in the function with it.
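The upload call uses each member's name as the object key, so a "folder" appears in the target bucket only when the archive itself contains one. If you would rather group the extracted files under a prefix named after the zip file, one option is a small helper like the hypothetical `target_key` below. It is an illustration, not part of the original function.

```python
import posixpath


def target_key(zip_key: str, member_name: str) -> str:
    """Prefix member_name with the zip file's base name (without its extension)."""
    stem = posixpath.splitext(posixpath.basename(zip_key))[0]
    return f"{stem}/{member_name}"


if __name__ == "__main__":
    print(target_key("uploads/photos.zip", "2023/a.png"))
```

The result would be passed as the `Key` argument of `upload_fileobj` in place of the bare filename. S3 has no real folders; the console simply displays the part of the key before each `/` as one.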

```python
import boto3
from io import BytesIO
import zipfile


def lambda_handler(event, context):
    s3_resource = boto3.resource('s3')
    source_bucket = 'destbuckvn'   # bucket that holds the zip files
    target_bucket = 'buckzipvn'    # bucket that receives the extracted files
    my_bucket = s3_resource.Bucket(source_bucket)

    # Walk every object in the source bucket and process only the zip files
    for file in my_bucket.objects.all():
        if str(file.key).endswith('.zip'):
            zip_object = s3_resource.Object(bucket_name=source_bucket, key=file.key)
            # Read the whole zip file into an in-memory buffer
            buffer = BytesIO(zip_object.get()["Body"].read())
            z = zipfile.ZipFile(buffer)
            # Upload each archive member to the target bucket under its own name
            for filename in z.namelist():
                try:
                    s3_resource.meta.client.upload_fileobj(
                        z.open(filename), Bucket=target_bucket, Key=filename)
                except Exception as e:
                    print(e)
        else:
            print(file.key + " is not a zip file")
```

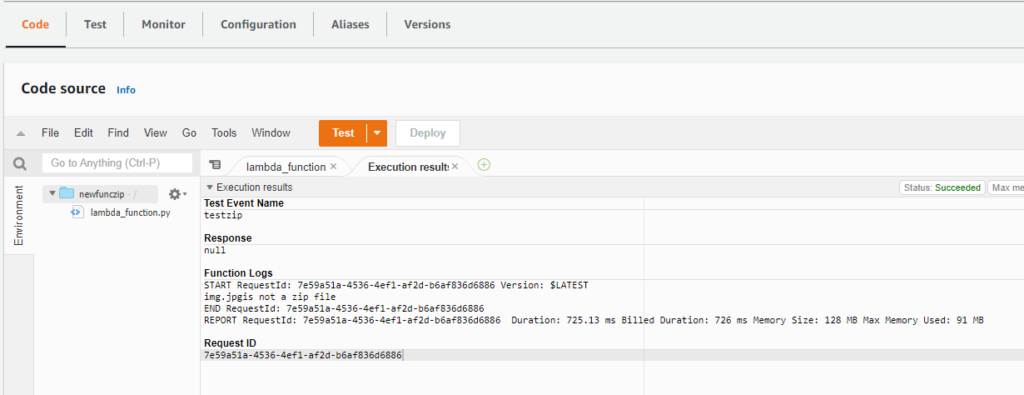

Then, deploy and test the code.

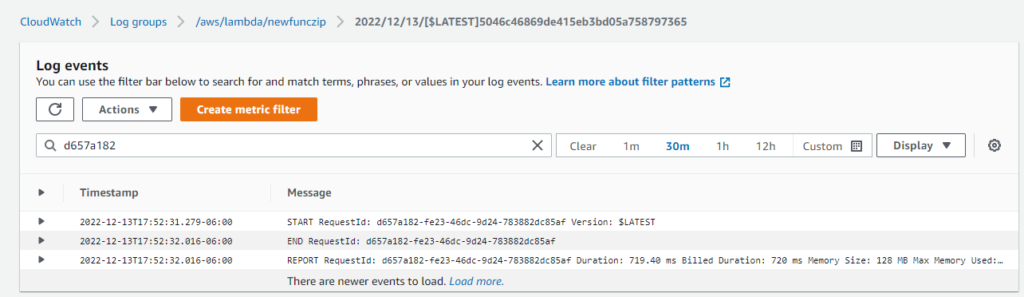

Once it executes successfully, you can look up the Request ID in CloudWatch Logs to see the logs for that run.
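To make a run easier to find in the logs, the function can write the request ID itself. This is a minimal sketch, assuming the Python runtime's standard `logging` module and the `aws_request_id` attribute of the Lambda context object. The helper `log_extraction` and the `FakeContext` class are illustrative, for trying it outside Lambda.

```python
import logging

logger = logging.getLogger()
logger.setLevel(logging.INFO)


def log_extraction(context, zip_key: str, member_count: int) -> str:
    """Log one line per processed zip file, tagged with the request ID."""
    message = f"request={context.aws_request_id} zip={zip_key} members={member_count}"
    logger.info(message)
    return message


class FakeContext:
    """Stand-in for the Lambda context object when running locally."""
    aws_request_id = "local-test-id"


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    log_extraction(FakeContext(), "archive.zip", 3)
```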

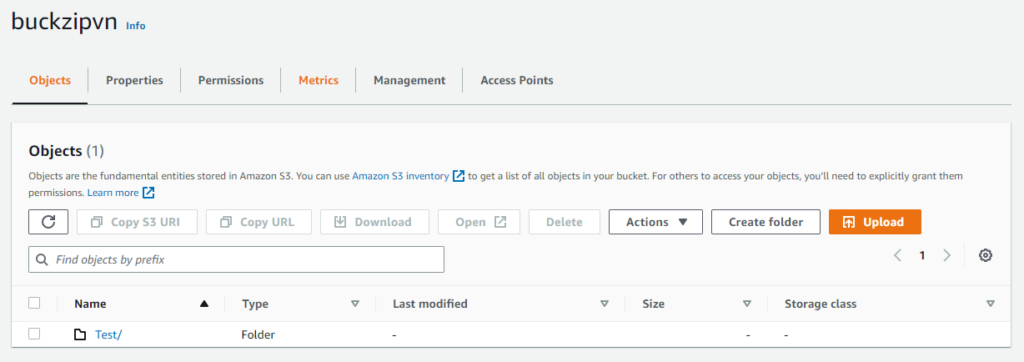

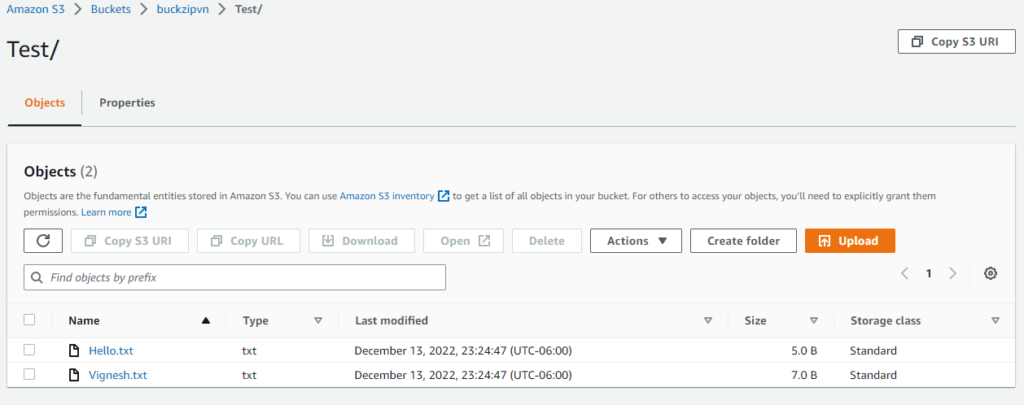

Check the target bucket. The extracted files will be there, inside a folder if the zip file contained one.

Now you will be able to access the files in the target bucket.

You can even use the same bucket as both source and target, in which case the files are extracted within that bucket.

I hope you found this blog informative. Using a Lambda function, we can unzip a file within a bucket and access the objects inside it. AWS Lambda integrates with many AWS services and is supported by serverless frameworks, which makes writing and deploying Lambda code easy. It also automates the work for us and helps us skip manual steps. As a result, we can access the objects inside a zip file, which S3 does not let us view while they are zipped.

Thanks for reading my blog!!