In this article, we take a look at how to copy an object from one AWS S3 bucket to another using Boto3, the AWS software development kit (SDK) for Python.

Terminology

AWS S3 bucket

An Amazon S3 bucket is a public cloud storage resource available in Amazon Web Services’ (AWS) Simple Storage Service (S3), an object storage offering. Amazon S3 buckets, which are similar to file folders, store objects, which consist of data and its descriptive metadata. A user first creates a bucket and gives it a globally unique name in the desired AWS Region. To cut costs and latency, AWS advises customers to select Regions that are close to their location. Each object is then stored in an S3 storage class (tier), and each class has a different level of redundancy, cost, and accessibility. Objects in different storage classes can be stored in the same bucket.
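Because a bucket name must be globally unique and follow S3's naming rules, it can help to check a candidate name locally before creating the bucket. The sketch below checks only a subset of the documented general-purpose bucket naming rules (3 to 63 characters; lowercase letters, numbers, dots, and hyphens; starting and ending with a letter or number; no adjacent dots; not formatted like an IP address). The helper `is_valid_bucket_name` is hypothetical, and it cannot tell whether a name is already taken; only S3 can.

```python
import re

# Lowercase letters, digits, dots and hyphens; must start and end with a letter or digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")
# Names that look like an IPv4 address (for example 192.168.5.4) are not allowed.
_IP_RE = re.compile(r"\d{1,3}(\.\d{1,3}){3}")


def is_valid_bucket_name(name: str) -> bool:
    """Hypothetical helper: checks a subset of the S3 bucket naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    if not _BUCKET_RE.fullmatch(name):
        return False
    if ".." in name:
        return False
    if _IP_RE.fullmatch(name):
        return False
    return True


if __name__ == "__main__":
    for candidate in ["mdixit-aws-bucket", "blog2bucket", "My_Bucket", "ab"]:
        print(candidate, is_valid_bucket_name(candidate))
```

Using `fullmatch` rather than `match` ensures the whole name is checked, so a stray trailing character cannot slip through.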

Software Development Kit

A software development kit (SDK) is a set of tools provided by the maker of a platform, such as a cloud platform like AWS or Azure, a hardware platform, an operating system, or a programming language. A good SDK provides the components a developer needs to build new applications for that product and its ecosystem.

Examples of SDKs include the Java Development Kit (JDK), the Mac OS X SDK, the iPhone SDK, and the Boto3 SDK.

Boto3

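Boto3 is the AWS SDK for Python. It lets Python programs create, configure, and manage AWS services such as Amazon S3. The official documentation provides detailed information about Boto3: https://boto3.amazonaws.com/v1/documentation/api/latest/index.html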
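As a first look at the API, the sketch below creates an S3 client with `boto3.client("s3")` and lists the buckets in the account with `list_buckets()`. The response is a dictionary whose `Buckets` entry is a list of dictionaries that include a `Name` key. The helper names are hypothetical, and `list_all_buckets` assumes boto3 is installed and AWS credentials are configured (see the AWS Configuration section).

```python
from typing import Any, Dict, List


def bucket_names(response: Dict[str, Any]) -> List[str]:
    """Extract bucket names from a list_buckets() response dictionary."""
    return [bucket["Name"] for bucket in response.get("Buckets", [])]


def list_all_buckets() -> List[str]:
    """Call S3 and return the names of the buckets in the account.

    Assumes boto3 is installed and AWS credentials are configured.
    """
    import boto3  # imported here so bucket_names() works without boto3

    s3_client = boto3.client("s3")
    return bucket_names(s3_client.list_buckets())


# Hand-written response with the same shape S3 returns (illustrative only):
sample = {"Buckets": [{"Name": "mdixit-aws-bucket"}, {"Name": "blog2bucket"}]}
print(bucket_names(sample))
```

Separating the response parsing from the network call keeps the parsing easy to try out without an AWS account.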

Initial Steps

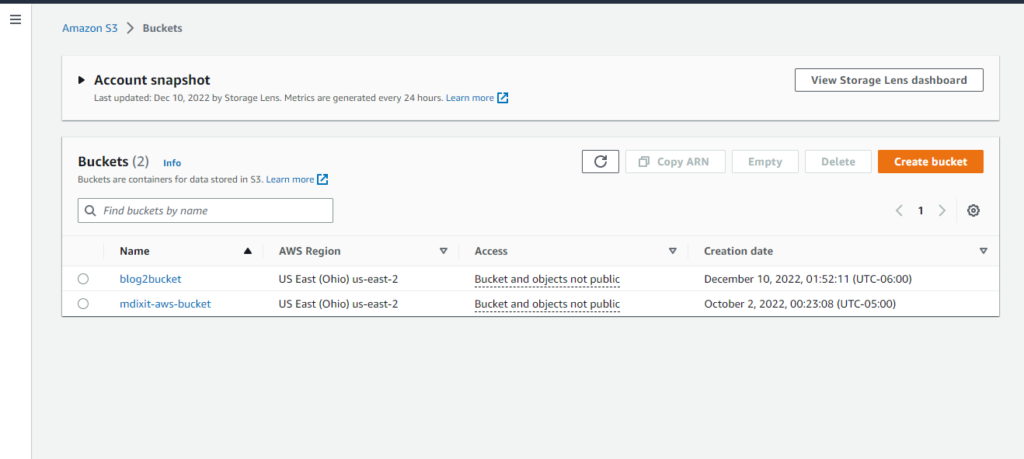

Create two S3 buckets to copy objects

Log in to your AWS account with the correct credentials.

For the steps to create an AWS S3 bucket, see the guide "Get Some Personal Storage (AWS)".

For the purpose of this blog, we need two S3 buckets: one to copy the file from and one to copy it to.
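The two buckets can also be created from Python. One detail worth knowing: outside the us-east-1 Region, `create_bucket` needs a `CreateBucketConfiguration` with a `LocationConstraint`, while in us-east-1 that setting should be omitted. The sketch below builds the request arguments for either case. The helper names are hypothetical, and `create_bucket` assumes boto3 is installed, credentials are configured, and the name is globally unique.

```python
from typing import Any, Dict


def create_bucket_kwargs(bucket_name: str, region: str) -> Dict[str, Any]:
    """Build keyword arguments for the S3 create_bucket() call in a given Region."""
    kwargs: Dict[str, Any] = {"Bucket": bucket_name}
    # us-east-1 is the default location, so no LocationConstraint is sent there.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket(bucket_name: str, region: str) -> None:
    """Create a bucket (assumes boto3 is installed and credentials are configured)."""
    import boto3

    s3_client = boto3.client("s3", region_name=region)
    s3_client.create_bucket(**create_bucket_kwargs(bucket_name, region))
```

For example, `create_bucket("my-example-source-bucket", "us-east-2")` would create a bucket in us-east-2; the bucket name here is a placeholder.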

Open a remote desktop session to the Ubuntu development PC using the Bitvise SSH Client.

To create the development PC in the cloud, follow the steps under the “Do project on AWS” section of this guide: https://uwm-cloudblog.net/projects-all/project-2-development-pc-in-the-cloud/hands-on-project-2-development-pc-in-the-cloud/

After opening the Ubuntu desktop, run these commands in a terminal.

This command refreshes the package lists so that later installs get current versions:

`sudo apt update`

This command removes automatically installed packages that are no longer needed, which would otherwise only waste disk space on the PC:

`sudo apt autoremove -y`

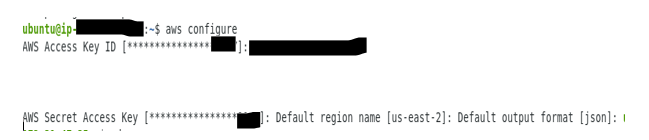

AWS Configuration

To make sure our development environment can access AWS resources, we need to configure AWS keys on our development PC.

First, open a terminal on the development PC and run this command to configure the AWS keys:

`aws configure`

The command prompts for your AWS access key ID, secret access key, default region name, and default output format. This is how the command line looks after running the command:

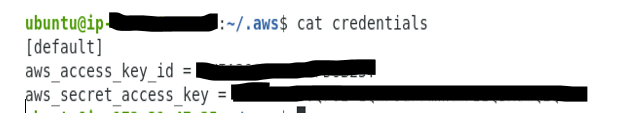

Next, check that the keys were saved:

`cd ~/.aws`

`cat credentials`

The stored credentials will be displayed, which means programs on the development PC, including the one we run from the IDE, can access AWS resources. Do not share this output, since it contains your secret access key. This is what you will see after executing the command.
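`aws configure` stores the keys in an INI-style file, `~/.aws/credentials`, with one section per profile (`[default]` unless you name another). As an alternative to printing the whole file with `cat`, the sketch below uses Python's standard `configparser` to report which profiles have both keys set, without printing the secrets. The function names are hypothetical.

```python
import configparser
from pathlib import Path
from typing import List

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def profiles_with_keys(credentials_text: str) -> List[str]:
    """Return profile names that define both an access key ID and a secret key."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    return [
        name
        for name in parser.sections()
        if all(parser.get(name, key, fallback="").strip() for key in REQUIRED_KEYS)
    ]


def check_local_credentials() -> List[str]:
    """Read ~/.aws/credentials (written by `aws configure`) and list usable profiles."""
    path = Path.home() / ".aws" / "credentials"
    if not path.exists():
        return []
    return profiles_with_keys(path.read_text())
```

Running `print(check_local_credentials())` after `aws configure` should list `default`; an empty list means the keys were not saved where Boto3 looks for them by default.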

Program Execution

To run the program, we use the Visual Studio Code (VS Code) IDE.

Open VS Code on your development PC.

If VS Code is not installed, follow this guide: Install Development Tools on Your Cloud PC

After opening VS Code, create a Python file with a name of your choice. For the purpose of this blog, I am using s3copy.py.

Note: Before running your program, make sure you select the correct Python interpreter: press Ctrl+Shift+P and choose “Python: Select Interpreter”.

Boto3 installation

Before running your program, check that boto3 is installed; otherwise the import fails with `ModuleNotFoundError: No module named 'boto3'`. If the boto3 library cannot be imported, follow these steps.

In the VS Code terminal, run these commands to install boto3:

`pipenv install numpy`

`pipenv install boto3`

The copy script only needs boto3; numpy is not used by it. Once boto3 is installed correctly, and the Pipenv environment is selected as the interpreter, VS Code will import boto3 successfully.
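To confirm that the interpreter selected in VS Code is the one where boto3 was installed, you can run a small check like the sketch below. It uses the standard-library `importlib.util.find_spec` to see whether a module can be found without importing it, and prints `sys.executable` so you can compare it with the interpreter shown in VS Code. The helper name `is_installed` is hypothetical.

```python
import importlib.util
import sys


def is_installed(module_name: str) -> bool:
    """Return True if module_name can be found by the current interpreter."""
    return importlib.util.find_spec(module_name) is not None


print("Interpreter:", sys.executable)
for name in ("boto3", "botocore"):
    status = "installed" if is_installed(name) else "NOT installed (try: pipenv install boto3)"
    print(f"{name}: {status}")
```

If boto3 shows as not installed even after `pipenv install boto3`, the interpreter path printed above is usually not the Pipenv environment.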

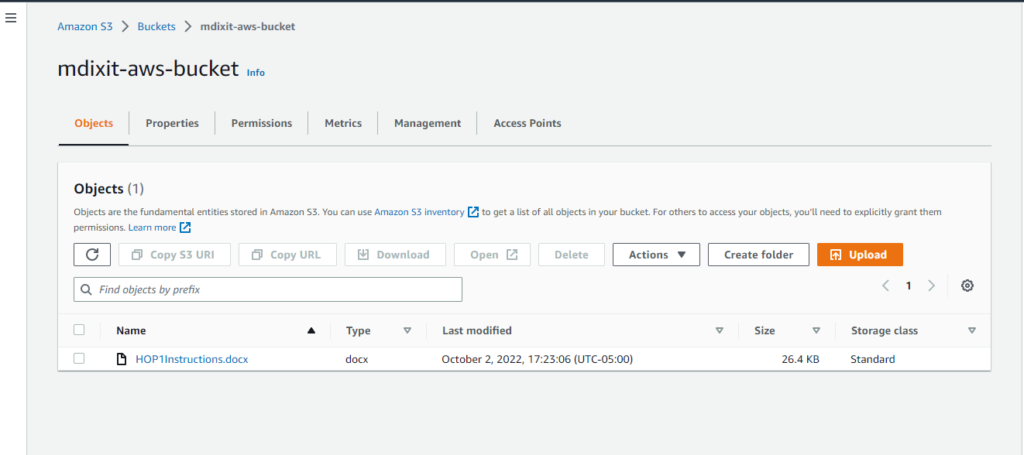

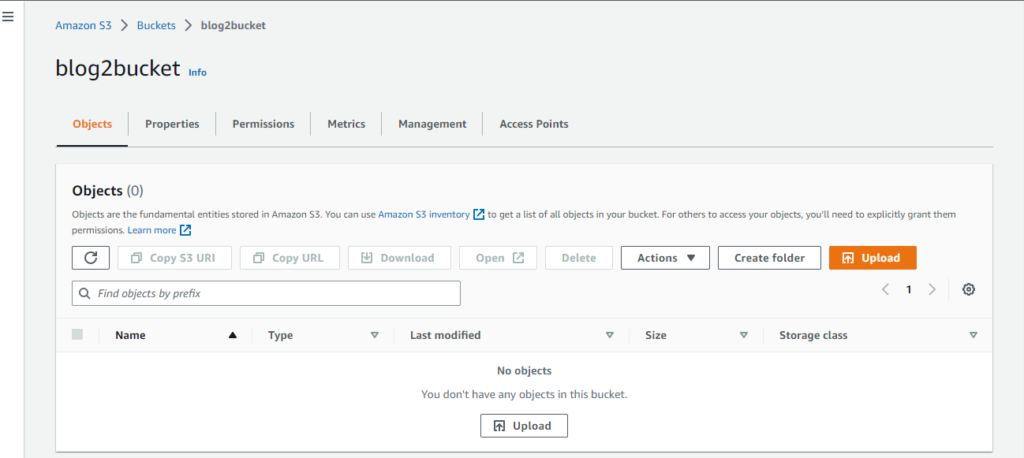

S3 Buckets before Program execution

These are the S3 buckets and the object used in the program:

mdixit-aws-bucket: bucket1, the source bucket from which the object will be copied to bucket2.

blog2bucket: bucket2, the destination bucket where the object from bucket1 will be copied.

HOP1instructions.docx: The object that will be copied to bucket2.
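Besides the console, the contents of each bucket can be checked from Python with the S3 client's `list_objects_v2` call. For an empty bucket the response has no `Contents` key at all, so the sketch reads it with `.get`. A single call returns at most 1,000 keys; this sketch ignores pagination, which is fine for small demo buckets. The helper names are hypothetical, and `list_keys` assumes boto3 is installed and credentials are configured.

```python
from typing import Any, Dict, List


def object_keys(response: Dict[str, Any]) -> List[str]:
    """Extract object keys from a list_objects_v2() response.

    An empty bucket returns no 'Contents' entry, so default to an empty list.
    """
    return [obj["Key"] for obj in response.get("Contents", [])]


def list_keys(bucket_name: str) -> List[str]:
    """List up to 1,000 keys in a bucket (no pagination)."""
    import boto3

    s3_client = boto3.client("s3")
    return object_keys(s3_client.list_objects_v2(Bucket=bucket_name))


# Hand-written responses shaped like the ones S3 returns (illustrative only):
print(object_keys({"KeyCount": 0}))
print(object_keys({"Contents": [{"Key": "HOP1instructions.docx"}], "KeyCount": 1}))
```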

These are screenshots of S3 buckets before execution:

Program Code

This is the code that copies an object from one S3 bucket to another S3 bucket using the Python SDK:

```python
import sys

import boto3
from botocore.exceptions import ClientError


def main():
    # bucket1, bucket1_file and bucket2 are passed on the command line:
    #   bucket1:      the S3 bucket the file will be copied from
    #   bucket1_file: the key of the file (object) to copy
    #   bucket2:      the S3 bucket the file will be copied to
    command_line = sys.argv[1:]

    # If too few parameters are given, show a usage message and exit
    if len(command_line) < 3:
        print('Not enough parameters. Try: python s3copy.py <bucket1> <bucket1_file> <bucket2>')
        sys.exit(1)

    bucket1 = command_line[0]
    bucket1_file = command_line[1]
    bucket2 = command_line[2]
    # The copy keeps the same object key in the destination bucket
    bucket2_file = bucket1_file

    print('From - bucket: ' + bucket1)
    print('From - object: ' + bucket1_file)
    print('To - bucket: ' + bucket2)
    print('To - object: ' + bucket2_file)

    # Create an S3 client using the credentials set up with `aws configure`
    s3_client = boto3.client('s3')

    # Copy the object from bucket1 to bucket2
    try:
        print('Copying object from bucket1 to bucket 2')
        copy_source = {
            'Bucket': bucket1,
            'Key': bucket1_file,
        }
        # Managed copy: Boto3 switches to a multipart copy for large objects
        s3_client.copy(copy_source, bucket2, bucket2_file)
        print('The file is Copied in the bucket 2')

    # Print a friendlier message for common errors; re-raise anything else
    except ClientError as e:
        error_code = e.response['Error']['Code']
        if error_code == '404':
            print('Error: Not Found, invalid parameters')
        elif error_code == '400':
            print('Error: Bad request, problem with bucket')
        elif error_code == '403':
            print('Error: Forbidden, bucket forbidden')
        elif error_code == 'AccessDenied':
            print('Error: Access denied')
        elif error_code == 'InvalidBucketName':
            print('Error: Invalid bucket name')
        elif error_code == 'NoSuchBucket':
            print('Error: No such bucket')
        else:
            raise


if __name__ == '__main__':
    main()
```

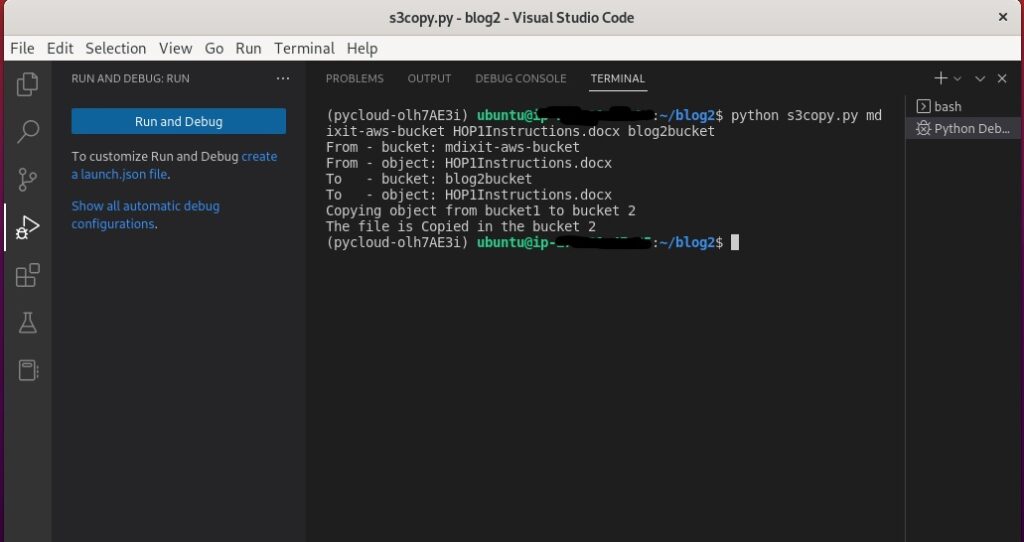
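The chain of `if`/`elif` branches in the program's `except` block can also be written as a lookup table, which keeps all the messages in one place. The sketch below maps the same error codes to the same messages and returns `None` for unknown codes so the caller can re-raise, as the program does. The names `ERROR_MESSAGES` and `describe_error` are hypothetical.

```python
from typing import Any, Dict, Optional

# Same error codes and messages as the except block in s3copy.py.
ERROR_MESSAGES = {
    "404": "Error: Not Found, invalid parameters",
    "400": "Error: Bad request, problem with bucket",
    "403": "Error: Forbidden, bucket forbidden",
    "AccessDenied": "Error: Access denied",
    "InvalidBucketName": "Error: Invalid bucket name",
    "NoSuchBucket": "Error: No such bucket",
}


def describe_error(error_response: Dict[str, Any]) -> Optional[str]:
    """Return a friendly message for a ClientError response, or None if unknown."""
    code = error_response.get("Error", {}).get("Code", "")
    return ERROR_MESSAGES.get(code)


# Inside main(), the except block would then become:
#     except ClientError as e:
#         message = describe_error(e.response)
#         if message is None:
#             raise
#         print(message)
```

Adding support for a new error code then only means adding one dictionary entry.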

Run the following command to execute the script:

`python <file_name.py> <bucket1_name> <bucket1_file> <bucket2>`

Results

After execution, the command-line output looks like this:

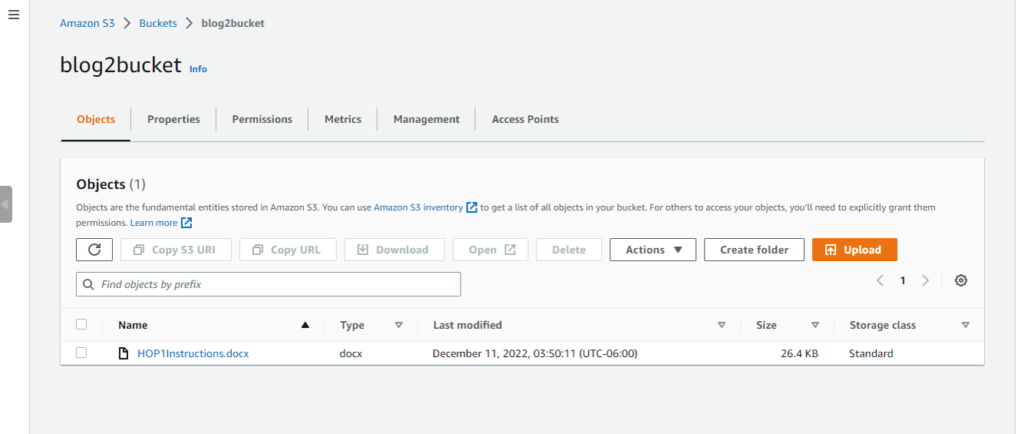

Now let's look at bucket2, named ‘blog2bucket’, where the file HOP1Instructions.docx from bucket1, named ‘mdixit-aws-bucket’, should have been copied.

As we observed in the “S3 Buckets before Program execution” section, the S3 bucket named ‘blog2bucket’ was empty. Now we can see that the file was successfully copied from bucket1 to bucket2.
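The program uses `copy()`, a managed transfer method that Boto3 adds to the S3 client and that switches to a multipart copy for large objects. For objects up to 5 GB, the lower-level `copy_object()` call performs the copy in a single request. The sketch below builds the arguments for that call; the helper names are hypothetical, and `copy_small_object` assumes boto3 is installed and credentials are configured.

```python
from typing import Any, Dict, Optional


def copy_object_kwargs(
    src_bucket: str, src_key: str, dst_bucket: str, dst_key: Optional[str] = None
) -> Dict[str, Any]:
    """Build arguments for S3 copy_object(); the destination key defaults to the source key."""
    return {
        "Bucket": dst_bucket,
        "Key": dst_key or src_key,
        "CopySource": {"Bucket": src_bucket, "Key": src_key},
    }


def copy_small_object(src_bucket: str, src_key: str, dst_bucket: str) -> None:
    """Copy one object (up to 5 GB) with a single CopyObject request."""
    import boto3

    s3_client = boto3.client("s3")
    s3_client.copy_object(**copy_object_kwargs(src_bucket, src_key, dst_bucket))
```

For small files like the one in this blog either call works; `copy()` is the safer default when object sizes are unknown.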

Thank you for reading!